Looking for packets from three particular subnets

Chris Mohan --- Internet Storm Center Handler on Duty

Attack on Yahoo mail accounts

Chris Mohan --- Internet Storm Center Handler on Duty


New gTLDs appearing in the root zone

Over the last month or so, ICANN has added new gTLDs (generic top-level domains) to the root zone. This is the beginning of a process of adding a couple hundred new gTLDs, for which ICANN collected applications last year.

For a full list of currently delegated gTLDs, see http://newgtlds.icann.org/en/program-status/delegated-strings

It is up to the organization that received each gTLD (the registry operator) to decide how to use it. Some are limited to particular organizations; others are already open to the public for pre-registration.

This matters if you validate domain names in detail, for example as part of e-mail address validation: code that assumes TLDs are only a few characters long will now reject valid names. The longest one I was able to spot was ".INTERNATIONAL".
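As an illustration, here is a JavaScript sketch of a hostname syntax check that does not assume short TLDs. The function name and the exact rules are assumptions for this example: labels of 1 to 63 letters, digits or hyphens (no leading or trailing hyphen), at most 253 characters overall, and a TLD that is either all letters or an IDN "xn--" label. It checks syntax only, not whether the TLD is actually delegated.

```javascript
// Hypothetical helper: syntax check for a hostname (e.g. the domain part of an
// e-mail address) that accepts long TLDs such as "international".
const LABEL = /^[a-z0-9](?:[a-z0-9-]{0,61}[a-z0-9])?$/i;
// TLD: 2-63 letters, or an IDN A-label ("xn--" plus up to 59 more characters).
const TLD = /^(?:[a-z]{2,63}|xn--[a-z0-9-]{1,59})$/i;

function isPlausibleHostname(name) {
  if (name.endsWith(".")) name = name.slice(0, -1); // allow a trailing root dot
  if (name.length === 0 || name.length > 253) return false;
  const labels = name.split(".");
  if (labels.length < 2) return false; // require at least "label.tld"
  const tld = labels[labels.length - 1];
  return labels.every((l) => LABEL.test(l)) && TLD.test(tld);
}

// The kind of pattern this replaces: TLDs limited to 2-4 letters.
const legacyPattern = /^[a-z0-9.-]+\.[a-z]{2,4}$/i;

console.log(isPlausibleHostname("example.international"));
console.log(legacyPattern.test("example.international"));
```

The first call accepts the name, while the legacy 2-to-4-letter pattern rejects it, which is exactly the failure mode new gTLDs expose.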

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute


IPv6 and isc.sans.edu (Update)

About 4 years ago, I published a quick diary summarizing our experience with IPv6 at the time [1]. Back then, IPv6 traffic to our site was minuscule: 1.3% of clients connecting to our server used IPv6. A lot has changed in IPv6 since then. Comcast, one of the largest US ISPs and an IPv6 pioneer, now offers IPv6 to more than 25% of its users [2]. Many mobile providers enable IPv6, and more users access our site from mobile devices than before. So I expected some increase in IPv6 traffic. Let's see what I found.

The short summary: we do see A LOT more IPv6 traffic, but auto-configured tunnels have pretty much gone away (probably a good thing).

Overall, the number of IPv6 clients roughly tripled, and about 4% of requests received by our web server now arrive via IPv6. Since we currently provide IPv6 access through a tunnel and proxy, which likely adds latency, we can only assume that there are more IPv6-capable clients out there, but that techniques like "happy eyeballs" make them prefer IPv4.

The difference is even more significant for tunnels. 6to4 tunnels make up only 0.3% of all IPv6 requests, and Teredo is insignificant (only about 100 requests total for all of last month). 2001::/16 remains the most popular /16 prefix, but 2002::/16 (the 6to4 prefix), which was #2 in 2010, no longer shows up.

Within 2001::/16, Hurricane Electric (2001:470::/32) still dominates, indicating that many clients still use tunnels. Across all prefixes, it is now followed by 2607:f740::/32 (Host Virtual), 2401:c900::/32 (Softlayer), 2a01:7a0::/32 (Velia) and 2607:f128::/32 (Steadfast).
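To produce a similar breakdown from your own logs, a JavaScript sketch along these lines can bucket client addresses: 2002::/16 is the 6to4 prefix, 2001:0::/32 is Teredo, and everything else is grouped by its /32. The function names are illustrative, the parser only handles plain textual IPv6 addresses (no embedded IPv4 notation or zone IDs), and this is not necessarily how the numbers above were produced.

```javascript
// Expand an IPv6 address in standard text form (with optional "::") into
// its eight 16-bit groups. Embedded IPv4 suffixes and zone IDs are not handled.
function expandIPv6(addr) {
  const halves = addr.toLowerCase().split("::");
  if (halves.length > 2) throw new Error(`invalid IPv6 address: ${addr}`);
  const head = halves[0] ? halves[0].split(":") : [];
  const tail = halves.length === 2 && halves[1] ? halves[1].split(":") : [];
  const missing = 8 - head.length - tail.length;
  if (halves.length === 1 ? missing !== 0 : missing < 1) {
    throw new Error(`invalid IPv6 address: ${addr}`);
  }
  const zeros = new Array(halves.length === 2 ? missing : 0).fill("0");
  return [...head, ...zeros, ...tail].map((g) => {
    if (!/^[0-9a-f]{1,4}$/.test(g)) throw new Error(`invalid IPv6 address: ${addr}`);
    return parseInt(g, 16);
  });
}

// Bucket an address: 6to4 (2002::/16), Teredo (2001:0::/32), or its /32 prefix.
function classifyIPv6(addr) {
  const g = expandIPv6(addr);
  if (g[0] === 0x2002) return "6to4";
  if (g[0] === 0x2001 && g[1] === 0x0000) return "Teredo";
  return `${g[0].toString(16)}:${g[1].toString(16)}::/32`;
}

// Count addresses per bucket, most common first.
function tallyPrefixes(addresses) {
  const counts = new Map();
  for (const a of addresses) {
    const key = classifyIPv6(a);
    counts.set(key, (counts.get(key) || 0) + 1);
  }
  return [...counts.entries()].sort((x, y) => y[1] - x[1]);
}
```

Feeding it the client addresses from a day of access logs gives a list of (bucket, count) pairs comparable to the prefix ranking above.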

As for reverse DNS, only very few ISPs appear to have it configured for IPv6, even now.
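For reference, IPv6 reverse lookups use the "nibble" format under ip6.arpa: the 32 hex digits of the fully expanded address, reversed and separated by dots. A minimal JavaScript sketch of building that name (the helper names are illustrative; to test a live PTR record, Node's built-in `dns.promises.reverse()` performs the actual lookup):

```javascript
// Expand an IPv6 address (with optional "::") into eight 16-bit groups.
// Embedded IPv4 suffixes and zone IDs are not handled.
function expandIPv6(addr) {
  const halves = addr.toLowerCase().split("::");
  if (halves.length > 2) throw new Error(`invalid IPv6 address: ${addr}`);
  const head = halves[0] ? halves[0].split(":") : [];
  const tail = halves.length === 2 && halves[1] ? halves[1].split(":") : [];
  const missing = 8 - head.length - tail.length;
  if (halves.length === 1 ? missing !== 0 : missing < 1) {
    throw new Error(`invalid IPv6 address: ${addr}`);
  }
  const zeros = new Array(halves.length === 2 ? missing : 0).fill("0");
  return [...head, ...zeros, ...tail].map((g) => {
    if (!/^[0-9a-f]{1,4}$/.test(g)) throw new Error(`invalid IPv6 address: ${addr}`);
    return parseInt(g, 16);
  });
}

// Build the PTR query name: all 32 nibbles, least significant first.
function ip6ArpaName(addr) {
  const hex = expandIPv6(addr).map((g) => g.toString(16).padStart(4, "0")).join("");
  return hex.split("").reverse().join(".") + ".ip6.arpa";
}

console.log(ip6ArpaName("2001:db8::1"));
```

An ISP that has not delegated or populated these names is what shows up as missing reverse DNS for its IPv6 clients.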

[1] https://isc.sans.edu/diary/IPv6+and+isc.sans.org/7948

[2] http://www.comcast6.net

And of course, see our IPv6 Security Essentials class.

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute


Oracle Reports Vulnerability

I mentioned this vulnerability earlier this week in a podcast, but I believe it deserves a bit more attention, in particular because exploits are now public and a Metasploit module appears to be in the works.

Dana Taylor (NI @root) first released details about the vulnerabilities on her blog [1]. The post includes quite a bit of detail about the respective vulnerabilities. Extended support for Oracle 10g ended in July 2013, and a patch is not expected.

If for some reason you are still running Oracle 10g or earlier, please check for possible workarounds or upgrade to 11g.

The vulnerabilities were assigned the following CVE numbers:

CVE-2012-3153 - PARSEQUERY keymap vulnerability

Oracle details (requires login): https://support.oracle.com/rs?type=doc&id=279683.1

CVE-2012-3152 - URLPARAMETER code execution

Please let us know if you have any workarounds to share, or if you have any logs showing exploit attempts.
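If you want to look for exploit attempts in your own web server logs, one crude starting point is to search for the keywords from the two CVE titles above. This JavaScript sketch rests on an assumption: that exploit requests carry "parsequery" or "urlparameter" in the request URL. A match is a lead to investigate, not proof of exploitation, and the function names and log path are placeholders.

```javascript
const fs = require("fs");
const readline = require("readline");

// Keywords from the two CVE titles; assumed (not verified) to show up in the
// request URLs of exploit attempts against Oracle Reports.
const KEYWORDS = /parsequery|urlparameter/i;

// Return the log lines that mention either keyword.
function suspiciousLines(lines) {
  return lines.filter((line) => KEYWORDS.test(line));
}

// Stream a (possibly large) access log and collect matching lines.
async function scanLogFile(path) {
  const rl = readline.createInterface({ input: fs.createReadStream(path), crlfDelay: Infinity });
  const hits = [];
  for await (const line of rl) {
    if (KEYWORDS.test(line)) hits.push(line);
  }
  return hits;
}
```

For example, `scanLogFile("access.log").then((hits) => hits.forEach((h) => console.log(h)));` prints every candidate line from a log named access.log (a placeholder name).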

[1] http://netinfiltration.com

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute


How to Debug DKIM

DKIM is one way to make it easier for other servers to figure out whether an e-mail sent on behalf of your domain is spoofed. Your mail server adds a digital signature to each e-mail that authenticates the source. This isn't as good as signing the entire e-mail, but it is a useful tool to at least validate the domain used as part of the "From" header.

The problem is that DKIM can be tricky to debug. If your mail is rejected, it is useful to be able to verify manually what went wrong. For example, if you have several keys and the wrong one was used, the problem can be particularly hard to track down.

Let's start with the basics: first, make sure the e-mail you send is actually signed. Look for the "DKIM-Signature" header:

```
DKIM-Signature: v=1; a=rsa-sha256; c=relaxed/simple; d=dshield.org;
    s=default; t=1391023518;
    bh=wu4x1KKZCyCgkXxuZDq++7322im11hlsCET+KxQ9+48=;
    h=To:Subject:Date:From;
    b=wVZQsIvZQe0i2YuhFNeUrpfet0wa7cIcwZ8LR9izWuF1E1NDQmpKUImCHO/RlPgYJ
    wruW1IunQWRXtd4MQMuUZNsU1rGFzsYXoC4T6rVjHonQtQgoFSoEfo90KtZTC2riev
```

There are a couple of important pieces to look for:

- d=dshield.org - the domain the signature vouches for

- s=default - the selector, which lets a domain publish and use several different keys.
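When debugging many messages, it helps to pull these fields out programmatically. A JavaScript sketch that splits the header value into its tag=value pairs (the function name is illustrative; stripping all whitespace from values is a simplification that works for the tags discussed here, and the b= value is shortened):

```javascript
// Split a DKIM-Signature header value into its tag=value pairs.
// Whitespace, including folded line breaks, is removed from values; that is
// fine for the tags used here (v, a, c, d, s, t, h, bh, b).
function parseDkimTags(headerValue) {
  const tags = {};
  for (const part of headerValue.split(";")) {
    const eq = part.indexOf("=");
    if (eq === -1) continue; // e.g. an empty segment after a trailing ";"
    const name = part.slice(0, eq).trim();
    tags[name] = part.slice(eq + 1).replace(/\s+/g, "");
  }
  return tags;
}

// The header from above, folded with CRLF + tab (b= value shortened).
const sample =
  "v=1; a=rsa-sha256; c=relaxed/simple; d=dshield.org;\r\n" +
  "\ts=default; t=1391023518;\r\n" +
  "\tbh=wu4x1KKZCyCgkXxuZDq++7322im11hlsCET+KxQ9+48=;\r\n" +
  "\th=To:Subject:Date:From;\r\n" +
  "\tb=wVZQsIvZQe0i2YuhFNeUrpfet0wa7cIcwZ8LR9izWuF1E1NDQmpKUImCHO/RlPgYJ";

const tags = parseDkimTags(sample);
console.log(tags.d, tags.s, tags.h.split(":"));
```

Splitting on the first "=" only matters for bh= and b=, whose base64 values can end in "=" padding.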

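Using these two values, we can retrieve the public key from DNS. The key is published as a TXT record named after the selector, followed by "_domainkey", followed by the domain, so here we look up default._domainkey.dshield.org: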

"v=DKIM1\; k=rsa\; p=MIGfMA0G...AQAB"

At this point we know which key was used to sign the headers, and we have the public key to verify the signature. You probably already spotted the algorithm used: "a=rsa-sha256".
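The same lookup can be scripted. The record name is built from the selector and the domain (default._domainkey.dshield.org here), and the escaped "\;" in dig-style output stands for a plain ";" in the stored text. A Node.js sketch; the function names are illustrative, and `fetchDkimKey()` needs network access:

```javascript
const dns = require("dns").promises;

// DKIM public keys are published as TXT records at <selector>._domainkey.<domain>.
function dkimKeyName(selector, domain) {
  return `${selector}._domainkey.${domain}`;
}

// Split a key record such as "v=DKIM1; k=rsa; p=..." into tags. The "\;"
// escapes seen in zone-file style output are turned back into plain ";".
function parseKeyRecord(txt) {
  const tags = {};
  for (const part of txt.replace(/\\;/g, ";").split(";")) {
    const eq = part.indexOf("=");
    if (eq !== -1) tags[part.slice(0, eq).trim()] = part.slice(eq + 1).replace(/\s+/g, "");
  }
  return tags;
}

// Fetch and parse the key. resolveTxt() returns each record as an array of
// strings (long records are split into chunks), which must be joined first.
async function fetchDkimKey(selector, domain) {
  const records = await dns.resolveTxt(dkimKeyName(selector, domain));
  const keys = records.map((chunks) => chunks.join("")).filter((r) => /(^|;)\s*p=/.test(r));
  return keys.length > 0 ? parseKeyRecord(keys[0]) : null;
}
```

For example, `fetchDkimKey("default", "dshield.org").then(console.log);` prints the k= and p= values. If the p= value differs from the key your mail server signs with, you have found the "wrong key" problem described above.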

DKIM signs only specific headers. In our case, we signed the To, Subject, Date and From headers, as listed in the "h=" field above. (The body is covered separately: the "bh=" field holds a hash of the message body.)

For the sample e-mail above, these headers are:

To: jullrich@euclidian.com

Subject: Testing DKIM

Date: Wed, 29 Jan 2014 19:25:18 +0000 (UTC)

From: jullrich@dshield.org (Johannes Ullrich)
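To check the signature by hand, you have to rebuild exactly what was signed. With c=relaxed/simple, the headers use "relaxed" canonicalization and the body uses "simple" canonicalization (RFC 6376, section 3.4). A JavaScript sketch of both steps for the four headers above; the function names are illustrative, the body passed to `bodyHash()` is whatever body you are testing (the sample message body is not shown here), and a complete verifier would also append the DKIM-Signature header itself with an empty b= value and then check the RSA signature, which is omitted.

```javascript
const crypto = require("crypto");

// Relaxed header canonicalization: lowercase the name, unfold continuation
// lines, collapse whitespace runs to one space, and drop whitespace at the
// end of the value and around the colon.
function relaxedHeader(field) {
  const colon = field.indexOf(":");
  const name = field.slice(0, colon).trim().toLowerCase();
  const value = field
    .slice(colon + 1)
    .replace(/\r\n(?=[ \t])/g, "") // unfold
    .replace(/[ \t]+/g, " ")
    .trim();
  return `${name}:${value}\r\n`;
}

// Simple body canonicalization: remove empty lines at the end of the body and
// end it with exactly one CRLF. Converting bare LF to CRLF first is an
// assumption about how the body was saved.
function simpleBody(body) {
  const crlf = body.replace(/\r?\n/g, "\r\n");
  return crlf.replace(/(\r\n)+$/, "") + "\r\n";
}

// Base64 SHA-256 of the canonical body; compare with the bh= value.
function bodyHash(body) {
  return crypto.createHash("sha256").update(simpleBody(body)).digest("base64");
}

// The headers named in h=, in that order.
const signedHeaders = [
  "To: jullrich@euclidian.com",
  "Subject: Testing DKIM",
  "Date: Wed, 29 Jan 2014 19:25:18 +0000 (UTC)",
  "From: jullrich@dshield.org (Johannes Ullrich)",
];
const canonicalHeaders = signedHeaders.map(relaxedHeader).join("");
console.log(JSON.stringify(canonicalHeaders));
```

A bh= mismatch points to the body being altered in transit (a footer added by a list server, for example); a b= mismatch with a matching bh= points at the headers or the key.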

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute


Log Parsing with Mandiant Highlighter (1)

Reading logs isn’t the most enjoyable part of network and security analysis, and sometimes it’s impossible to get anything useful out of a log without a log parser. In this diary I am going to talk about one of my favorite log analysis tools.

MANDIANT HIGHLIGHTER

“MANDIANT Highlighter is a log file analysis tool. Highlighter provides a graphical component to log analysis that helps the analyst identify patterns. Highlighter also provides a number of features aimed at providing the analyst with mechanisms to weed through irrelevant data and pinpoint relevant data.”[i]

Installation:

1- Download Mandiant Highlighter from https://www.mandiant.com/resources/download/highlighter

2- Launch MandiantHighlighter1.1.3 and click Next.

Highlighter Usage

Now let’s have some examples of using Mandiant Highlighter:

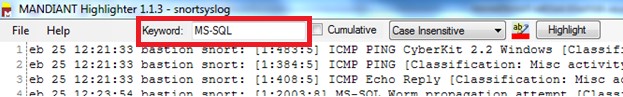

Let’s say that you have a snort log file and you would like to check for all MS-SQL related alerts:

- Go to File menu and select Open file.

2-open snortsyslog

3-Type MS-SQL in the keyword field

4-Click on Highlight ,Now Highlighter will highlights MS-SQL in the snortsyslog

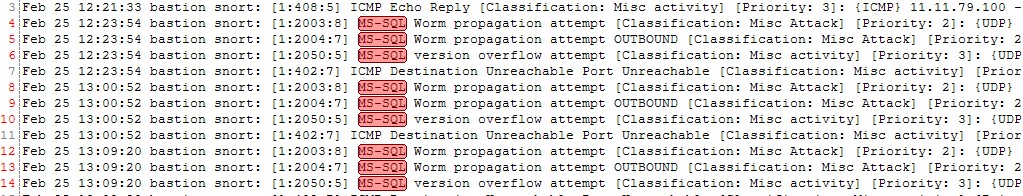

6-If you would like to filter the snortsyslog just to display MS-SQL related alerts:

- Highlight MS-SQL

- Right-click and select “Show Only”

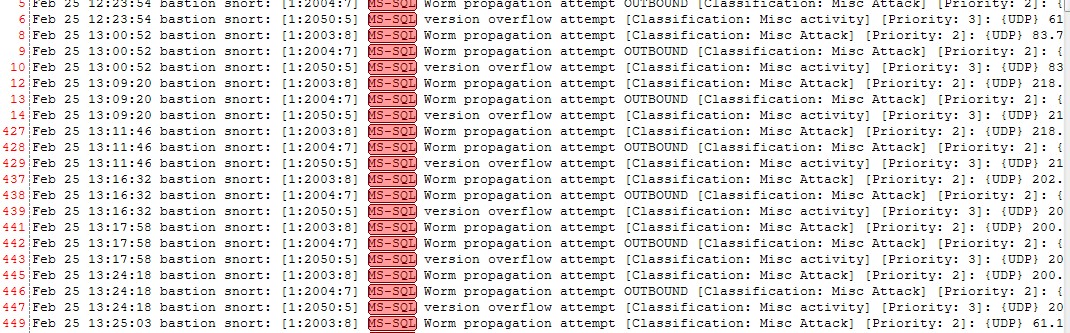

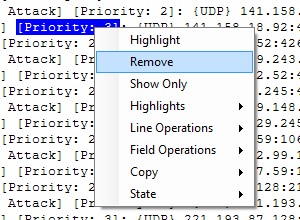

7-Now let say that you are not interested in Priority:3 events

a)right click on Priority: 3

b)Select Remove
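Highlighter is a GUI tool, but the two operations above ("Show Only" on one keyword, then "Remove" on another) amount to a keep-lines-matching filter followed by a drop-lines-matching filter. For comparison only, a JavaScript sketch of the same idea; this is not how Highlighter works internally, and the sample lines are made up in the style of Snort syslog alerts.

```javascript
// Keep lines containing `include` ("Show Only"), then drop lines
// containing `exclude` ("Remove").
function showOnlyThenRemove(lines, include, exclude) {
  return lines.filter((l) => l.includes(include)).filter((l) => !l.includes(exclude));
}

// Made-up alert lines for illustration.
const sample = [
  "Jan 10 10:00:01 ids snort[812]: [1:1000001:1] MS-SQL probe [Classification: Attempted Information Leak] [Priority: 2]: {UDP} 198.51.100.7:1434 -> 192.0.2.10:1434",
  "Jan 10 10:00:05 ids snort[812]: [1:1000002:1] ICMP PING [Classification: Misc activity] [Priority: 3]: {ICMP} 198.51.100.8 -> 192.0.2.10",
  "Jan 10 10:01:12 ids snort[812]: [1:1000003:1] MS-SQL version overflow attempt [Classification: Misc activity] [Priority: 3]: {UDP} 203.0.113.9:1434 -> 192.0.2.10:1434",
];

for (const line of showOnlyThenRemove(sample, "MS-SQL", "Priority: 3")) console.log(line);
```

To run it on a real file, read it with `require("fs").readFileSync("snortsyslog", "utf8").split("\n")` and pass the resulting lines instead of the sample. Highlighter's advantage is the interactive, visual side of the same filtering.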

In the next diary I will discuss some other advanced options in Mandiant Highlighter.

[i] Mandiant Highlighter User Guide.


Looking for Packets for IP address 71.6.165.200

The DShield database this morning shows a tremendous uptick in activity coming from IP address 71.6.165.200 over the past few weeks, so I am reaching out to everyone to see if anybody has packets related to this IP address. WHOIS shows the IP block was newly registered to CariNet, Inc., a San Diego based cloud provider, on January 3, 2014. Since then, there has been a surge in reports to the DShield database of unwanted TCP and UDP packets.

If anybody has information on the IP address 71.6.165.200, or a POC (point of contact) at CariNet, it would greatly help. I will contact the abuse department on Monday with whatever information I can collect today.

As always, thanx for supporting the Internet Storm Center,

tony d0t Carothers –gmail.com

==============================

UPDATE: 27 January 2014

The senior security engineer onsite has contacted the customer, who has agreed to take down the site and work with the ISC to resolve these issues. Great job, everyone! A community effort helps out the community every time!


Findings in Cisco's Annual Security Report

The report highlights the fact that now "[...] the cybercrime network has become so mature, far-reaching, well-funded, and highly effective as a business operation that very little in the cybersecurity world can—or should—be trusted without verification."[1]

I don't think this is really a huge surprise. However, the report identifies three attack trends of particular concern: 99% of all mobile malware in 2013 targeted Android devices, 91% of web exploits targeted Java, and Trojans made up 64% of malware.[2] Taking this into account, if you own an Android device, you need to be vigilant about the content you view or access. That doesn't mean other mobile devices can't be targets.

The attack surface is no longer limited to PCs and servers; it now includes mobile devices of every kind. Their numbers have been growing rapidly, and they need to be part of every enterprise security model. This change in the security landscape makes securing a network even more difficult, because the front door isn't just the Internet gateway your network is connected to. It now includes all the mobile devices accessing your network, either via a wireless AP or attached via USB cable or Bluetooth to a PC or laptop. If you want to read the full report, it can be downloaded here [3] (registration required). I also encourage you to take part in our survey: What is going to trouble you the most in 2014?

[1] http://blogs.cisco.com/security/cisco-2014-annual-security-report-trust-still-has-a-fighting-chance

[2] http://www.cisco.com/web/offers/lp/2014-annual-security-report/preview.html

[3] http://www.cisco.com/web/offers/lp/2014-annual-security-report/index.html

-----------

Guy Bruneau IPSS Inc. gbruneau at isc dot sans dot edu


Phishing via Social Media

Chris Mohan --- Internet Storm Center Handler on Duty


How to send mass e-mail the right way

None of us likes spam, but sometimes there are good reasons to send large amounts of automatically generated e-mail: order confirmations, newsletters and similar services. Sadly, I often see it done wrong, so I would like to propose some rules for sending mass e-mail correctly.

The risks of doing it incorrectly are twofold: your e-mail will get caught in spam filters, or your e-mail will teach users to fall for phishing, endangering your brand.

So here are some of the rules:

- Always use a "From" address within your domain, even if you use a third party to send the e-mail. They can still use your domain if you set them up correctly. If necessary, use a subdomain ("mail.example.com" vs. "example.com").

- Use DKIM and/or SPF to label the e-mail as coming from a source authorized to send e-mail on your behalf. DKIM can be a bit challenging if a third party is involved, but SPF should be doable. Using a subdomain as the From address can make this easier to configure. For extra credit, deploy full DMARC, which also lets you designate addresses that receive reports about delivery issues.

- Use URLs only if you have to, and if you do, don't "obscure" them by making them look like they link to a different location than they actually do. Link to your primary domain (or to a subdomain as a workaround).

- Try to keep the e-mails plain text, but if you have to use HTML markup, make sure it matches the look and feel of your primary site well. You don't want the fake e-mail to look better than your real one.

- Watch for bounces, and process them to remove dead e-mail addresses or to find out quickly about configuration issues or spam blocklisting.
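To make the SPF rule concrete: an SPF policy is a TXT record on the sending domain, and the receiving server checks whether the connecting IP address matches one of its mechanisms. The JavaScript sketch below evaluates only ip4: mechanisms and the final "all"; real SPF evaluation (RFC 7208) also handles include:, a, mx, redirect= and the DNS lookups behind them. The record and addresses are hypothetical documentation values.

```javascript
// Convert dotted-quad IPv4 to an integer.
function ipv4ToInt(ip) {
  const parts = ip.split(".").map(Number);
  if (parts.length !== 4 || parts.some((p) => !Number.isInteger(p) || p < 0 || p > 255)) {
    throw new Error(`bad IPv4 address: ${ip}`);
  }
  return parts.reduce((acc, p) => acc * 256 + p, 0);
}

// True if `ip` is inside `cidr` ("192.0.2.0/24"; a bare address means /32).
function inCidr(ip, cidr) {
  const [base, lenStr] = cidr.split("/");
  const len = lenStr === undefined ? 32 : Number(lenStr);
  const size = 2 ** (32 - len);
  return Math.floor(ipv4ToInt(ip) / size) === Math.floor(ipv4ToInt(base) / size);
}

// Simplified SPF check: only ip4: mechanisms and "all" are evaluated.
function checkSpfIp4(record, ip) {
  const QUALIFIERS = { "+": "pass", "-": "fail", "~": "softfail", "?": "neutral" };
  const terms = record.trim().split(/\s+/);
  if (terms[0].toLowerCase() !== "v=spf1") return "none";
  for (const term of terms.slice(1)) {
    let q = "+";
    let mech = term;
    if (QUALIFIERS[mech[0]]) {
      q = mech[0];
      mech = mech.slice(1);
    }
    if (mech.toLowerCase() === "all") return QUALIFIERS[q];
    if (mech.toLowerCase().startsWith("ip4:") && inCidr(ip, mech.slice(4))) return QUALIFIERS[q];
    // Any other mechanism is skipped in this sketch.
  }
  return "neutral"; // nothing matched and no "all"
}

const record = "v=spf1 ip4:192.0.2.0/24 ip4:198.51.100.25 -all";
console.log(checkSpfIp4(record, "192.0.2.77"), checkSpfIp4(record, "203.0.113.9"));
```

With this record, mail from your own range passes and mail from anywhere else fails. For DMARC, the corresponding policy is a TXT record at _dmarc.<your domain>, for example "v=DMARC1; p=none; rua=mailto:dmarc-reports@example.com", where rua= names the (here hypothetical) address that receives aggregate reports.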

Of course, I would like to see more digitally signed e-mail, but I think nobody really cares about that.

Any other ideas?

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute


Learning from the breaches that happen to others Part 2

Chris Mohan --- Internet Storm Center Handler on Duty


Learning from the breaches that happen to others

Chris Mohan --- Internet Storm Center Handler on Duty


Taking care when publishing Citrix services inside the corporate network or to the Internet

Citrix has some interesting products like XenApp, which allow people to access corporate applications from tablets, Windows terminals, and also Windows servers and PCs. Depending on how you are using them, you might be creating vulnerabilities for your information assets.

- If you are using it inside the corporate network, it will use pass-through authentication with your Windows domain authentication protocol. If you already use Kerberos, you have nothing to worry about: you should not have any (NT)LM hashes circulating through your network.

- If you are using Citrix on the Internet, it is published on an IIS web server. Implementations can use username/password authentication or username/password/one-time password. Unfortunately, many companies still believe that an extra authentication factor is too expensive and difficult to handle, and some fall for the misconception "I will never have my identity stolen".

Let's talk about applications published on Citrix with no extra authentication factor in place, which is the majority of cases. People increasingly use mobile devices, and senior executives in particular want to handle their information as easily as possible. Many of them ask IT to publish the ERP payments module so they can authorize payments from anywhere, whenever they have two minutes to spare.

If the company happens to handle lots and lots of money, attackers might approach an insider willing to earn some extra money. The first thing to do is determine whether the Citrix farm linked to the Citrix Access Gateway where the user authenticates publishes the ERP payment application. How can you do that? You can use the citrix-enum-apps nmap script. The syntax is:

nmap -sU --script=citrix-enum-apps -p 1604 citrix-server-ip

If the attacker gets output like the following, the company could definitely be in big trouble:

```
Starting Nmap 6.40 ( http://nmap.org ) at 2014-01-21 17:38 Hora est. Pacífico, Sudamérica
Nmap scan report for hackme-server (192.168.0.40)
Host is up (0.0080s latency).
rDNS record for 192.168.0.40: hackme-server.vulnerable-implementation.org
PORT     STATE SERVICE
1604/udp open  unknown
| OW ERP8 Payroll
| OW ERP8 Provider payments
| Internet Explorer
| AD Users and Computers
Nmap done: 1 IP address (1 host up) scanned in 15.76 seconds
```
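Defenders can run the same script against their own Citrix servers and review what is exposed. A JavaScript sketch that pulls the published application names out of output like the above and flags those matching a keyword list; the keywords and function names are hypothetical and should reflect whatever is sensitive in your environment.

```javascript
// Extract application names from citrix-enum-apps output: script output lines
// start with "|" (or "|_" for the last one). Lines ending in ":" are treated as
// the script's own heading and skipped.
function publishedApps(nmapOutput) {
  return nmapOutput
    .split(/\r?\n/)
    .filter((l) => l.startsWith("|"))
    .map((l) => l.replace(/^\|_?\s*/, "").trim())
    .filter((name) => name.length > 0 && !name.endsWith(":"));
}

// Hypothetical list of application names that should never be reachable
// with only a username and password.
const SENSITIVE = /payment|payroll|users and computers/i;

function flagSensitive(apps) {
  return apps.filter((a) => SENSITIVE.test(a));
}
```

For the output above, `flagSensitive(publishedApps(output))` would return the payroll, provider payments and AD Users and Computers entries, each a candidate for an extra authentication factor or for not being published at all.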

Bingo! Provider payments is being published. All we need to do is perform a good old man-in-the-middle attack against the IIS server, and we will have a username/password to generate arbitrary payments.

How can you remediate this situation?

- Using username/password authentication alone is definitely a BAD idea. Extra authentication factors need to be in place, especially for users with critical privileges.

- Configure your mobile clients to accept only the specific server certificates, and instruct them to abort any connection that shows a certificate error.

- Ensure that the Citrix Access Gateway server is the only one allowed to contact the Citrix servers via UDP port 1604, and that the Citrix farm is not accessible from the Internet or the corporate network.

Manuel Humberto Santander Peláez

SANS Internet Storm Center - Handler

Twitter:@manuelsantander

Web:http://manuel.santander.name

e-mail: msantand at isc dot sans dot org


You Can Run, but You Can't Hide (SSH and other open services)

In any study of internet traffic, you'll notice that one of the top activities of attackers is port scanning for open SSH servers, usually followed by sustained brute-force attacks. On customer machines I've worked on, anything with tcp/22 open for SSH, SFTP (SSH File Transfer Protocol) or SCP sees so many login attempts that using the system logs to troubleshoot any other problem on the system can become very difficult.

We've written this up many times. One of the first things people often do, thinking it will protect them, is move their SSH service to some other port, typically 2222, 222 or some other "logical" variant. This really just removes the background noise of automated attacks; real attackers will still find your service. We've covered this phenomenon and real options for protecting open services like SSH in the past (google for ssh inurl:isc.sans.edu for a list).

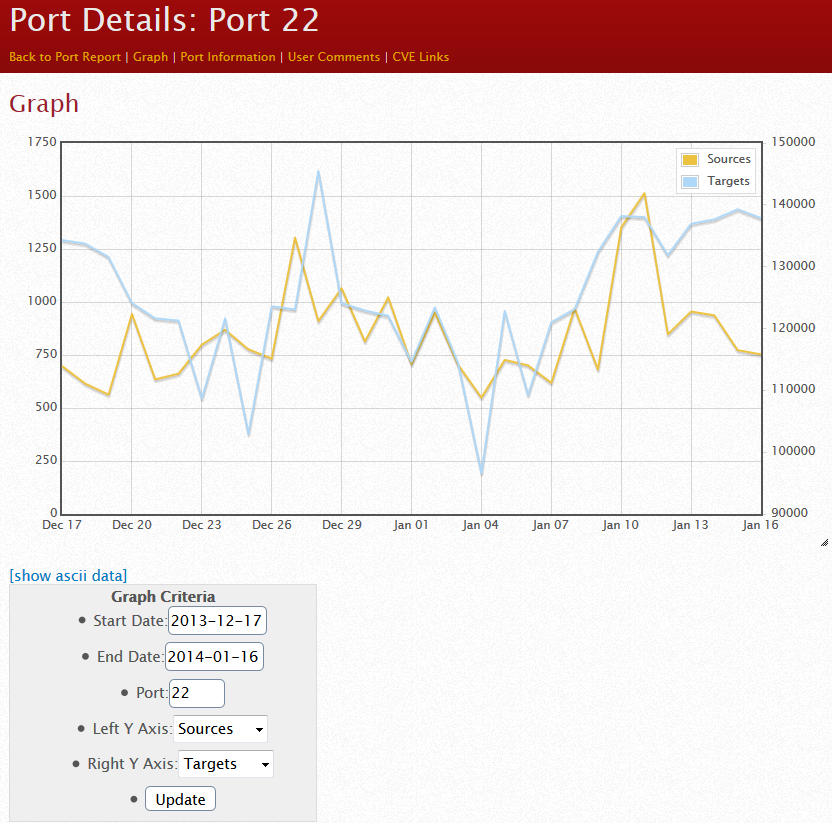

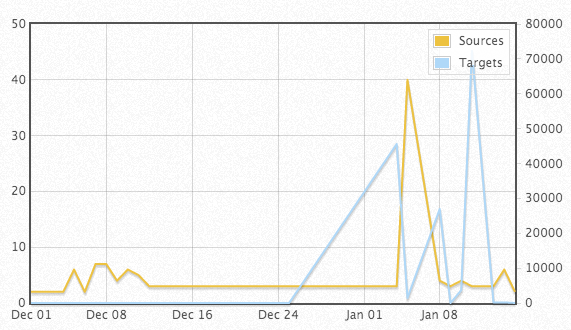

What got me thinking about this was a bit of "data mining" I did the other night in the DShield database, using the reporting interfaces on the ISC site as well as our API. Port 22 traffic, of course, remains near the top of our list of attacked ports:

https://isc.sans.edu/port.html?startdate=2013-01-01&enddate=2014-01-16&port=22&yname=sources&y2name=targets

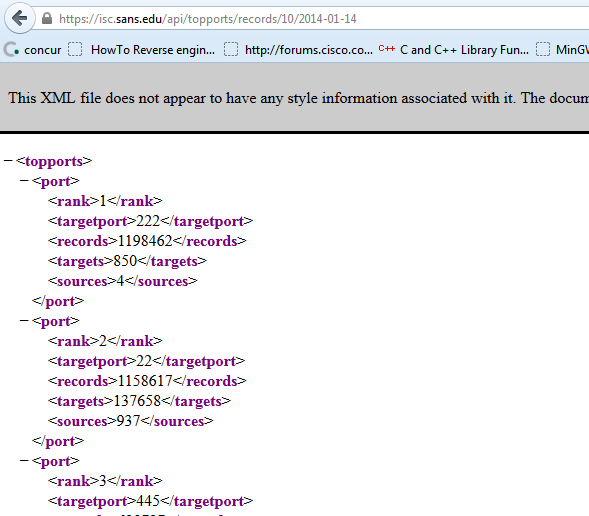

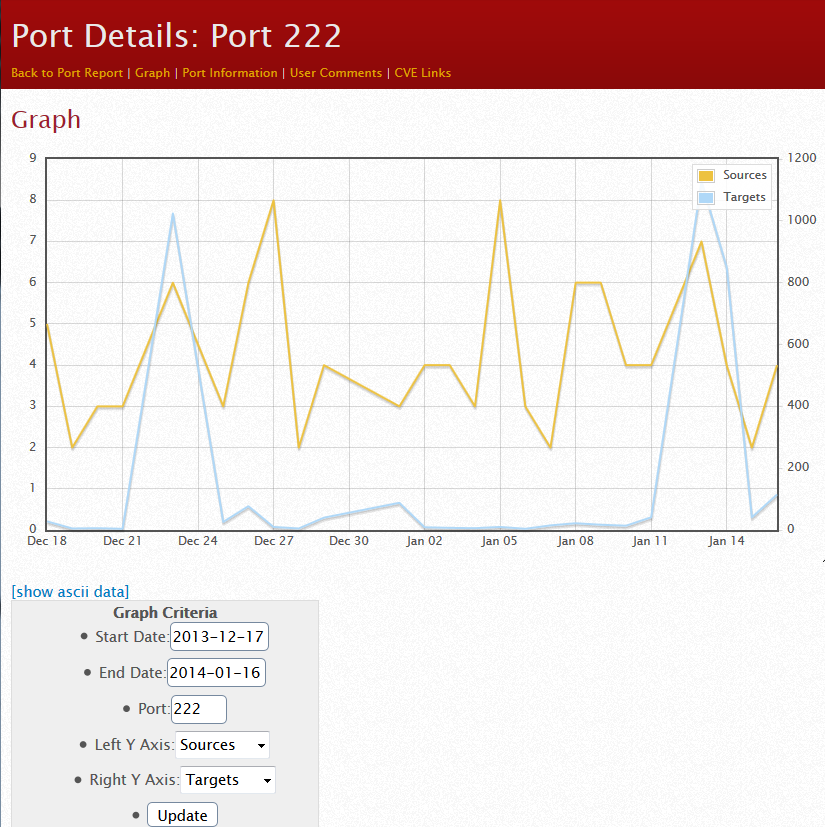

However, this past week we saw an unusual spike in TCP port 222:

https://isc.sans.edu/api/topports/records/10/2014-01-13

and

https://isc.sans.edu/api/topports/records/10/2014-01-14

On the 14th, port 222 actually topped our list. This event only lasted 2 days, and was primarily sourced by only two IP addresses. Note the other spike in December.

https://isc.sans.edu/port.html?port=222

This looked like a full internet scan for port 222, sourced from these two addresses (I won't call them out here). Looking closer at the IPs, they didn't look like anything special; in fact, one was in a DSL range. Two days for a full scan is about right, given the fast scanning tools we have these days and the bandwidth a home user usually has.

The moral of the story? No matter where you move your listening TCP ports, someone is scanning that port looking for your open service. Using a "logical" approach to picking a new port number (for instance, 222 for scp or ssh, 2323 for telnet and so on) just makes the attacker's job easier. And that's just accounting for automated tools doing indiscriminate scanning. If your organization is being targeted, a full port scan of your entire IP range takes only a few minutes to set up and will complete within an hour. Even on a "low and slow" timer (to avoid your IPS or to keep the log entry count down), it'll likely be done within a day or two. Moving your open service to a non-standard port is no protection at all if you are being targeted.
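A quick back-of-the-envelope check of those timings, assuming a hypothetical target range of one /24 (256 addresses), all 65,535 TCP ports, one probe per port, and a few illustrative probe rates (real scanners and networks vary widely):

```javascript
// Hours needed to send one probe to every port on every host at a fixed rate.
function scanHours(hosts, ports, probesPerSecond) {
  return (hosts * ports) / probesPerSecond / 3600;
}

const hosts = 256; // one /24
const ports = 65535; // every TCP port
for (const pps of [5000, 1000, 100]) {
  console.log(`${pps} probes/s: ${scanHours(hosts, ports, pps).toFixed(1)} hours`);
}
```

At roughly 5,000 probes per second, the full sweep of a /24 finishes in under an hour; throttled to 100 probes per second, it takes a little under two days, in line with the "day or two" above.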

If you don't absolutely need a service on the public internet, close the port. If you need to offer the service, put it behind a VPN so that only authorized folks can get to it. In almost all cases, you don't need that SSH port (whatever the port number is) open to the entire internet. Restricting it to a known list of IP addresses or, better yet, putting it behind a VPN is by far the best way to go. If you MUST have "common target" services like SSH open to the internet, use certificates or keys instead of, or in addition to, simple passwords, and consider rate-limiting tools such as fail2ban, so that once the brute-force attacks start, you have a way to "shun" the attacker (though neither of these measures will protect you from a basic port scan).
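To illustrate what a rate-limiting tool like fail2ban does conceptually (similar in spirit to its maxretry and findtime settings), here is a JavaScript sketch that counts failed SSH logins per source address inside a sliding time window and reports addresses that reach a threshold. It is not fail2ban's implementation; the log line format is assumed from typical OpenSSH "Failed password ... from <ip> port <n>" messages, the threshold and window are arbitrary examples, and actually blocking an address is left out.

```javascript
// Matches OpenSSH failure lines such as
// "Failed password for invalid user admin from 203.0.113.5 port 4242 ssh2".
const FAILED = /Failed password for (?:invalid user )?\S+ from (\S+) port \d+/;

// events: [{ time: seconds, line: string }] in chronological order.
// Returns the addresses with at least maxFailures failures within windowSeconds.
function findOffenders(events, maxFailures, windowSeconds) {
  const recent = new Map(); // ip -> timestamps of failures still inside the window
  const offenders = new Set();
  for (const { time, line } of events) {
    const m = FAILED.exec(line);
    if (!m) continue;
    const ip = m[1];
    const times = (recent.get(ip) || []).filter((t) => time - t < windowSeconds);
    times.push(time);
    recent.set(ip, times);
    if (times.length >= maxFailures) offenders.add(ip);
  }
  return [...offenders];
}
```

The window matters: a "low and slow" attacker who spaces attempts further apart than the window never trips the threshold, which is one more reason to prefer a VPN or an address allowlist.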

===============

Rob VandenBrink

Metafore


Anatomy of a Malware distribution campaign

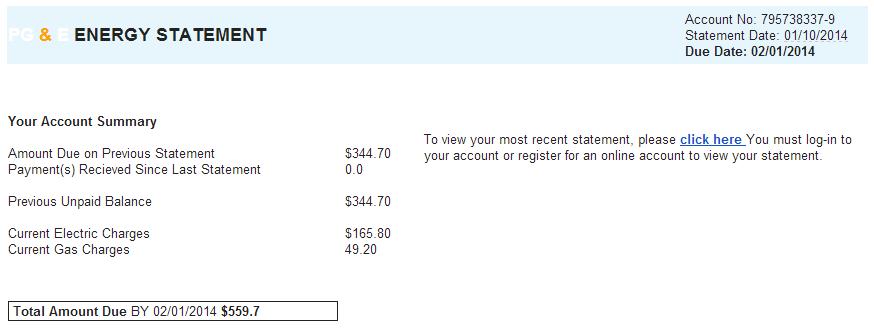

Starting about 10 days ago, a Spam campaign began targeting customers of Pacific Gas and Electric (PG&E), a large U.S. energy provider. PG&E has been aware of this campaign for about a week and has informed its customers.

This is yet another Spam run targeting the customers of U.S. energy companies, a pattern that has been going on for several months. I was able to get two samples of this run to dissect. This is not a campaign targeted directly at known PG&E customers. One of the emails came to an account that I only use as a garbage collector: I have not used the account in about ten years, and nobody would legitimately send me email there. The second sample came from an ISC handler in Australia. Neither of us is anywhere near PG&E's service area.

It wasn't long ago that you could identify Spam by the quality of the English, but these emails look quite professional and the English is good. The only real flaw in the email is the formatting of some of the currency figures.

The header revealed that it was sent from user nf@www1.nsalt.net using IP 212.2.230.181, most likely a compromised webmail account. Both the from and the reply-to fields are set to do_not_reply@nf.kg, an email address that bounces. The 212.2.230.181 IP, the nf.kg domain and the nsalt.net domain all map to City Telecom Broadband in Kyrgyzstan (country code KG).

These sorts of runs usually have one of two purposes: credential theft or malware distribution. In this case, the goal of this particular campaign seems to be malware distribution. The "click here" links in the two samples point to different places:

- hxxp://s-dream1.com/message/e2y+KAkbElUyJZk38F2gvCp7boiEKa2PSdYRj+YOvLI=/pge

- hxxp://paskamp.nl/message/hbu8N3ny7oAVfvBZrZWLSrkYv2kTbwArk3+Tspbd2Cg=/pge

Both of these links are now down, but when they were alive they both served up PGE_FullStatement_San_Francisco_94118.zip which contained a Windows executable.

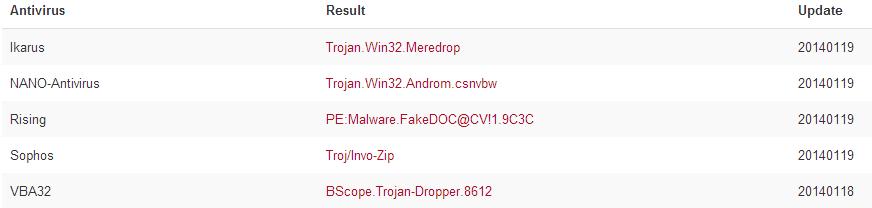

The antivirus on my test machines was not triggered by this file, and VirusTotal shows a 5/48 detection rate, indicating this is most likely a Trojan dropper.

I get 500 or so Spam and phishing messages every day. Fortunately, the majority of them are caught by the excellent filters I have in place. This email passed those filters, and if I were a PG&E customer it would probably look legitimate enough to at least make me look at it twice before disregarding it as Spam. But how many less tech-savvy PG&E customers got caught by this? It is clear that modern antivirus is dying as a front-line defense against such attacks. Is there a technology in the development pipeline today that is going to step up and help protect the average user?

-- Rick Wanner - rwanner at isc dot sans dot org - http://namedeplume.blogspot.com/ - Twitter:namedeplume (Protected)


Massive RFI scans likely a free web app vuln scanner rather than bots

On 9 JAN, Bojan discussed reports of massive RFI scans. One artifact consistent across almost all the reports we've received lately is that the attackers attempt to include http://www.google.com/humans.txt. I investigated a hunch, and it turns out that this incredibly annoying script-kiddie behavior seems to come not from bots but from the unfortunate misuse of the beta release of Vega, the free and open source web application scanner from Subgraph.

One of the numerous Vega modules is Remote File Include Checks found in C:\Program Files (x86)\Vega\scripts\scanner\modules\injection\remote-file-include.js.

Of interest in remote-file-include.js:

```javascript
// Excerpt from remote-file-include.js; the handler function passed to
// submitMultipleAlteredRequests() is not shown here.
var module = {
  name: "Remote File Include Checks",
  category: "Injection Modules"
};

function initialize(ctx) {
  var ps = ctx.getPathState();
  // Only paths that take parameters are tested
  if (ps.isParametric()) {
    var injectables = createInjectables(ctx);
    ctx.submitMultipleAlteredRequests(handler, injectables);
  }
}

function createInjectables(ctx) {
  var ps = ctx.getPathState();
  // The humans.txt URL plus variants with altered or nested "http" schemes
  var injectables = ["http://www.google.com/humans.txt",
                     "htTp://www.google.com/humans.txt",
                     "hthttpttp://www.google.com/humans.txt",
                     "hthttp://tp://www.google.com/humans.txt",
                     "www.google.com/humans.txt"];
  var ret = [];
  for (var i = 0; i < injectables.length; i++)
    ret.push(injectables[i]);
  return ret;
}
```

Great, now the kiddies don't even need to figure out how to make RFI Scanner Bot or the VopCrew Multi Scanner work, it's been dumbed down all the way for them!

What steps can you take to prevent and detect possible successful hits?

- Remember that the likes of Joomla and WordPress, amongst others, are favorite targets.

  - If you're using add-on components/modules, you're still at risk even if you keep these content management systems (CMS) or frameworks (CMF) fully up to date. As always, you're only as strong as your weakest link.

  - Component/module developers are not always as diligent as the platform developers themselves; believe me when I say the Joomla team cares a great deal about the security of their offering.

  - Audit the add-on components/modules you have installed, check for open vulnerabilities via https://secunia.com/advisories/search, and ensure you're running the most current version.

- Check your web site directories for any files written during or soon after scans.

  - If the remote file inclusion test succeeds, the attackers will typically turn right around and drop one or more files.

  - Such files could be TXT, PHP, or JS files, but attackers also like image file extensions and will often drop them in the images directory if the vulnerability permits.

  - Yours truly has been dinged by this issue; you have to remember to keep ALL related code current or kiddies will have their way with you.

- Check your logs for 200 (OK) responses to requests where the humans.txt file was attempted, particularly where the GET string includes a path specific to your CMS/CMF. Hopefully you see only 404 (Not Found) responses; if you do see a 200, it warrants further investigation.

  - 404 example entry: 192.64.114.73 - - [05/Jan/2014:18:16:13 +0800] "GET /A-Blog/navigation/search.php?navigation_end=http://www.google.com/humans.txt? HTTP/1.0" 404 927 "-" "-"

  - 200 example entry: 192.64.114.73 - - [05/Jan/2014:18:29:29 +0800] "GET /configuration.php?absolute_path=http://www.google.com/humans.txt? HTTP/1.0" 200 - "-" "-"
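The log check above is easy to script. A JavaScript sketch that parses lines in the common/combined log format used by the two example entries and reports humans.txt requests that returned 200; the regular expression is an assumption that fits those entries, and other log formats will need a different pattern.

```javascript
// Common/combined log format: ip ident user [time] "request" status size ...
const CLF = /^(\S+) \S+ \S+ \[([^\]]+)\] "([^"]*)" (\d{3}) (\S+)/;

// All requests that tried to include humans.txt, with their status codes.
function humansTxtRequests(lines) {
  const hits = [];
  for (const line of lines) {
    const m = CLF.exec(line);
    if (!m) continue;
    const [, ip, time, request, status] = m;
    if (request.includes("humans.txt")) hits.push({ ip, time, request, status: Number(status) });
  }
  return hits;
}

// 404s are expected noise; a 200 deserves a closer look.
function successfulHits(lines) {
  return humansTxtRequests(lines).filter((h) => h.status === 200);
}
```

Run against the two example entries, `successfulHits()` returns only the /configuration.php request, which is the one worth investigating.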

Now that we know this is less likely bot behavior and more likely annoying miscreants, take the opportunity to audit your Internet-facing presence, particularly if you use a popular CMS/CMF.

Cheers and feel free to comment or send additional log samples.


Port 4028 - Interesting Activity

Take a look at port 4028. Thanks to Bill for sharing an analysis that concluded a piece of malware was an Aidra botnet client. His analysis calls for a deeper look at port 4028. I also found a published write-up from Symantec [1].

After looking at our port 4028 data [2], there is reason to watch for it. Please chime in if you are seeing any traffic on port 4028.

DShield data for port 4028 (portascii.html), 2013-12-01 to 2014-01-15, created Thu, 16 Jan 2014 01:34:07 +0000. Dates are in GMT (YYYY-MM-DD):

| date | records | targets | sources | tcpratio |
|---|---|---|---|---|
| 2013-12-01 | 19 | 2 | 2 | 100 |
| 2013-12-04 | 18 | 2 | 2 | 100 |
| 2013-12-05 | 28 | 4 | 6 | 100 |
| 2013-12-06 | 8 | 2 | 2 | 100 |
| 2013-12-07 | 13 | 5 | 7 | 85 |
| 2013-12-08 | 9 | 5 | 7 | 67 |
| 2013-12-09 | 13 | 3 | 4 | 100 |
| 2013-12-10 | 23 | 5 | 6 | 100 |
| 2013-12-11 | 5 | 3 | 5 | 80 |
| 2013-12-12 | 19 | 3 | 3 | 100 |
| 2013-12-23 | 4 | 2 | 3 | 100 |
| 2013-12-25 | 6 | 2 | 3 | 100 |
| 2014-01-04 | 49240 | 45589 | 3 | 100 |
| 2014-01-05 | 1559 | 1440 | 40 | 100 |
| 2014-01-08 | 28910 | 26975 | 4 | 100 |
| 2014-01-09 | 6 | 6 | 3 | 83 |
| 2014-01-10 | 4531 | 3675 | 4 | 100 |
| 2014-01-11 | 76271 | 72307 | 3 | 100 |
| 2014-01-13 | 239 | 173 | 3 | 100 |
| 2014-01-14 | 195 | 164 | 6 | 99 |
| 2014-01-15 | 10 | 5 | 2 | 90 |

(c) SANS Inst. / DShield. Some rights reserved. Creative Commons ShareAlike License 2.5 (http://creativecommons.org/licenses/by-nc-sa/2.5/)
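One way to read this data: on the spike days, a handful of sources (3 or 4) account for tens of thousands of targets, which looks like a few hosts sweeping large address ranges rather than many infected hosts. A JavaScript sketch that parses the portascii export (assumed here to be plain text with "#" comment lines and whitespace-separated columns date, records, targets, sources, tcpratio) and lists days with many targets per source; the function names and the threshold are arbitrary examples.

```javascript
// Parse the plain-text export into row objects, skipping comments and the
// column-name line.
function parsePortAscii(text) {
  return text
    .split(/\r?\n/)
    .map((l) => l.trim())
    .filter((l) => l && !l.startsWith("#"))
    .map((l) => l.split(/\s+/))
    .filter((cols) => /^\d{4}-\d{2}-\d{2}$/.test(cols[0]))
    .map(([date, records, targets, sources, tcpratio]) => ({
      date,
      records: Number(records),
      targets: Number(targets),
      sources: Number(sources),
      tcpratio: Number(tcpratio),
    }));
}

// Days where each source hit at least `minTargetsPerSource` targets on average.
function spikeDays(rows, minTargetsPerSource) {
  return rows
    .filter((r) => r.sources > 0 && r.targets / r.sources >= minTargetsPerSource)
    .map((r) => ({ date: r.date, targetsPerSource: Math.round(r.targets / r.sources) }));
}
```

With a threshold of 500, the full data set above flags 2014-01-04, 2014-01-08, 2014-01-10 and 2014-01-11, while 2014-01-05 (40 sources, 1,440 targets) does not stand out.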

[1] http://www.symantec.com/security_response/writeup.jsp?docid=2013-121118-5758-99

[2] https://isc.sans.edu/port.html?&startdate=2013-12-17&enddate=2014-01-16&port=4028&yname=sources&y2name=targets


Oracle Critical Patch Update January 2014

Today we also got Oracle's quarterly "Critical Patch Update". As announced, we got a gross (144) of patches from Oracle. But remember that these patches affect 47 different products (if I counted right).

Overall, the product we are most worried about is Java. This CPU fixes 34 security vulnerabilities in Java SE. So again: patch or disable (fast).

http://www.oracle.com/technetwork/topics/security/cpujan2014-1972949.html

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute


Adobe Patch Tuesday January 2014

Adobe released two bulletins today:

1 - Reader/Acrobat

This bulletin fixes three vulnerabilities. Adobe rates this one "Priority 1" meaning that these vulnerabilities are already exploited in targeted attacks and administrators should patch ASAP.

After the patch is applied, you should be running Acrobat/Reader 11.0.06 or 10.1.9.
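To check an inventory against these minimums, version strings need to be compared numerically, so that "11.0.06" equals 11.0.6 and "10.1.10" sorts after "10.1.9". A JavaScript sketch; the minimums come from the text above, the function names are illustrative, and release lines not mentioned here are reported as unknown rather than guessed.

```javascript
// Minimum patched Acrobat/Reader version per major release line (from above).
const MINIMUM = { 11: "11.0.06", 10: "10.1.9" };

// Compare dotted version strings numerically: -1, 0 or 1.
function compareVersions(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const d = (pa[i] || 0) - (pb[i] || 0);
    if (d !== 0) return Math.sign(d);
  }
  return 0;
}

// true = patched, false = below the minimum, null = release line not covered here.
function isPatched(version) {
  const min = MINIMUM[Number(version.split(".")[0])];
  if (min === undefined) return null;
  return compareVersions(version, min) >= 0;
}
```

A plain string comparison would get "10.1.10" versus "10.1.9" wrong, which is why each component is converted to a number.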

2 - Flash Player and Air

The Flash Player patch fixes two vulnerabilities. The Flash Player problem is rated "Priority 1" for Windows and OS X. The AIR vulnerability is rated "3" for all operating systems. For Linux, either patch is rated "3".

Patching Flash is a bit more complex because it is bundled with some browsers, in which case you will need to update the browser. For example, Internet Explorer 11 and Chrome include Flash.

http://helpx.adobe.com/security/products/flash-player/apsb14-01.html

http://helpx.adobe.com/security/products/flash-player/apsb14-02.html

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute


Microsoft Patch Tuesday January 2014

Overview of the January 2014 Microsoft patches and their status.

| # | Affected | Contra Indications - KB | Known Exploits | Microsoft rating(**) | ISC rating(*): clients | ISC rating(*): servers |
|---|---|---|---|---|---|---|
| MS14-001: Remote Code Execution Vulnerability in Microsoft Word and Office Web Apps (Replaces MS13-072, MS13-084, MS13-086, MS13-100) | Word and SharePoint / Office Web Apps components related to Word docs. CVE-2014-0258, CVE-2014-0259, CVE-2014-0260 | KB 2916605 | No. | Severity: Important, Exploitability: 1 | Critical | Critical |
| MS14-002: Privilege Escalation Vulnerability in Windows Kernel (Replaces MS10-099) | NDPROXY driver. CVE-2013-5065 | KB 2914368 | Publicly disclosed and used in targeted attacks. | Severity: Important, Exploitability: 1 | Important | Important |
| MS14-003: Elevation of Privilege Vulnerability in Windows Kernel Mode Drivers (Replaces MS13-101) | win32k.sys kernel mode driver. CVE-2014-0262 | KB 2913602 | No. | Severity: Important, Exploitability: 1 | Important | Important |
| MS14-004: Denial of Service Vulnerability in Microsoft Dynamics AX | Microsoft Dynamics AX. CVE-2014-0261 | KB 2880826 | No. | Severity: Important, Exploitability: 1 | N/A | Important |

We appreciate updates

US based customers can call Microsoft for free patch related support on 1-866-PCSAFETY

(*) ISC rating:

- We use 4 levels:

  - PATCH NOW: Typically used where we see immediate danger of exploitation. Typical environments will want to deploy these patches ASAP. Workarounds are typically not accepted by users or are not possible. This rating is often used when typical deployments make it vulnerable and exploits are being used or easy to obtain or make.

  - Critical: Anything that needs little to become "interesting" for the dark side. Best approach is to test and deploy ASAP. Workarounds can give more time to test.

  - Important: Things where more testing and other measures can help.

  - Less Urgent: Typically we expect the impact if left unpatched to be not that big a deal in the short term. Do not forget them however.

- The difference between the client and server rating is based on how you use the affected machine. We take into account the typical client and server deployment in the usage of the machine and the common measures people typically have in place already. Measures we presume are simple best practices for servers such as not using outlook, MSIE, word etc. to do traditional office or leisure work.

- The rating is not a risk analysis as such. It is a rating of the importance of the vulnerability and the perceived or even predicted threat for affected systems. The rating does not account for how many affected systems there are. It is for an affected system in a typical worst-case role.

- Only the organization itself is in a position to do a full risk analysis involving the presence (or lack of) affected systems, the actually implemented measures, the impact on their operation and the value of the assets involved.

- All patches released by a vendor are important enough to have a close look if you use the affected systems. There is little incentive for vendors to publicize patches that do not have some form of risk to them.

(**): Because Microsoft assigns too many exploitability ratings to some of the patches, we show only the worst one.

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter


Spamming and scanning botnets - is there something I can do to block them from my site?

Chris Mohan --- Internet Storm Center Handler on Duty


Got an IPv6 Firewall?

Just like the call "Winter is Coming" in Game of Thrones, we keep hearing that IPv6 is coming to our networks, spreading doom and gloom to our most prized assets. But just like the clothing worn by some of the actors of the TV show isn't exactly suited for winter, the network security infrastructure currently deployed wouldn't give you a hint that IPv6 is around the corner.

On the other hand, here are some recent numbers:

- Over 25% of Comcast customers are "actively provisioned with native dual stack broadband" (see comcast6.net)

- 40% of the Verizon Wireless network is using IPv6 as of December 2013 (http://www.worldipv6launch.org/measurements/)

- Between July and December last year, Akamai saw IPv6 traffic go up by about a factor of 5 (http://www.akamai.com/ipv6)

When I made our new "Quickscan" router scanning tool available last week, I left it IPv6 enabled. So it is no surprise that I am getting e-mails like the following:

The results were "interesting"

...

A few weeks ago I had installed an IPv6 capable modem and updated my router config to enable IPv6. The results were glorious in that IPv6 ran like a charm.

The sober facts arose when I ran the ISC router scan - it used my IPv6 address, which hooked directly to my desktop (behind my firewall) and pulled up my generally unused native Apache service.

I went over my router config with a fine-tooth comb and realized that my router has no support for IPv6 filtering.
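The reader's desktop was reachable because native IPv6 usually gives each host its own globally routable address, with no NAT in front of it. As a rough first check, here is a minimal Python sketch that takes a list of addresses you have collected from your hosts (the sample addresses and the `exposed_ipv6` name are illustrative assumptions) and flags the IPv6 ones that are global, and therefore reachable from the Internet unless a router or host firewall filters them:

```python
import ipaddress

def exposed_ipv6(addresses):
    """Return the globally routable IPv6 addresses from `addresses`.

    Loopback (::1), link-local (fe80::/10), unique local (fc00::/7) and
    documentation (2001:db8::/32) addresses are not global. IPv4 entries
    are skipped; this check is only about IPv6.
    """
    exposed = []
    for text in addresses:
        addr = ipaddress.ip_address(text)
        if addr.version == 6 and addr.is_global:
            exposed.append(str(addr))
    return exposed

# Hypothetical addresses, e.g. copied from `ip -6 addr` or `ipconfig` output.
print(exposed_ipv6(["192.168.1.10", "fe80::1", "fd00::10", "2600::1"]))
```

Any address flagged this way is worth scanning from outside your network, as the reader did with the ISC router scan, to see which services are actually exposed.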

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

IPv6 Security Training ( https://www.sans.org/sec546 )

Twitter


Notification Glitch - Multiple New Diary Notifications

We have been notified that some of you have received repeated notifications for a recently published diary. Notifications have been turned off while we investigate the issue. We apologize for the inconvenience.

-----------

Guy Bruneau IPSS Inc. gbruneau at isc dot sans dot edu


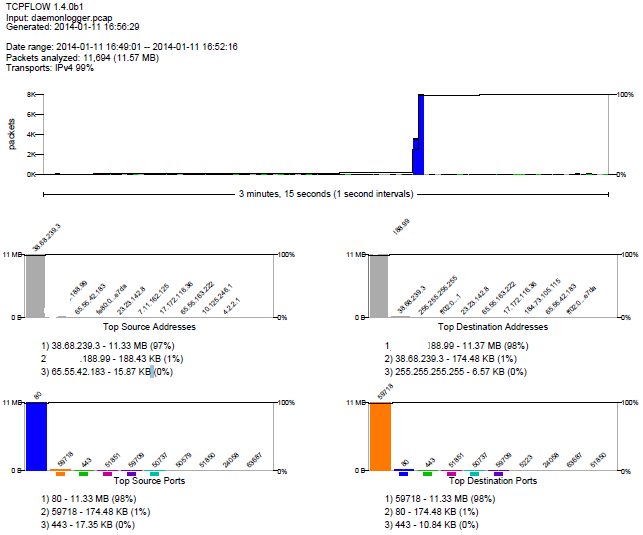

tcpflow 1.4.4 and some of its most Interesting Features

The latest version can, of course, reconstruct TCP flows, but it also has some interesting features, such as carving files out of web traffic (zip, gif, jpg, css, etc.) and reconstructing web pages. Another nice feature is that it produces a summary PDF report of the pcap file it processed.

When file reconstruction is enabled, the files carved from web traffic are named in a format that differentiates them from the regular reconstructed TCP flow files: their names end with HTTPBODY-001.html, HTTPBODY-001.gif, HTTPBODY-001.css or HTTPBODY-001.zip, to name a few.

A precompiled 32- and 64-bit version 1.4.0b1 is available for download here and has the same functionality as the Unix version, which can be downloaded here. This basic setup replays a pcap file and enables all of tcpflow's post-processing features:

tcpflow -a -o tcpflow -r daemonlogger.pcap

-a: do ALL post-processing

-r file: read packets from tcpdump pcap file (may be repeated)

-o outdir : specify output directory (default '.')
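To triage the carved files afterwards, a small Python sketch can group the output directory by file type. It relies only on the HTTPBODY-NNN.<ext> suffix described above; the rest of the file name (the flow identifier) is assumed to vary, and `carved_files_by_type` is a hypothetical helper, not part of tcpflow:

```python
import re
from collections import defaultdict
from pathlib import Path

# Carved web objects end in e.g. "HTTPBODY-001.gif"; the flow-name prefix is ignored.
CARVED = re.compile(r"HTTPBODY-(\d+)\.([A-Za-z0-9]+)$")

def carved_files_by_type(outdir):
    """Map lower-cased file extension -> list of carved file names in `outdir`."""
    groups = defaultdict(list)
    for path in sorted(Path(outdir).iterdir()):
        match = CARVED.search(path.name)
        if path.is_file() and match:
            groups[match.group(2).lower()].append(path.name)
    return dict(groups)
```

For the command above, carved_files_by_type("tcpflow") would, for example, list every carved zip or gif file, which is a quick way to decide which objects deserve a closer look.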

[1] http://www.circlemud.org/jelson/software/tcpflow/

[2] https://github.com/simsong/tcpflow

[3] http://www.digitalcorpora.org/downloads/tcpflow/

-----------

Guy Bruneau IPSS Inc. gbruneau at isc dot sans dot edu


Cisco Small Business Devices backdoor fix

Cisco has released updates to fix the Cisco WAP4410N Wireless-N Access Point, the Cisco WRVS4400N Wireless-N Gigabit Security Router, and the Cisco RVS4000 4-port Gigabit Security Router.

The vulnerability allows an attacker to gain root-level access by exploiting a service listening on port 32764/tcp.

Here are the details from the Cisco website:

http://tools.cisco.com/security/center/content/CiscoSecurityAdvisory/cisco-sa-20140110-sbd


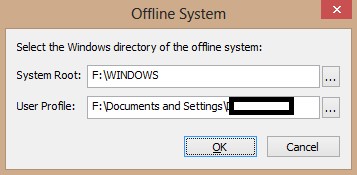

Windows Autorun-3

In previous diaries I talked about some of the most common startup locations in a Windows environment.

In this diary I will talk about some of the methods to enumerate these values from the registry.

1-Autoruns

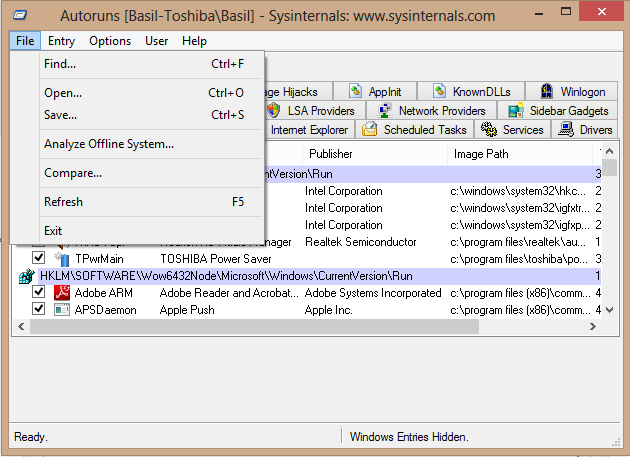

Sysinternals Autoruns is the best tool available to enumerate the startup locations; it can locate almost every startup location in Windows. If you are a big fan of the command line or need something scriptable, Autorunsc is the command-line version of Autoruns. Autoruns can detect the startup locations for the current user and any other user of the same system.

In addition, one of the most powerful features of Autoruns is the ability to analyze offline systems, which is very useful if you have a binary image of a compromised system.

Here is how to use it with an offline system:

1-Mount the image

2-File->Analyze Offline System...

3-Provide the System Root and User Profile paths

4-Click OK

2-Registry Editors/Viewers

In the forensics world we cannot depend on one tool only; in many cases we have to double-check the results of one tool using a different tool.

In addition to the Windows built-in tools (RegEdit, the reg command and PowerShell Get-ChildItem/Get-ItemProperty) there are some great tools to analyze the registry, such as AccessData FTK Registry Viewer, Harlan Carvey's RegRipper and TZWorks Yet Another Registry Utility (yaru).

One big advantage of yaru is the ability to recover deleted registry keys, which is very useful when someone is trying to hide their tracks.

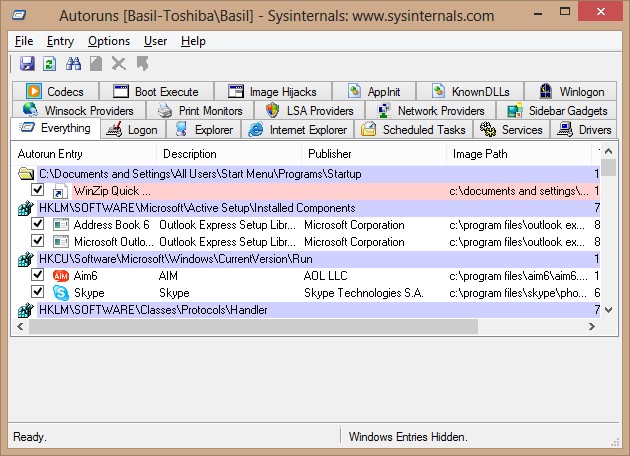

3-WMIC

Windows Management Instrumentation Command-line (WMIC) has its own way to retrieve the startup locations:

wmic startup list full
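WMIC prints each startup entry as a block of Name=Value lines separated by blank lines. If you save the output to a file (for example wmic startup list full > startup.txt, which may be written as UTF-16), a short Python sketch like the one below turns it into records you can compare against Autoruns or the registry. The parser name and the sample entry are illustrative; the property names come from the sample, not from a complete list of WMIC fields:

```python
def parse_wmic_list(text):
    """Parse WMIC list-format output into a list of {property: value} dicts."""
    records, current = [], {}
    # WMIC output can end lines with "\r\r\n", so drop every "\r" before splitting.
    for raw in text.replace("\r", "").split("\n"):
        line = raw.strip()
        if not line:
            if current:  # a blank line ends the current record
                records.append(current)
                current = {}
            continue
        name, sep, value = line.partition("=")  # split on the first "=" only
        if sep:
            current[name] = value
    if current:
        records.append(current)
    return records

# Hypothetical entry in the shape WMIC prints.
SAMPLE = """
Caption=Windows Update
Command=C:\\Users\\Public\\svch0st.exe /update=1
Location=HKLM\\SOFTWARE\\Microsoft\\Windows\\CurrentVersion\\Run
User=Public
"""

for entry in parse_wmic_list(SAMPLE):
    print(entry["Location"], "->", entry["Command"])
```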


Massive PHP RFI scans

Are you seeing same/similar requests on your web site too? Or maybe you managed to catch the bot on a compromised machine or a honeypot? Let us know!


Is XXE the new SQLi?

- We can probably DoS the application by reading from a file such as /dev/random or /dev/zero.

- We can port scan internal IP addresses, or at least try to find internal web servers.
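Both attacks depend on the parser honouring a DOCTYPE that declares an external entity. One common defence is to refuse DTDs altogether. A minimal Python sketch of that idea using the standard library's expat bindings (the function and exception names are hypothetical; libraries such as defusedxml package the same approach more thoroughly):

```python
import xml.parsers.expat

class DTDForbidden(ValueError):
    """Raised when an XML document contains a DOCTYPE declaration."""

def parse_without_dtd(data: bytes):
    """Parse `data`, refusing any DOCTYPE (where XXE entities are declared).

    Returns the element names seen, standing in for real processing.
    """
    parser = xml.parsers.expat.ParserCreate()
    elements = []

    def forbid_doctype(name, system_id, public_id, has_internal_subset):
        raise DTDForbidden(f"DOCTYPE {name!r} is not allowed")

    def start_element(name, attrs):
        elements.append(name)

    parser.StartDoctypeDeclHandler = forbid_doctype
    parser.StartElementHandler = start_element
    parser.Parse(data, True)  # an exception raised in a handler propagates here
    return elements
```

Rejecting the DOCTYPE before any entity is declared means neither the /dev/random read nor the internal port scan can be triggered through this parser.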


Intercepted Email Attempts to Steal Payments

A reader sent in details of an incident that is currently being investigated in their environment. (Thank you Peter for sharing!) It appears to be a slick yet elaborate scam to divert a customer payment to the scammers: the scammer slips into an email conversation, goes undetected, and channels an ordinary payment for services or goods into his own coffers.

Here is a simple breakdown of the flow:

- The Supplier sends a business email to the Customer. The email mentions that a payment has been received and asks when the next payment will arrive.

- The Scammer intercepts and slightly alters the email.

- The Customer receives the email, seemingly from the Supplier but altered by the Scammer, with the following text slipped into it:

"KIndly inform when payment shall be made so i can provide you with our offshore trading account as our account department has just informed us that our regular account is right now under audit and government taxation process as such we cant recieve funds through it our account dept shall be providing us with our offshore trading account for our transactions. Please inform asap so our account department shall provide our offshore trading account for your remittance."

- The Scammer sets up a fake domain name with a similar look and feel. For example, if the legitimate domain is google.us, the fake one could be google-us.com.

- An email is sent to the Customer from the fake domain with the new account information to channel the funds:

"Kindly note that our account department has just informed us that our regular account is right now under audit and government taxation process as such we can't receive funds through it. Our account department has provided us with our Turkey offshore trading account for our transactions. Kindly remit 30% down payment for invoice no. 936911 to our offshore trading account as below;

Bank name: Xxxxx Xxxx

Swift code:XXXXXXXX

Router: 123456

Account name: Xxx XXX Xx

IBAN:TR123456789012345678901234

Account number:1234567-123

Address: Xxxxxxxxx Xxx Xx xxx Xxxxxxxx xxxxx Xxxxxxxx, Xxxxxx"


The Customer is very security-conscious and noticed the following red flags, which averted the fraud:

- Email was sent at an odd time (off hour for the time zones in question)

- The domain addresses in the spoofed email were incorrect (i.e. google-us.com vs. google.us).

- The email contained repeated text which added to the "spammy" feel of it.
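The lookalike domain can also be checked mechanically. A rough Python heuristic, assuming you maintain a list of your partners' real domains: strip dots and hyphens and compare similarity with difflib. The 0.8 threshold and the function names are arbitrary illustrations that would need tuning, and a check like this complements staff who question unusual payment instructions rather than replacing them:

```python
from difflib import SequenceMatcher

def normalize(domain):
    """Lower-case and drop dots/hyphens: google-us.com -> 'googleuscom'."""
    return domain.lower().replace(".", "").replace("-", "")

def is_lookalike(sender_domain, trusted_domains, threshold=0.8):
    """True if sender_domain is not trusted but closely resembles a trusted one."""
    trusted = [d.lower() for d in trusted_domains]
    sender = sender_domain.lower()
    if sender in trusted:
        return False
    return any(
        SequenceMatcher(None, normalize(sender), normalize(d)).ratio() >= threshold
        for d in trusted
    )

print(is_lookalike("google-us.com", ["google.us"]))
```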

This scam was averted by security-conscious business staff and properly analyzed by talented technical staff. We appreciate them sharing it with us.

What makes this scam elaborate is that the email appears to have been fully intercepted, and targeted because it mentioned a payment request. Also, the fake domain used in this incident was created hours before the fraudulent email with the account information was sent. The technical analysis showed that the email from the fake domain was sent from an IP not owned by the supplier or the customer.

This incident is still under investigation and we will provide more obfuscated details as they become available. Please comment and discuss with us if this has happened to your environment and what was done to mitigate and investigate things further.

-Kevin

--

ISC Handler on Duty


OpenSSL version 1.0.0l released

The OpenSSL project has announced a new release of its open source SSL/TLS toolkit, OpenSSL 1.0.0l. The release notes for the 1.0.0 branch list the following major changes:

Major changes between OpenSSL 1.0.0k and OpenSSL 1.0.0l [6 Jan 2014]

- Fix for DTLS retransmission bug CVE-2013-6450

Major changes between OpenSSL 1.0.0j and OpenSSL 1.0.0k [5 Feb 2013]:

- Fix for SSL/TLS/DTLS CBC plaintext recovery attack CVE-2013-0169

- Fix OCSP bad key DoS attack CVE-2013-0166

Major changes between OpenSSL 1.0.0i and OpenSSL 1.0.0j [10 May 2012]:

- Fix DTLS record length checking bug CVE-2012-2333

Major changes between OpenSSL 1.0.0h and OpenSSL 1.0.0i [19 Apr 2012]:

- Fix for ASN1 overflow bug CVE-2012-2110

Major changes between OpenSSL 1.0.0g and OpenSSL 1.0.0h [12 Mar 2012]:

- Fix for CMS/PKCS#7 MMA CVE-2012-0884

- Corrected fix for CVE-2011-4619

- Various DTLS fixes.

Major changes between OpenSSL 1.0.0f and OpenSSL 1.0.0g [18 Jan 2012]:

- Fix for DTLS DoS issue CVE-2012-0050

Major changes between OpenSSL 1.0.0e and OpenSSL 1.0.0f [4 Jan 2012]:

- Fix for DTLS plaintext recovery attack CVE-2011-4108

- Clear block padding bytes of SSL 3.0 records CVE-2011-4576

- Only allow one SGC handshake restart for SSL/TLS CVE-2011-4619

- Check parameters are not NULL in GOST ENGINE CVE-2012-0027

- Check for malformed RFC3779 data CVE-2011-4577

For more details:

http://www.openssl.org/news/openssl-1.0.0-notes.html
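To check whether a system still runs a 1.0.0 release older than 1.0.0l, you can compare the string printed by openssl version. A minimal Python sketch, assuming that output format (e.g. "OpenSSL 1.0.0k 5 Feb 2013"); note that many Linux distributions backport security fixes without changing the version letter, so treat a positive result as a prompt to check your vendor's advisories rather than as proof of vulnerability:

```python
import re

def older_than_1_0_0l(version_string):
    """True if `version_string` is an OpenSSL 1.0.0 release before 1.0.0l.

    Releases from other branches return False: the notes above only cover 1.0.0.
    """
    m = re.match(r"OpenSSL (\d+)\.(\d+)\.(\d+)([a-z]*)", version_string)
    if m is None:
        raise ValueError(f"unrecognised version string: {version_string!r}")
    branch = tuple(int(part) for part in m.group(1, 2, 3))
    if branch != (1, 0, 0):
        return False
    # Letter releases in the 1.0.0 branch sort alphabetically ("" < "k" < "l").
    return m.group(4) < "l"
```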


Incident response and the false sense of security

- Running a hardened web server as a reverse proxy to protect the actual application is a great idea. However, if the actual web application also listens on a publicly available TCP port, there is nothing to stop the attacker from going after the application directly, bypassing the proxy.

(If possible, always bind the applications to localhost only, or at least use the firewall to limit access to the application. This is how the attacker got a foothold on the system: a known vulnerable web application, and bypassing the simple but efficient virtual patching done by the reverse proxy.)

- Hard-coded passwords and password reuse: as it turned out, all of the IT systems and components used the same administrator password. The original password could be found in a publicly readable backup script on a compromised server located in the DMZ.

(The backup process is one of the most sensitive elements of the system; should everything else fail, backups are all you have. If privilege separation had been implemented and properly used, the attacker wouldn’t have gotten the administrator’s password. Finally, there is no excuse for password reuse: password management applications are widely available and really easy to use.)

- Centralised logging can be very useful, especially if it’s used with some kind of log monitoring solution. Unfortunately, it can also create extra work if you try to review logs from the incident and notice that a large portion of the systems have their clocks off by minutes or hours.

(Keeping all your system clocks in sync is really important. NTP clients do the job very well and are already built into most, if not all, network equipment and general purpose operating systems. Another thing to keep in mind is time zones: make sure all systems use the same time zone and, if possible, pick one that doesn’t observe Daylight Saving Time (DST), as DST has great potential to create additional issues or delays if the incident spans systems located in more than one country, especially if it happened around a DST time change. Remember: simplicity is your friend.)
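When clocks were not in sync, one workaround during the review is to estimate each system's offset from an event that every device logged (for example a single login or connection), then shift that system's timestamps accordingly. A minimal Python sketch, assuming all timestamps are already in the same time zone and that each clock's error stayed roughly constant during the incident; host names are hypothetical:

```python
from datetime import datetime, timedelta

def clock_offsets(reference_time, observed_times):
    """Offset of each host's clock from the reference (positive = host is ahead).

    reference_time: trusted (e.g. NTP-synced) time of one shared event.
    observed_times: {host: time that host logged for the same event}.
    """
    return {host: ts - reference_time for host, ts in observed_times.items()}

def to_reference(timestamp, offset):
    """Shift a timestamp logged by a host back onto the reference clock."""
    return timestamp - offset

reference = datetime(2014, 1, 3, 12, 0, 0)
offsets = clock_offsets(reference, {
    "web01": reference + timedelta(minutes=7),  # clock 7 minutes fast
    "db01": reference - timedelta(hours=1),     # clock an hour behind, e.g. a missed DST change
})
print(to_reference(datetime(2014, 1, 3, 12, 30), offsets["web01"]))
```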

Some interesting DST facts:

- Different countries observe DST on different dates: for example, in the US, Mexico and most of Canada, DST begins about two weeks earlier than in European countries.

- China, which spans five time zones, uses only one time zone (GMT+8) and doesn’t observe DST.

- In the Southern Hemisphere, where the seasons are opposite to those in the Northern Hemisphere, so is DST, starting in late October and ending in late March.

- Many countries don’t observe DST at all.


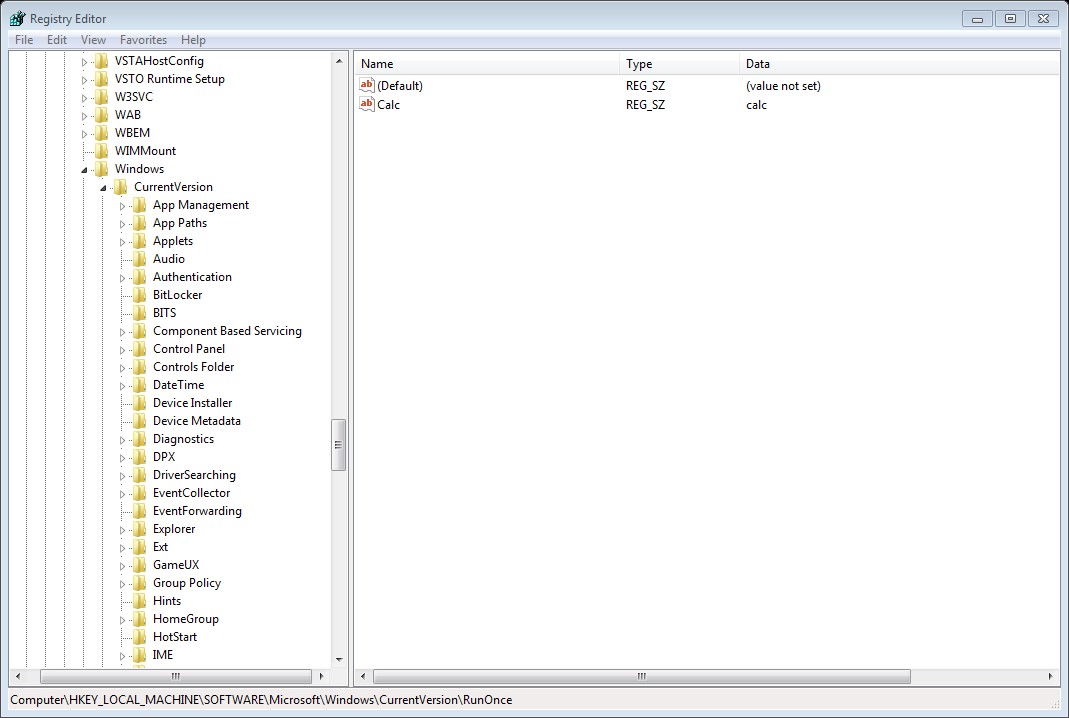

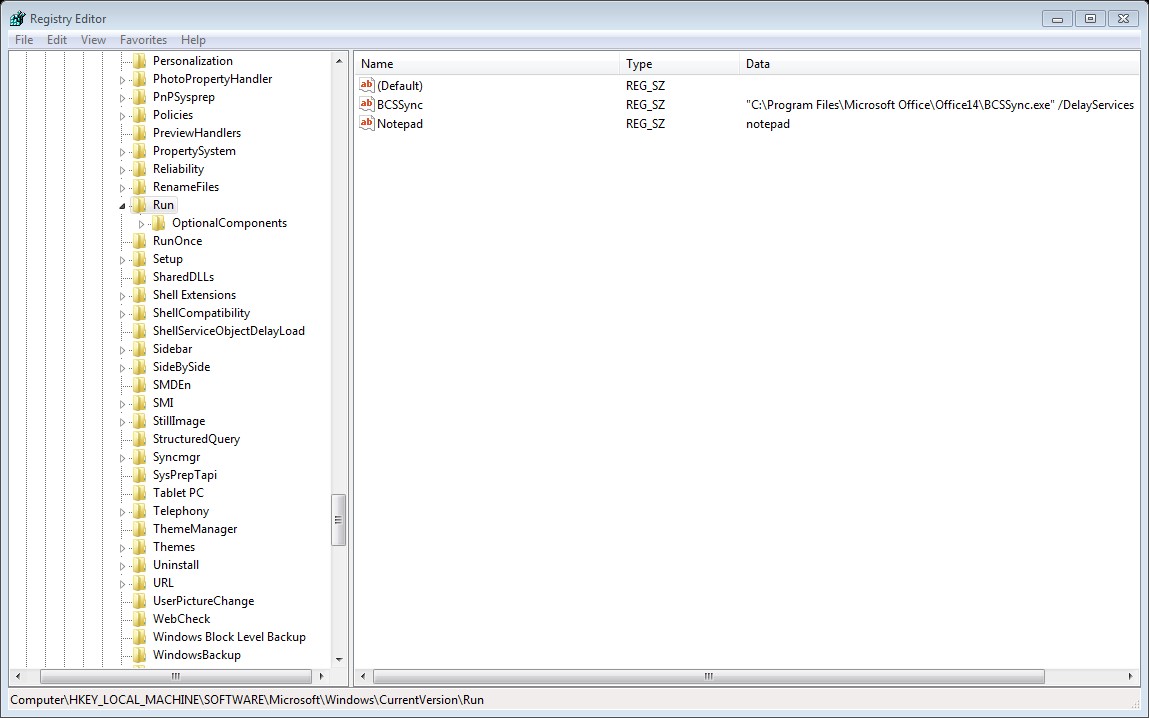

Windows Autorun Part-2

In the previous diary I talked about startup folders and shell folders registry keys. In this diary I will continue with more places to check if you suspect malware or a compromised system.

2-Run and RunOnce registry keys:

HKEY_LOCAL_MACHINE\Software\Microsoft\Windows\CurrentVersion\Run\

HKEY_LOCAL_MACHINE\Software\Microsoft\Windows\CurrentVersion\RunOnce\

Any executable referenced in the above registry keys starts automatically whenever a user logs on. The difference between Run and RunOnce is that a RunOnce value runs one time and is then deleted, while a Run value runs every time.

HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Run\

HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\RunOnce\

The above keys apply to a specific user. Again, the difference between Run and RunOnce is that a RunOnce value runs one time and is then deleted, while a Run value runs every time that specific user logs on.
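If you collect these keys with the built-in reg command (for example reg query HKCU\Software\Microsoft\Windows\CurrentVersion\Run), a small parser makes it easy to compare results across machines or against Autoruns. A Python sketch, assuming reg query's usual layout of an unindented key path followed by indented value lines whose name, type and data are separated by four spaces; the sample entry is made up:

```python
import re

# Indented "name    REG_TYPE    data" line; data may be missing for empty values.
VALUE_LINE = re.compile(r"^\s+(.+?)\s{4}(REG_\w+)(?:\s{4}(.*))?$")

def parse_reg_query(text):
    """Return (key, value_name, value_type, data) tuples from reg query output."""
    entries, key = [], None
    for line in text.splitlines():
        if line.startswith("HKEY_"):
            key = line.strip()
            continue
        match = VALUE_LINE.match(line)
        if match and key:
            name, value_type, data = match.groups()
            entries.append((key, name, value_type, data or ""))
    return entries

SAMPLE = r"""
HKEY_CURRENT_USER\Software\Microsoft\Windows\CurrentVersion\Run
    Windows Update    REG_SZ    C:\Users\Public\svch0st.exe
"""

for key, name, value_type, data in parse_reg_query(SAMPLE):
    print(f"{name}: {data}")
```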

3- Services

HKEY_LOCAL_MACHINE\System\CurrentControlSet\Services

Here you can find the list of installed services; each service has a Start value that sets its startup type, as shown in the following table:

| Value | Startup Type |
|---|---|
| 2 | Automatic |
| 3 | Manual |
| 4 | Disabled |
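The same decoding can be scripted when reviewing many services. A Python sketch, assuming you have already collected each service's Start value (for example from reg query output, where REG_DWORD data is printed in hex such as 0x2); values 2-4 come from the table above, while 0 (Boot) and 1 (System) are the additional values used for drivers. The service names in the example are hypothetical:

```python
# Start values under HKLM\System\CurrentControlSet\Services\<name>\Start.
START_TYPES = {0: "Boot", 1: "System", 2: "Automatic", 3: "Manual", 4: "Disabled"}

def start_value(reg_dword_text):
    """Convert reg query DWORD data such as '0x2' to an integer."""
    return int(reg_dword_text, 16)

def describe(start):
    return START_TYPES.get(start, f"Unknown ({start})")

def starts_without_user_action(services):
    """Names of services/drivers that start on their own (Start 0, 1 or 2)."""
    return sorted(name for name, start in services.items() if start in (0, 1, 2))

# Hypothetical collected values.
collected = {"Spooler": start_value("0x2"), "upd_svc": 2, "Fax": 3, "RemoteRegistry": 4}
for name in starts_without_user_action(collected):
    print(name, describe(collected[name]))
```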

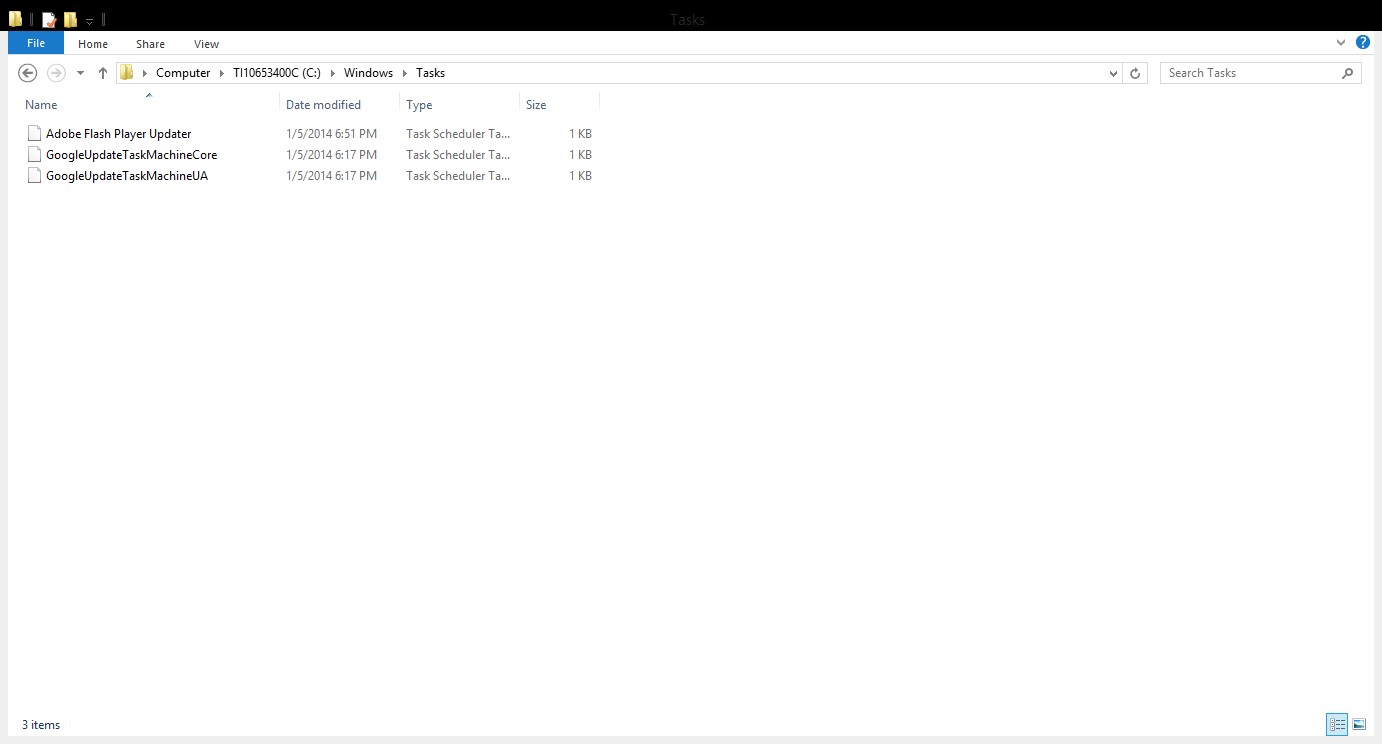

4-Scheduled tasks:

Scheduled tasks can be used to run an executable on a schedule. The tasks are located in the %windir%\Tasks folder. Of course, attackers and malware will not use a task name such as ‘I am malicious’; instead they will use names that sound legitimate, such as ‘Windows Update’.


Malicious Ads from Yahoo

According to a blog post from fox-it.com, they found ads.yahoo.com serving malicious ads on Yahoo's home page as early as December 30th. The malicious traffic appeared to come from the following subnets: 192.133.137.0/24 and 193.169.245.0/24. Most infections seem to be in Europe. According to the blog, Yahoo appears to be aware of and addressing the issue.
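To answer that question for your own network, one approach is to check proxy or firewall logs for connections involving the two subnets above. A Python sketch, assuming you have already extracted the remote IP addresses from your logs (the extraction step and the sample addresses are assumptions):

```python
import ipaddress

# Subnets named in the fox-it.com blog post as the source of the malicious traffic.
SUSPECT_NETS = [ipaddress.ip_network(net) for net in ("192.133.137.0/24", "193.169.245.0/24")]

def suspect_hits(ip_strings):
    """Return the addresses that fall inside one of the suspect subnets."""
    hits = []
    for text in ip_strings:
        try:
            addr = ipaddress.ip_address(text.strip())
        except ValueError:
            continue  # skip malformed fields from log parsing
        if any(addr in net for net in SUSPECT_NETS):
            hits.append(str(addr))
    return hits

print(suspect_hits(["192.133.137.15", "10.0.0.1", "193.169.245.200", "not-an-ip"]))
```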

Has anyone else seen this?

--

Tom Webb


Monitoring Windows Networks Using Syslog (Part One)

As an incident responder, I love high-value logs. We all know Windows event logs can be super chatty, but with the right tuning they can be very useful. I’ve tried out several utilities for forwarding Windows event logs to syslog, but I’ve found eventlog-to-syslog (code.google.com/p/eventlog-to-syslog) to be my favorite due to its simple config and install.

If you are not logging anything from your Windows clients and you suddenly turn on everything, you will be overwhelmed. I’m going to cover a couple of logs to start looking at in this post and go into more detail in my next post. AppLocker, EMET (http://support.microsoft.com/kb/2458544/en-US), Windows Defender and application error logs are some of the most valuable logs when looking for compromised systems. These are what we are going to cover today.

AppLocker Setup

If you haven’t set up AppLocker in your environment, now would be a great time to get started. Microsoft has a great document that covers it in complete detail (http://download.microsoft.com/download/B/F/0/BF0FC8F8-178E-4866-BBC3-178884A09E18/AppLocker-Design-Guide.pdf). For most, using the Path Rules will get you what you need. The pros and cons of each ruleset are covered in section 2.4.4, pages 17-22.

The MS doc is quite extensive, but for a quick start guide try the NCSC Guide (http://ncsc.govt.nz/sites/default/files/articles/NCSC%20Applocker-public%20v1.0.5.pdf)

The basic idea of the path rules is to allow programs to run from the normal folders (e.g. the Program Files and Windows folders) and block everything else. The NSA SRP guide (YEA YEA, I know) gives a good list of rules to use with AppLocker (www.nsa.gov/ia/_files/os/win2k/application_whitelisting_using_srp.pdf). You will run into some issues with Chrome and other apps (e.g. Spotify) that run from the user's AppData folder, but that is where the syslog auditing comes into play. First deploy this in audit mode, and then, once you are comfortable, move to enforcement mode. If you already have a software inventory product, you will be able to leverage that information to feed into your policy. Much has been written about this, but I wanted to cover the basics.
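The allow/deny decision that path rules make can be illustrated with a short Python sketch. This is a simplified model, not how AppLocker evaluates rules internally: the allowed roots are assumptions mirroring the default rules for Program Files and Windows, and real policies also need exceptions, since some folders under the Windows directory are writable by ordinary users:

```python
import ntpath
from pathlib import PureWindowsPath

# Hypothetical allow-list modelled on the default path rules.
ALLOWED_ROOTS = [
    PureWindowsPath(r"C:\Program Files"),
    PureWindowsPath(r"C:\Program Files (x86)"),
    PureWindowsPath(r"C:\Windows"),
]

def would_run(exe_path):
    """True if exe_path lies under an allowed root (comparison is case-insensitive).

    Requires Python 3.9+ for PurePath.is_relative_to.
    """
    # Collapse "..", so C:\Windows\..\Users\x.exe cannot sneak past the check.
    path = PureWindowsPath(ntpath.normpath(exe_path))
    return any(path.is_relative_to(root) for root in ALLOWED_ROOTS)
```

Comparing path components rather than string prefixes matters: a folder such as C:\Program Files Evil starts with the same characters as C:\Program Files but is not inside it.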

EventLog-to-Syslog Installation

Download the software from https://code.google.com/p/eventlog-to-syslog/.

1. To install it as a service, simply run:

C:\>evtsys.exe -i -h <Syslog Server IP>

2. Copy evtsys.cfg to the C:\Windows\System32\ directory (more on this below).

3. Restart the service.

C:\>net stop evtsys

C:\>net start evtsys

That's it; you should now start receiving logs.

Evtsys.cfg Setup

A basic version of the evtsys.cfg can be found on my GitHub (http://goo.gl/79spGK). This config file is for Windows 7 and up. Please rename the file to evtsys.cfg before using it. The file uses XPath for the filters, which makes creating new ones easy. Here is a quick way to create your own:

1. In the Windows Event Viewer, select the event you wish to create a rule from.

2. Click the Details tab and select XML View.

3. Determine the channel for the event, along with any specific event IDs you want from that channel.

In this case the Windows Defender Channel is:

<Channel>Microsoft-Windows-Windows Defender/Operational</Channel>

The event IDs we want are: 1005, 1006, 1010, 1012, 1014, 2001, 2003, 2004, 3002, 5008

4. Putting it all together: the format for a rule is XPath:<PathtoChannel>:<Select statement>, and each rule must be on a single line. Spaces are OK in the channel name, but the Select statement must use double quotes.

5. Click the Filter Current Log button on the side of the Event Viewer and enter the additional data you want to filter on. Then click the XML tab at the top. You can cut and paste the entire <Select Path portion into your filter.

XPath:Microsoft-Windows-Windows Defender/Operational:<Select Path="Microsoft-Windows-Windows Defender/Operational">*[System[(EventID=1005 or EventID=1006 or EventID=1010 or EventID=1012 or EventID=1014 or EventID=2001 or EventID=2003 or EventID=2004 or EventID=3002 or EventID=5008)]]</Select>
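Because every rule has to sit on one line and repeat the channel name, generating rules can be less error-prone than typing them. A small Python sketch that builds a rule in the XPath:<channel>:<Select statement> format described in step 4 (the function name is hypothetical, and generated rules should still be tested against a real evtsys install):

```python
def evtsys_xpath_rule(channel, event_ids):
    """Build one single-line evtsys.cfg rule selecting the given event IDs."""
    condition = " or ".join(f"EventID={event_id}" for event_id in event_ids)
    select = f'<Select Path="{channel}">*[System[({condition})]]</Select>'
    return f"XPath:{channel}:{select}"

DEFENDER = "Microsoft-Windows-Windows Defender/Operational"
print(evtsys_xpath_rule(DEFENDER, [1005, 1006, 1010, 1012, 1014, 2001, 2003, 2004, 3002, 5008]))
```

For the Windows Defender event IDs listed above, this produces a single line with straight double quotes, ready to paste into evtsys.cfg.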

Other items that will be syslogged are:

- Application crashes

- EMET

- Windows Defender

- Account lockouts

- User added to a privileged group

Finished Product

The raw syslog message for a blocked AppLocker event looks like this:

Jan 3 12:59:35 WIN-C AppLocker: 8004: %OSDRIVE%\TEMP\bob\X64\AGENT.EXE was prevented from running.

The raw syslog message for an allowed program:

Jan 3 14:37:51 WIN-CC AppLocker: 8002: %SYSTEM32%\SEARCHPROTOCOLHOST.EXE was allowed to run.

Simple Stats

To get a list of all applications that have been blocked, use the following command:

$ cat /var/log/syslog | fgrep AppLocker | fgrep prevent | awk '{print $7}' | sort | uniq -c

1 %OSDRIVE%\TEMP\bob\X64\AGENT.EXE
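The awk one-liner splits on spaces, so a blocked path containing a space (for example under Program Files) would be cut short. A Python sketch that counts blocked programs by matching the message text instead, assuming the line format shown in the samples above:

```python
import re
from collections import Counter

# "... AppLocker: 8004: <path> was prevented from running."
BLOCKED = re.compile(r"AppLocker: \d+: (.+) was prevented from running")

def blocked_programs(lines):
    """Count how often each program path was blocked."""
    counts = Counter()
    for line in lines:
        match = BLOCKED.search(line)
        if match:
            counts[match.group(1)] += 1
    return counts
```

For example, opening /var/log/syslog and printing blocked_programs(log).most_common() lists the most frequently blocked programs first.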

Next Time on ISC...

In the next post I’ll cover a more comprehensive config file to detect attackers and integrate the logs for reporting.

--

Tom Webb


Scans Increase for New Linksys Backdoor (32764/TCP)

We are seeing a lot of probes for port 32764/TCP. According to a post on GitHub from 2 days ago, some Linksys devices may be listening on this port, enabling full unauthenticated admin access. [1]

At this point, I urge everybody to scan their networks for devices listening on port 32764/TCP. If you use a Linksys router, try to scan its public IP address from outside your network.
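For a quick check of individual hosts, a plain TCP connect test is enough to see whether anything is listening. A minimal Python sketch (the function names and example addresses are hypothetical); only scan networks you are authorised to scan, and keep in mind that an open port is a reason to investigate, not proof that the device has this backdoor:

```python
import socket

def port_open(host, port=32764, timeout=2.0):
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out
        return False

def hosts_listening(hosts, port=32764):
    """Return the hosts from `hosts` that accept connections on `port`."""
    return [host for host in hosts if port_open(host, port)]
```

For example, hosts_listening([f"192.168.1.{i}" for i in range(1, 255)]) checks a typical home /24 one address at a time; from outside your network you would pass your router's public IP instead.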

Our data shows almost no scans of this port prior to today, but a large number from 3 source IPs today. By far the largest number of scans comes from 80.82.78.9. ShodanHQ has also been actively probing this port for the last couple of days.

https://isc.sans.edu/portascii.html?port=32764&start=2013-12-03&end=2014-01-02

| Date | Records | Targets | Sources | TCP/UDP*100 |
|---|---|---|---|---|
| Dec 5th | 10 | 2 | 3 | 90 |
| Dec 9th | 11 | 2 | 5 | 100 |
| Dec 10th | 17 | 5 | 6 | 100 |
| Jan 2nd | 15068 | 3833 | 3 | 100 |

We only have 10 different source IP addresses originating more than 10 port 32764 scans per day over the last 30 days:

| date | source | count(*) |
|---|---|---|
| 2014-01-02 | 080.082.078.009 | 18392 |
| 2014-01-01 | 198.020.069.074 | 768 |
| 2014-01-02 | 198.020.069.074 | 585 |
| 2014-01-02 | 178.079.136.162 | 226 |
| 2013-12-31 | 198.020.069.074 | 102 |
| 2014-01-02 | 072.182.101.054 | 74 |

The three rows for 198.020.069.074 are interesting: 3 days of early hits from ShodanHQ.

[1] https://github.com/elvanderb/TCP-32764

-----

Johannes B. Ullrich, Ph.D.

SANS Technology Institute

Twitter


OpenSSL.org Defaced by Attackers Gaining Access to Hypervisor

By now, most of you have heard that the openssl.org website was defaced. While the source code and repositories were not tampered with, this obviously concerned people. What is more interesting is that the attack was made possible by gaining access to the hypervisor that hosts the VM responsible for the website. Attacks of this sort are likely to become more common over time, as they provide an easy way to take over a host without the effort of actually rooting a box. (Social engineering credentials is easy, ask the Syrian Electronic Army... actual penetrations take effort.)

The key takeaway is, obviously, to protect the hypervisor from unauthorized access. Beyond that, protect your VMs as if they were physical machines and, where feasible, use a BIOS password and a boot password, and disable the DVD-ROM drive and USB storage. Don't trust the hypervisor or VM host to secure your machine for you. For additional reading, see the NIST Guide to Security for Full Virtualization Technologies.

More on the openssl.org defacement as it develops.

--

John Bambenek

bambenek \at\ gmail /dot/ com

Bambenek Consulting


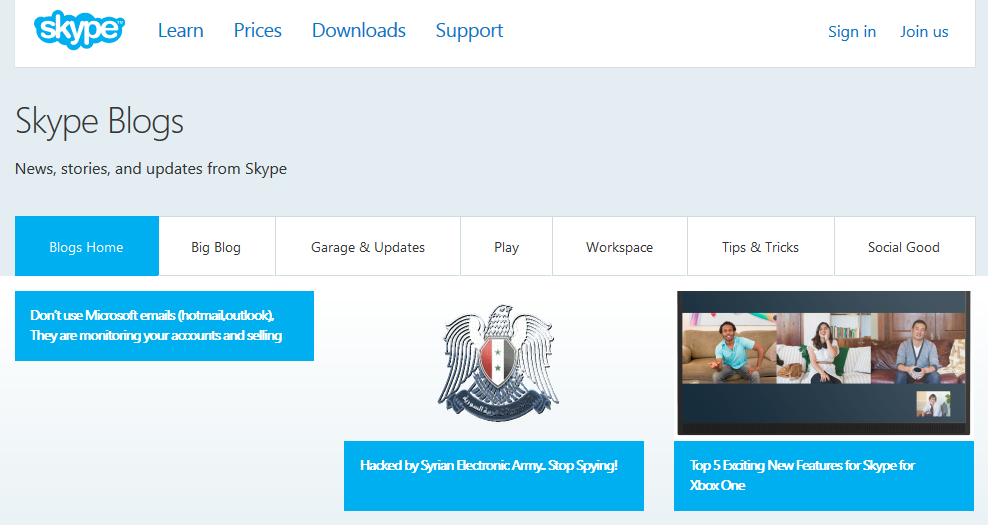

Happy New Year from the Syrian Electronic Army - Skype’s Social Media Accounts Hacked

UPDATE 1500 PDT 01 JAN: Skype Blogs now recovered and reverted to normal. Be sure to add all available protection to your social media accounts and don't use one password to access them all.

The Syrian Electronic Army (SEA) has compromised Skype’s blog and posted anti-NSA and anti-Microsoft messages with such joyful tidbits as "Don’t use Microsoft emails (hotmail,outlook), They are monitoring your accounts and selling the data to the governments."

SEA also gained control of Skype’s Facebook and Twitter accounts although messages posted have since been removed.

Follow all the fun on Twitter.


Six degrees of celebration: Juniper, ANT, Shodan, Maltego, Cisco, and Tails

Happy New Year! Hope 2014 is a great year for you.

Ok, so I'm stretching a bit here on the six degrees, but it's a chance to tie a few interesting pieces of news together for you as we celebrate the new year.

1) As reported earlier by John, Juniper had an issue with its Juniper SSL VPN specific to the UAC Host Checker.

KB article on the issue: https://kb.juniper.net/TSB16290

Software fix: http://www.juniper.net/support/downloads/?p=esap

2) The latest bit of news regarding the NSA includes the ANT group for the Tailored Access Operations unit. Their tactics revealed in the Der Spiegel article include malware for Juniper and Cisco firewalls such as Jetplow, a "firmware persistence implant" for taking over Cisco PIX and ASA firewalls.

3) The Shodan blog announced a facelift for the Shodan add-on for Maltego and its relaunch on https://maltego.shodan.io.

4) The Shodan add-on for Maltego (Shodan API key required) is really useful for conducting transforms to search Shodan for the likes of Juniper and Cisco firewalls.

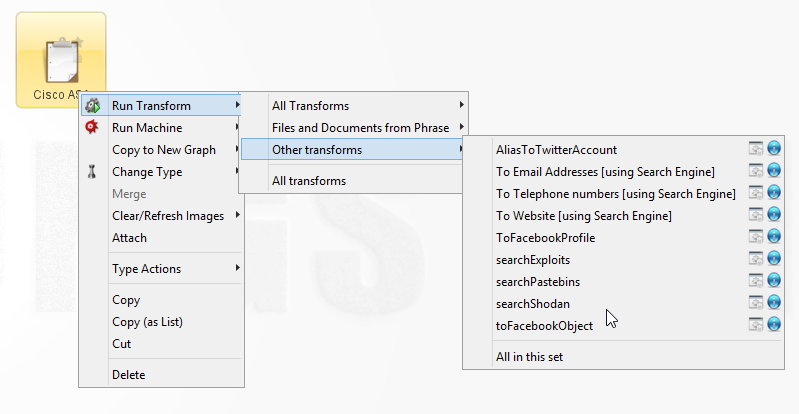

As an example, you can conduct a searchShodan transform on the phrase Cisco ASA as seen in Figure 1.

Figure 1

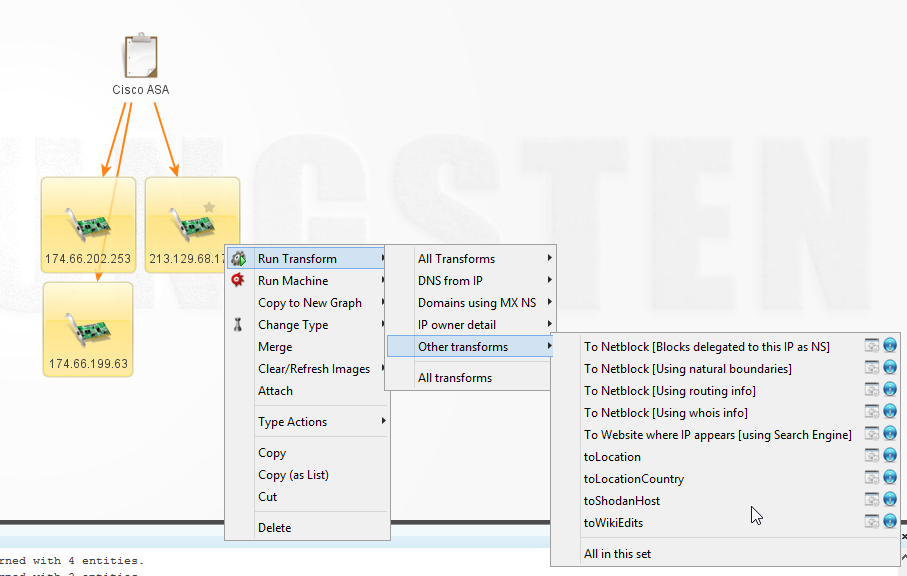

You could then conduct a toShodanHost transform on the results generated by searchShodan, as seen in Figure 2.

Figure 2

Your results would then likely appear as seen in Figure 3.


Figure 3

5) Cisco says they're very concerned over the NSA allegations and have posted a reply via Cisco Security Response as well as additional comments from John Stewart.

6) Many readers are also concerned about their privacy as a result of all the NSA disclosures and allegations. To aid in attempting improved privacy, I've posted my latest toolsmith on Tails: The Amnesiac Incognito Live System, privacy for anyone anywhere.

So, how's that for tying it all together in six little steps? :-)

With that, good reader, I again bid you and yours a happy new year and best wishes in 2014.

