Stuff I Learned Decrypting

With the prevalence of Next-Gen Firewalls, we're seeing a new wave of organizations decrypting traffic at the network edge, between organizations and the public internet.

This is a good thing. As we see more and more "legit" HTTPS traffic, we're also seeing attackers follow that trend: malware and attacks are now often encrypted using standards-based protocols as well.

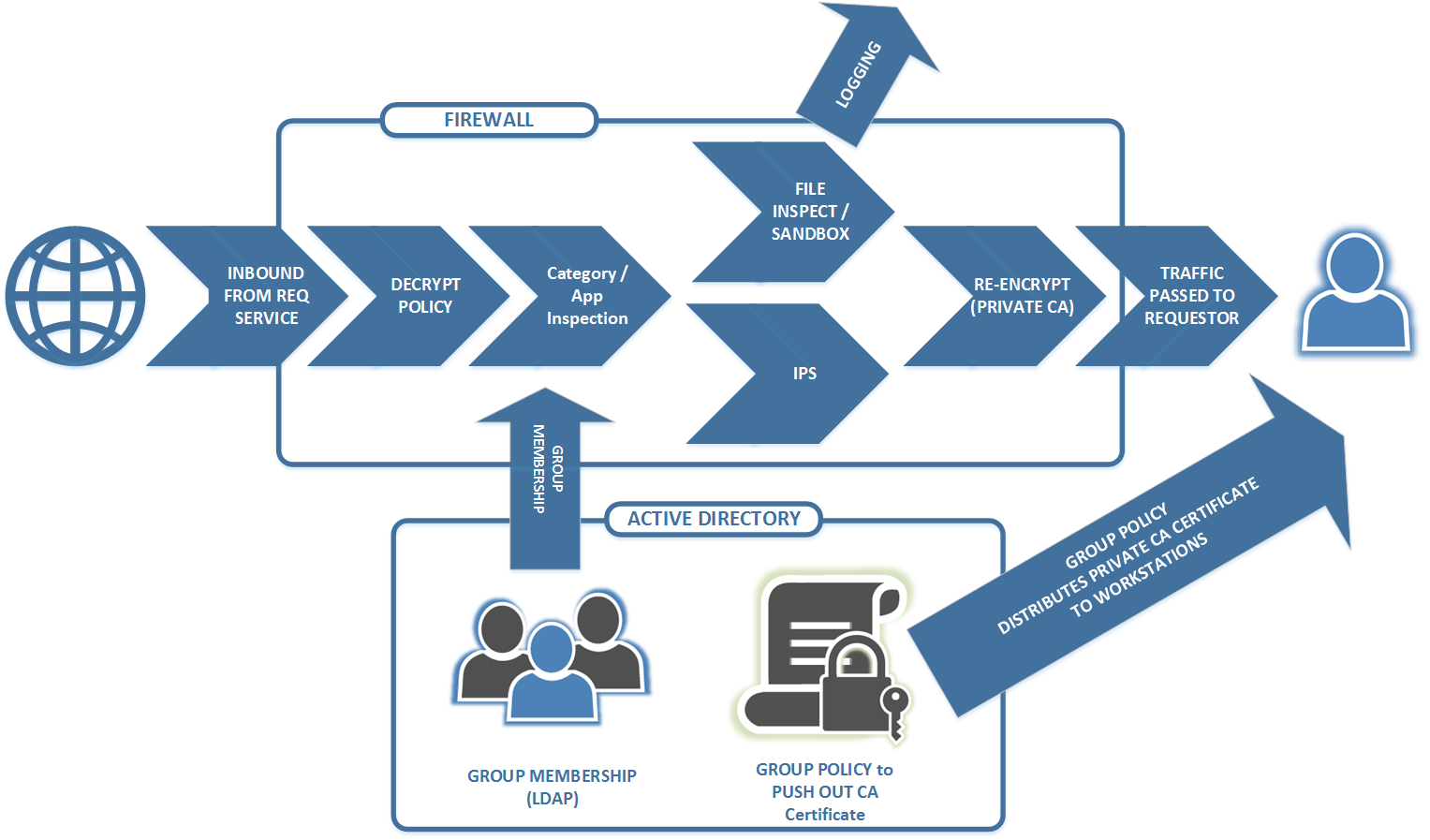

How does this look in practice? Usually something like this:

But as we do the "decrypt / inspect / encrypt" thing, we're also being forced to deal with applications that do things in unexpected ways - stuff like ...

What NOT to Decrypt

There is *always* a list of applications that you don't want to decrypt. For privacy reasons, you almost never decrypt healthcare sites and banking/financial sites. Not only do we not want that information in a log someplace, it's likely either illegal or a compliance violation to decrypt and inspect that traffic. Depending on your jurisdiction and industry, that list may of course vary.

Apps that run a Private CA and/or do Certificate Pinning

I've seen some banking terminals (both ATMs and POS platforms) do certificate pinning, which is exactly as you would hope should be going on. Of course they'll run their own CA, and reject any traffic that is encrypted using a certificate that isn't their own. And of course, we simply put that banking terminal (ATM or payment card processor) into an exemption list - we don't *want* to decrypt that traffic - if we could decrypt that traffic successfully and still have it operate, we'd report that as an issue to the payment processor. Where possible though, it's also a good practice to isolate these units, and only allow them to communicate to their payment card "mothership". You definitely don't want to be the source of an ATM breach, and if they get compromised from some other source, you don't want their attacker to see your network either.
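To make it concrete why a pinned application refuses an intercepted session, here is a minimal sketch of a pin check. It assumes the client pins the SHA-256 fingerprint of the whole certificate; real implementations vary (some pin the public key or the issuing CA instead). The byte strings are placeholders standing in for DER-encoded certificates, and the function names are made up for illustration.

```python
import hashlib


def fingerprint(cert_der: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(cert_der).hexdigest()


def pin_matches(presented_cert_der: bytes, pinned_fingerprints: set[str]) -> bool:
    """True only if the presented certificate is one the client already trusts."""
    return fingerprint(presented_cert_der) in pinned_fingerprints


# Placeholder bytes, NOT real certificates (assumption for illustration).
vendor_cert = b"certificate issued by the payment processor's private CA"
firewall_cert = b"certificate re-issued by the decrypting firewall"

# The terminal ships with the vendor's fingerprint baked in.
pins = {fingerprint(vendor_cert)}

print("direct connection accepted:", pin_matches(vendor_cert, pins))
print("intercepted connection accepted:", pin_matches(firewall_cert, pins))
```

Because the firewall has to present its own re-signed certificate to the client, the fingerprint can never match the pin, which is why an exemption is the only workable option for these devices.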

Surprisingly though, I'm seeing the same sort of behaviour in courier apps. You know, that desktop app that shipping & receiving (or the office manager, or whoever does shipping in your organisation) runs? Again, because the private CA is pinned, the only way to deal with it is to exempt the traffic between that workstation and the DN (Distinguished Name) of the target (*.purolator.com in this case). Good on them for going the extra mile, but it sure was a surprise to find it in the logs! We still get to decrypt everything else to and from those machines - they're not isolated, but exempting traffic for that one application is straightforward.
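The logic of that kind of narrow exemption can be sketched in a few lines. This is not any vendor's rule syntax, just an illustration; the workstation address and the hostname `shipping.purolator.com` are hypothetical, and the shell-style wildcard matching from Python's `fnmatch` is looser than strict certificate wildcard rules (the `*` here also spans multiple labels).

```python
from fnmatch import fnmatch

# Each exemption ties one source workstation to one destination pattern.
# The IP address is a made-up example.
EXEMPTIONS = [
    {"source": "10.1.20.15", "pattern": "*.purolator.com"},
]


def should_decrypt(source_ip: str, server_name: str) -> bool:
    """Decrypt everything except traffic matching an explicit exemption."""
    name = server_name.lower().rstrip(".")
    for rule in EXEMPTIONS:
        if rule["source"] == source_ip and fnmatch(name, rule["pattern"]):
            return False
    return True


# The shipping workstation talking to the courier: exempt.
print(should_decrypt("10.1.20.15", "shipping.purolator.com"))
# The same workstation browsing anywhere else: still decrypted.
print(should_decrypt("10.1.20.15", "www.example.com"))
# A different workstation reaching the courier site: still decrypted.
print(should_decrypt("10.1.20.99", "shipping.purolator.com"))
```

Keying the exemption on both the source and the destination keeps the hole as small as possible.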

Apps that "Break the Rules"

A more troubling situation is that we do see some applications that break or bend the rules for good encryption. For instance, apps that accept expired certificates from their server.

LogMeIn (the support/remote control application) for instance is currently in that situation. Their web services back-end currently uses an expired certificate. Worse yet, their upstream has no fixed IP address (it's in a CDN), and the traffic is over tcp/443. Without putting some work into the rules, the decryption engine will simply drop the traffic because the cert is expired. So what this means for perimeter rules is that we need to match on the target DN (there's a list), and for those DNs we have to allow expired certificates. And right below that rule, we need a second rule that has the same DNs but doesn't allow expired certificates (for when they fix this situation).
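One way to picture that rule pair is as a top-down, first-match rule list. The sketch below assumes the certificate's expired/valid state is itself a match condition, which is how the second rule can ever be reached; whether your platform works that way is something to check in its documentation. The domain `*.vendor.example` and all rule and action names are placeholders, not the real list of DNs.

```python
from dataclasses import dataclass
from fnmatch import fnmatch
from typing import Optional


@dataclass
class Rule:
    name: str
    patterns: list[str]
    cert_expired: Optional[bool]  # None means "match either state"
    action: str


# Evaluated top-down; the first matching rule wins (assumed behaviour).
RULES = [
    Rule("vendor-expired-cert", ["*.vendor.example"], True, "decrypt, allow expired"),
    Rule("vendor-valid-cert", ["*.vendor.example"], False, "decrypt"),
    Rule("default-expired", ["*"], True, "block"),
    Rule("default", ["*"], None, "decrypt"),
]


def evaluate(server_name: str, cert_expired: bool) -> tuple[str, str]:
    """Return (rule name, action) for the first rule that matches."""
    name = server_name.lower()
    for rule in RULES:
        name_ok = any(fnmatch(name, pattern) for pattern in rule.patterns)
        status_ok = rule.cert_expired is None or rule.cert_expired == cert_expired
        if name_ok and status_ok:
            return rule.name, rule.action
    return "implicit", "drop"


print(evaluate("api.vendor.example", cert_expired=True))
print(evaluate("api.vendor.example", cert_expired=False))
print(evaluate("www.other.example", cert_expired=True))
```

With this layout, hit counts on the first rule dropping to zero would be a decent signal that the vendor has renewed the certificate and the exception can be retired.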

We see similar things for applications that use APIs inside of SSL or TLS - if they use weaker ciphers or older protocols, or pin certificates, then to allow that app to work we need to set the allowed DNs and permit whatever bad habit that app has.

Other Bad Habits:

We also see lots of file downloads happen for one application or another. So if your organization blocks downloading JAR files for instance, any Java-based app that pulls a JAR will trigger that block, breaking the application. It's very common for instance to see "all-in-one" helpdesk applications download a package of EXEs, JARs and MSIs when a new station is enrolled. As a side note, it's also common to see these apps pull down older versions of Java, Acrobat Reader and other apps - what could possibly go wrong with that?

Logging is your friend for all of these "something is broken" situations - for any application that doesn't work, have a test user break it "on film" and note the time. Once you've got it logged, you'll have the info you need to allow that application (and only that application) to do whatever dumb thing (or smart thing) that it needs to do to run, without allowing that behaviour on other applications.
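As a rough illustration of the "note the time, then go to the logs" step, the sketch below filters log entries down to the blocks for one test user within a couple of minutes of the noted time. The log format, field names, hostnames and sample entries are all invented for the example; a real firewall export will look different.

```python
from datetime import datetime, timedelta

TIME_FORMAT = "%Y-%m-%d %H:%M:%S"

# Invented sample entries (assumption: logs exported as simple records).
SAMPLE_LOG = [
    {"time": "2017-02-13 10:02:10", "user": "testuser",
     "dest": "www.example.com", "action": "allow", "reason": ""},
    {"time": "2017-02-13 10:14:05", "user": "testuser",
     "dest": "updates.helpdesk.example", "action": "block", "reason": "file type: jar"},
    {"time": "2017-02-13 10:14:40", "user": "someone-else",
     "dest": "files.example.net", "action": "block", "reason": "file type: exe"},
    {"time": "2017-02-13 11:30:00", "user": "testuser",
     "dest": "old.example.org", "action": "block", "reason": "expired certificate"},
]


def blocked_events_near(log, user, noted_time, window_minutes=2):
    """Return block events for one user within +/- window of the noted time."""
    noted = datetime.strptime(noted_time, TIME_FORMAT)
    window = timedelta(minutes=window_minutes)
    hits = []
    for entry in log:
        when = datetime.strptime(entry["time"], TIME_FORMAT)
        if (entry["user"] == user
                and entry["action"] == "block"
                and abs(when - noted) <= window):
            hits.append(entry)
    return hits


# The test user broke the app at roughly 10:14.
for event in blocked_events_near(SAMPLE_LOG, "testuser", "2017-02-13 10:14:00"):
    print(event["time"], event["dest"], event["reason"])
```

The destination and block reason that come back are what the exception should be scoped to, and nothing wider.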

For the helpdesk app example - in some cases we luck out and can set up a local repository; in other cases the "cloudy" nature of the app can't be worked around, and we need to permit exemptions.

Final advice? Once you have a smarter perimeter appliance like this in play, be prepared for your users to request that you "whitelist" a never-ending list of websites and applications. Before you do that, make sure that they're actually blocked for one behaviour or another - in most cases they work just fine. I'm still working out why human nature pushes folks to want a mile-long whitelist rule - one that has a "pass everything, trust it all" permission. So far, for most of those I leave all the decryption, traffic inspection, IPS and file inspection/sandboxing in play - the list of sites where you *don't* want that going on is pretty short.

If you've gone down the "decrypt/inspect/re-encrypt" road at your network edge, what problems have you found? How did you work around them? Please, use our comment form and let us (and everyone else) know!

===============

Rob VandenBrink

Compugen

Comments

Anonymous

Feb 13th 2017

8 years ago

Came here to mention exactly this. +1

Anonymous

Feb 13th 2017

8 years ago

I would change your drawing that the packets have to pass the FW Policy first. If they are not allowed then they will be dropped and not inspected.

Regards,

CJ

Anonymous

Feb 13th 2017

8 years ago

-BD

Anonymous

Feb 13th 2017

8 years ago

For instance, in a Cisco ASA / Firepower build, the ASA might block a packet before it ever goes to the SFR module. However, once it's in the SFR module it'll likely hit decrypt first.

On a Cisco FTD or Palo Alto, things really could go either way depending on how you build the firewall rules and the associated policies.

Other similar products will of course all have their own approaches - for the most part you can control which traffic hits which policy, but not always what the order of operations is.

Anonymous

Feb 13th 2017

8 years ago

That advice can seriously lead someone astray if they're just starting out. While in theory it sounds great, in practice it's all in the details. We've been doing this for six years at the US bank I work for. Remember, just because it's decrypted does not mean it's accessible to a person. It's being decrypted on the fly and matched by inspection against a rule or policy set. The only times actual details are logged are if some traffic fires a rule. (Or some malicious administrator has decided to target someone specifically.)

Where it all falls apart is if you take the usual vendor advice to "exempt financial services, healthcare and government sites". Vendors have incredibly wide categories. Within "financial services" you won't find just large, reputable banks. You'll probably find car title loan companies, payday lending sites, etc. Within "government" you won't find just the IRS and Social Security Administration. You'll find the local library, the smallest county site still using unsigned Java document readers, etc. Healthcare gets you the single doctor and dentist office running on outdated WordPress but who had HTTPS turned on by the hosting site, as well as the major hospitals. (And don't think that a major organization is exempt from problems like these. They usually have so much decentralization and application sprawl that they have sites on their primary domain that are just as bad.)

It's far more effective to look at your logs after six months or so and bypass the companies and sites that your people actually use rather than exempt entire categories of sites.

Anonymous

Feb 14th 2017

8 years ago

The biggest part of why organisations exempt financial services from decryption is privacy and non-repudiation. The IT group simply cannot have possession of employees' online banking passwords (or other financial credentials), and it really doesn't matter what the size or reputation of their financial services org might be. Having that information available exposes the organisation to legal action for every "where did that withdrawal come from" moment that an employee might have.

On healthcare, it's absolutely about privacy. IT simply cannot know what health issues employees might be researching or what healthcare sites they might visit - that critical search term might indicate a health issue, or a health issue of a family member or friend, or just idle interest, but the logs can't make that differentiation and honestly it just plain doesn't matter - IT isn't allowed to have that info (and in lots of cases it's not HR's business either).

Typically these are two categories where the legal needs of the organisation trump the security risks, and in almost all cases rightly so.
