[Guest Diary] Learning by doing: Iterative adventures in troubleshooting

by Preston Fitzgerald, SANS BACS Student (Version: 1)

[This is a Guest Diary by Preston Fitzgerald, an ISC intern as part of the SANS.edu Bachelor's Degree in Applied Cybersecurity (BACS) program [1].

A DShield honeypot [2] running on a raspberry Pi can sometimes be a bit of a fickle thing. While some may enjoy a ‘set it and forget it’ experience, I’ve found that my device sometimes requires a bit of babysitting. The latest example of this popped up when I connected to the device to inspect logs last week. What began as curiosity about failed log parsing led to learning about the DShield updater.

Background

The honeypot collects various potentially interesting artifacts for analysis. One source of this data is the webhoneypot.json file /srv/db/webhoneypot.json. Using jq, we can quickly parse this JSON for information about what remote hosts we’ve seen or what user agents they presented when they made requests to the honeypot:

jq '.headers | .host' webhoneypot.json | sort | uniq > ~/ops/hosts-DD-MM

jq .useragent webhoneypot.json | sort | uniq > ~/ops/agents-DD-MM

Part of the experience of monitoring a system like this is finding what feels good to you and what practices help you produce results. Some handlers will work to implement automation to identify interesting artifacts. Some will hunt and peck around until something catches their eye. One thing I’ve found works for me is periodically dumping information like this to file.

Roadblock ahead

What happened last week? My trusty tool jq returned a cry for help:

parse error: Invalid numeric literal at line 1, column 25165826

honeypot.json is malformed in some way. To make matters worse, the Pi was periodically crashing. It’s difficult to get any meaningful work done when your SSH sessions have a lifespan of 2 minutes. I decided to pull the card so I could inspect it directly, removing the Pi itself from the equation.

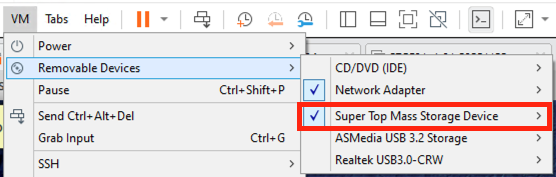

I plugged my card reader into a lab machine and instructed VMware to assign ownership of the device to a Slingshot[3] VM.

Figure 1: VMware Workstation can treat a device connected physically to the host as if it were connected directly to the guest Virtual Machine. This feature is accessible in the VM -> Removable Devices menu. This card reader is labeled Super Top Mass Storage Device

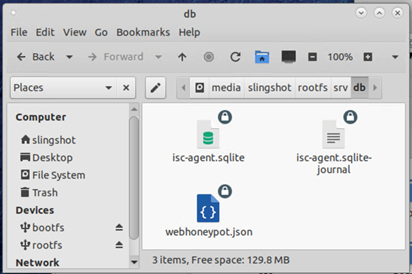

With the Pi’s storage now mounted to the VM, it’s possible to navigate to the offending file and inspect it.

Figure 2: Screenshot showing the mounted file system 'rootfs' and the contents of /srv/db including webhoneypot.json

Notably, this file occupies nearly half a gigabyte of the card’s limited storage space. That’s a big text file!

To the toolbox

Where did jq encounter its parsing error again?

parse error: Invalid numeric literal at line 1, column 25165826

It doesn’t like the 25,165,826th character (out of over 450 million total characters). How do we find out what’s in that position? What tools can we use? Another interesting quality of the file is that it consists of one single line. Go-to tools like head and tail are rendered less useful due to the single-line format. Though the file size is within vim’s documented maximum, I found that it struggled to open this file in my virtualized environment.

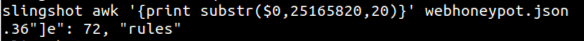

Thankfully awk’s substring feature will allow us to pluck out just the part of the file we are interested in working with.

Figure 3: Screenshot showing awk’s substring feature. Syntax: substr(string, starting index, length) here we are starting on character 25165820 and grabbing the following 20 characters.

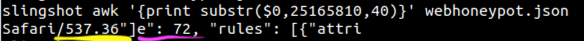

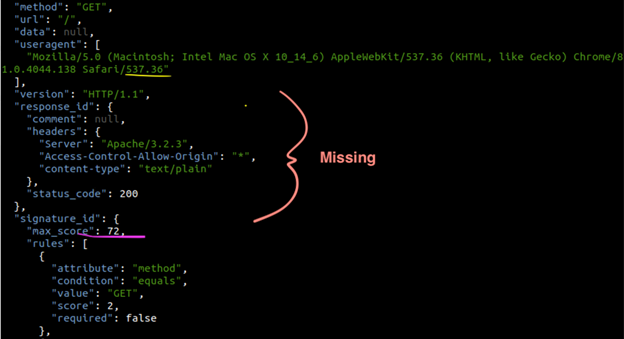

We can begin to see what’s going wrong here by manipulating the starting index and length of the substring and comparing it to prettified JSON that jq is able to output from an earlier entry before it encounters its parsing error:

Figure 4: Screenshot showing expanded substring to get more context before and after the parsing error

Figure 5: Screenshot showing sample JSON structure from part of the document before the parsing error. Here we can see the end of the user agent string underlined in yellow, and the e": 72 part underlined in pink. Comparing the output of awk to the JSON structure we can see just how much information is missing between the two.

The JSON structure has been destroyed because the text is truncated between the user agent and part of the signature_id object. Unfortunately, patching up the structure here and re-running jq revealed that there are many such holes in the data. It didn’t take more than a couple of these mending and jq processing cycles to realize that it was futile to try sewing it all back together.

Pivot

So far, we’ve discovered that we have one very large, corrupted log file. It is too damaged to try to piece back together manually and the structure is too damaged to parse it as JSON. How can we salvage the useful artifacts within?

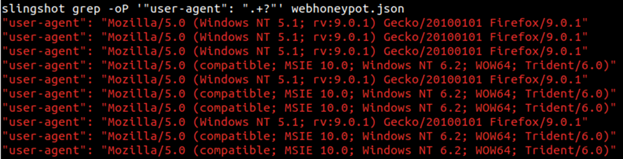

Let’s forget for a moment that this is meant to be JSON and treat it as any other text. By default, grep will return every line that matches the expression. We can use the -o option to return only the matched part of the data. This is useful because otherwise it would return the entire file on any match.

Return user agents:

Figure 6: Screenshot showing grep output matching the user-agent field in webhoneypot.json

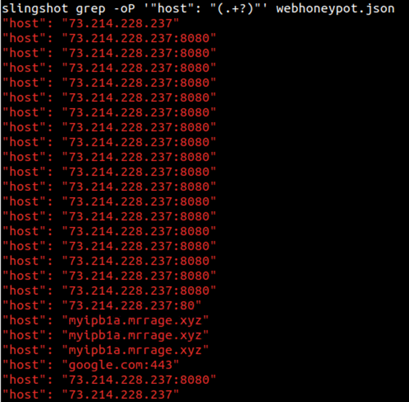

Return hosts:

Figure 7: Screenshot showing grep output matching the IP addresses and ports in webhoneypot.json

There are almost always options and many pathways that lead to success. By reaching for another tool, we can get the information we were looking for.

So what about the Pi?

Let’s quantify my observation that the device was crashing often. Just how often did this happen? I started by copying /var/log/messages from the card over to my local disk.

How much data are we working with? Let’s get a line count:

wc -l messages

This returned over 450,000 messages. That’s good news, we have some information to inspect. How many of those are startup messages?

grep -i booting messages | wc -l

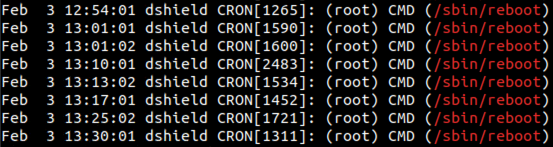

1,371 matches for boot messages. After checking the start and end date of the log, that’s roughly 200 reboots a day on average. Let’s see if syslog has additional details for us. After searching for messages related to initial startup and grabbing lines that appear before those messages, I was able to spot something. The log contains many mentions of reboot.

grep -i /sbin/reboot syslog

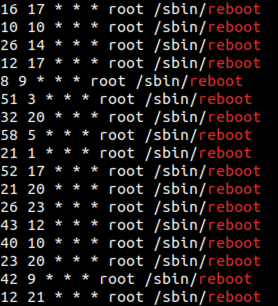

Figure 8: Screenshot showing a sample of the grep results for references to /sbin/reboot in syslog

How surprising! The Pi wasn’t crashing, it was being rebooted intentionally by a cron job. I copied the DShield cron located in /etc/cron.d/dshield and took a look at its contents.

grep -i /sbin/reboot dshield

Figure 9: A screenshot showing a number of cron jobs that trigger a reboot

Correction, it’s not a cron job—it’s several. Something has created 216 cron entries to reboot the Pi (this number is familiar, remember our ~200 reboots per day observation from the logs?). What could have made these entries? Let’s search the entire filesystem of the Pi for references to /sbin/reboot

grep -r ?/sbin/reboot? .

Figure 10: A screenshot showing the line in install.sh that appends reboot commands to /etc/cron.d/dshield.

The DShield installer itself creates these entries. I have version 93 which appears to be one version behind the current installer available on GitHub. The installer works by picking random time offsets. Because the firewall log parser uploads the sensor data before rebooting, the intent here is to spread the load so there isn’t a large spike of incoming sensor data at any one time throughout the day. So why would install.sh be running multiple times? You only run it once when you first install the sensor.

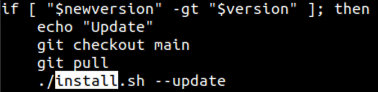

The answer is automatic updates. When you initially configure DShield you can enable automatic updates. The update script checks your current version and compares it to the latest version available on GitHub. Remember that my installed version was one behind current.

Figure 11: The updater compares the current DShield version to the latest available. If it sees that we are behind, it checks out the main branch of the repository and performs git pull to download the latest code before running the installer.

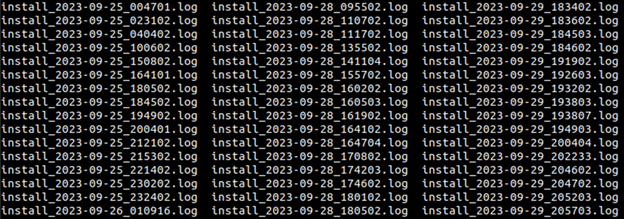

Inspecting /srv/log for install logs, it’s clear that the installer is running hundreds of times per day.

Figure 12: A screenshot showing a directory listing of /srv/log where we see timestamped logs indicating the installer was running many times per day.

So what happened?

Knowing for certain which problem happened first will require further log review, but my current hypothesis is this: At some point, the updater started the installer. The installer created a cron job to kick off a reboot but the random time set for the task happened to be very near the current time. The installer was unable to finish its work before the Pi shut itself down. This is supported by the fact that some of the install logs I reviewed seem to end abruptly before the final messages are printed out.

There are several errors found throughout these logs, including missing certificates, failed package update steps, locked log files, and ‘expected programs not found in PATH’. I believe some combination of these problems caused the installer to fail each time it was ran by the updater, resulting in a loop.

Remediation

Re-imaging the Pi will be the simplest way to get us out of this situation, but how could the problem be remediated in the long run?

Regardless of the initial cause of this problem, one potential fix for the issue of redundant cron jobs is this:

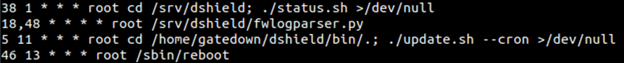

Before appending the cron job with the reboot and upload tasks, the installer could delete /etc/cron.d/dshield. It appears this file only has three unique entries:

Figure 13: A screenshot showing the four unique cron jobs used by DShield. We only need these entries, and not the hundreds of redundant lines.

By deleting the file and creating each of these jobs fresh when the installer runs, we can eliminate the risk of creating an 864-line cron configuration file.

It may also be advantageous to move this part of the installer script to the end of the process to eliminate the risk of the reboot firing before the script completes.

Most computer-inclined folks like their processes, their patterns, their Standard Operating Procedures. It’s important that we remain curious and nimble. Sometimes discovering something interesting or useful is the direct result of asking ourselves ‘why’ when something doesn’t seem right. Having the option to throw away a VM or revert it to snapshot is great. Throwing away a docker container that isn’t working the way we expect is convenient. Re-imaging a Pi to get it back to a known-good state helps us jump right back into our work. However, there can be real educational benefit when we slow down and perform troubleshooting on these things we generally view as ephemeral and when we share what we have learned, this can lead to improvements from which we all benefit.

[1] https://www.sans.edu/cyber-security-programs/bachelors-degree/

[2] DShield: https://github.com/DShield-ISC/dshield

[3] Slingshot Linux: https://www.sans.org/tools/slingshot/

--

Jesse La Grew

Handler

Comments